Entities

CSETv1 Taxonomy Classifications

Incident Number

146

GMF Taxonomy Classifications

Known AI Goal Snippets

(Snippet Text: "You can pose any question you like and be sure to receive an answer, wrapped in the authority of the algorithm rather than the soothsayer." Related Classifications: Question Answering)

Risk Subdomain

7.3. Lack of capability or robustness

Risk Domain

- AI system safety, failures, and limitations

Entity

AI

Timing

Post-deployment

Intent

Unintentional

Incident Reports

Reports Timeline

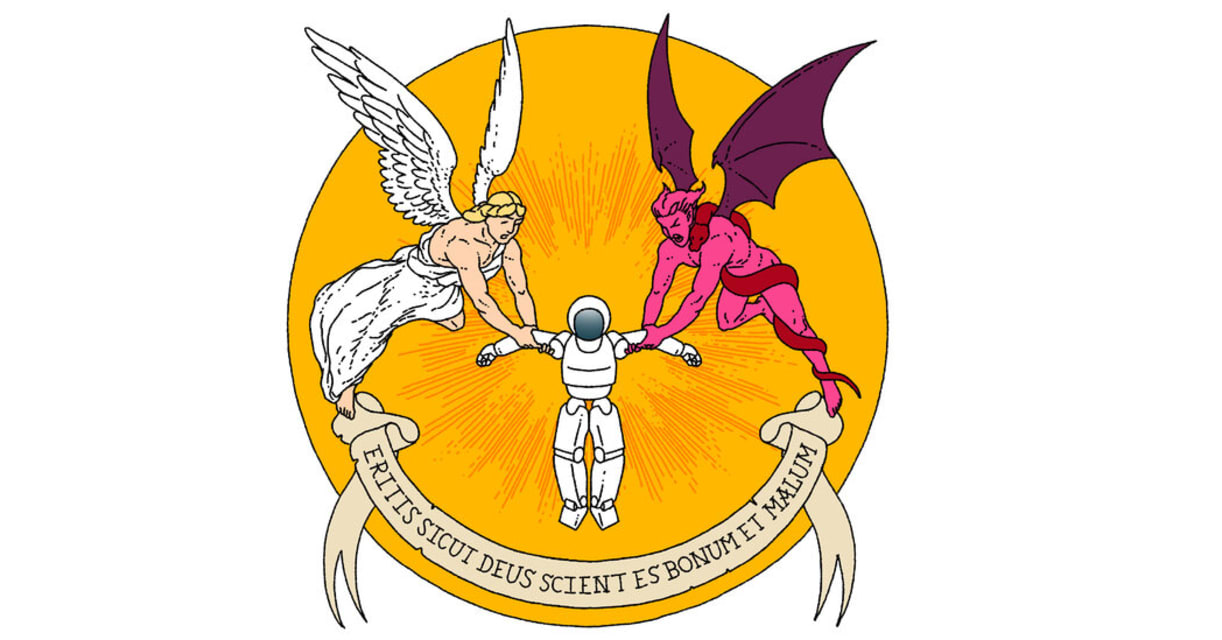

Got a moral quandary you don’t know how to solve? Fancy making it worse? Why not turn to the wisdom of artificial intelligence, aka Ask Delphi: an intriguing research project from the Allen Institute for AI that offers answers to ethical di…

We’ve all been in situations where we had to make tough ethical decisions. Why not dodge that pesky responsibility by outsourcing the choice to a machine learning algorithm?

That’s the idea behind Ask Delphi, a machine-learning model from t…

Researchers at an artificial intelligence lab in Seattle called the Allen Institute for AI unveiled new technology last month that was designed to make moral judgments. They called it Delphi, after the religious oracle consulted by the anci…

Variants

Similar Incidents

Inappropriate Gmail Smart Reply Suggestions

All Image Captions Produced are Violent
