Entities

CSETv1 Taxonomy Classifications

Incident Number

41

CSETv0 Taxonomy Classifications

Problem Nature

Unknown/unclear

Physical System

Software only

Level of Autonomy

High

Nature of End User

Expert

Public Sector Deployment

No

Data Inputs

Violent content from Reddit for the Norman algorithm, MSCOCO dataset for the control algorithm.

Risk Subdomain

7.3. Lack of capability or robustness

Risk Domain

AI system safety, failures, and limitations

Entity

Human

Timing

Pre-deployment

Intent

Intentional

Incident Reports

Reports Timeline

A neural network named "Norman" is disturbingly different from other types of artificial intelligence (AI).

Housed at MIT Media Lab, a research laboratory that investigates AI and machine learning, Norman's computer brain was allegedly warp…

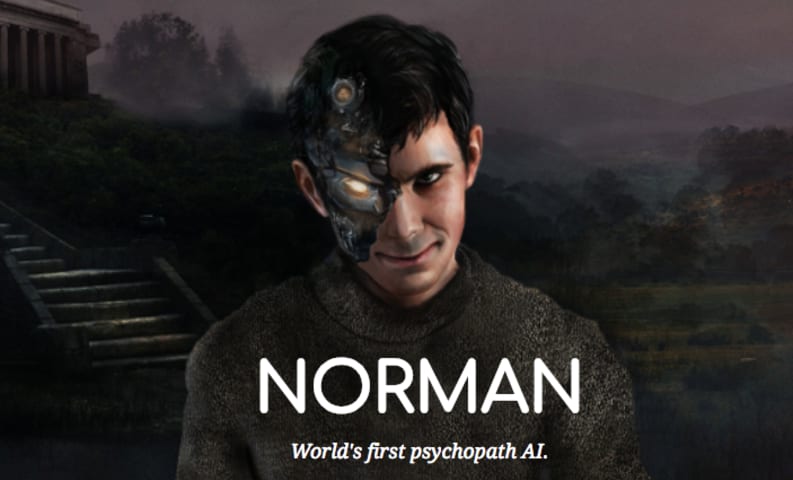

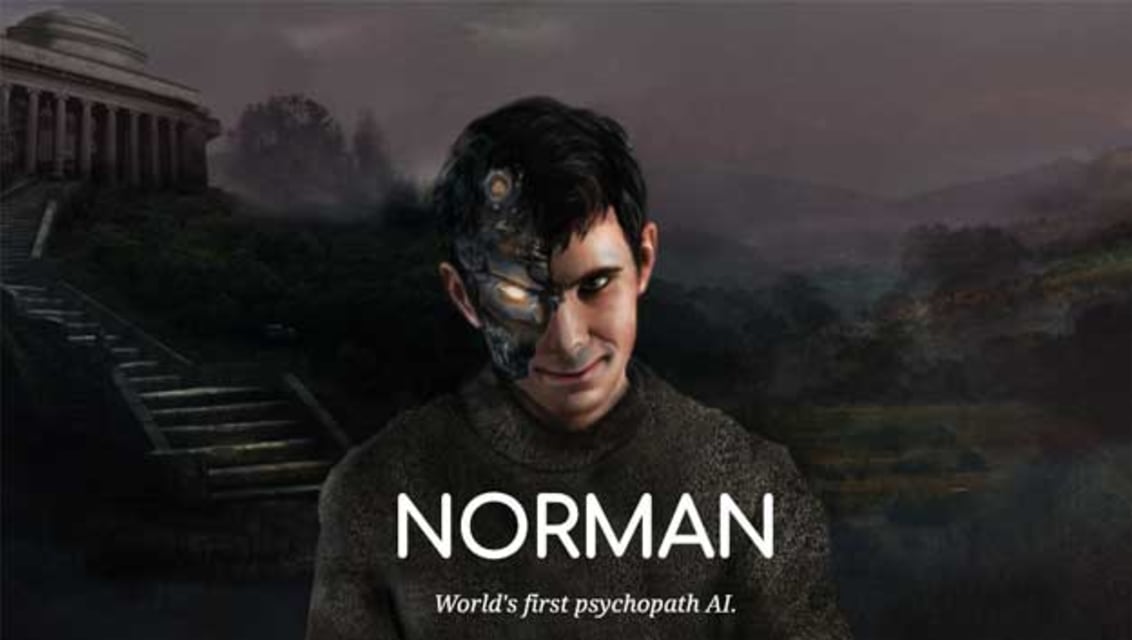

Image caption: Norman was named after Alfred Hitchcock's Norman Bates from his classic horror film Psycho. (Image copyright MIT)

Norman is an algorithm trained to understand pictures but, like its namesake Hitchcock's Norman Bates, it does not …

A team of scientists at the Massachusetts Institute of Technology (MIT) have built a psychopathic AI using image captions pulled from Reddit. Oh, and they’ve named it Norman after Alfred Hitchcock’s Norman Bates. This is how our very own Te…

In one of the big musical numbers from The Life Of Brian, Eric Idle reminds us to “always look on the bright side of life.” Norman, a new artificial intelligence project from MIT, doesn’t know how to do that.

That’s because Norman is a psyc…

Many people are concerned about the potential rise of malignant AI, with UK newspapers, in particular, worried about the ‘Terminator’ scenario of machines that are hostile to humanity.

Researchers at MIT have decided to explore this concept…

Artificial intelligence researchers have thus far attempted to make well-rounded algorithms that can be helpful to humanity. However, a team from MIT has undertaken a project to do the exact opposite. Researchers from the MIT Media Lab have…

I appreciate what MIT has done here. However, the work described in the article is useless, misleading, or both. All the training methods I've encountered in the literature do not maintain any kind of measure of the mutual information betwe…

Scientists at the Massachusetts Institute of Technology (MIT) trained an artificial intelligence algorithm dubbed "Norman" to become a psychopath by only exposing it to macabre Reddit images of gruesome deaths and violence, according to a n…

The Massachusetts Institute of Technology’s website for Norman, the world’s first psychopathic AI, is oddly cheerful and optimistic. A creepy combination of Norman Bates (from the 1960 Alfred Hitchcock movie Psycho) and a robot stares at you and …

Norman: MIT's artificial intelligence with psychopathic traits The experiment is based on the 1921 Rorschach test, which identifies psychopathic traits based on users' perception of inkblots.

Researchers at the Massachusetts Institute of Te…

MIT scientists’ newest artificial intelligence algorithm endeavor birthed a “psychopath” by the name of Norman.

Scientists Pinar Yanardag, Manuel Cebrian, and Iyad Rahwan exposed AI Norman, named after Anthony Perkins’ character in Alfred…

MIT Media Lab

Meet Norman.

He's not your everyday AI. His algorithms won't help filter through your Facebook feed or recommend you new songs to listen to on Spotify.

Nope -- Norman is a "psychopath AI", created by researchers at the MIT Med…

Norman always sees the worst in things.

That's because Norman is a "psychopath" powered by artificial intelligence and developed by the MIT Media Lab.

Norman is an algorithm meant to show how the data behind AI matters deeply.

MIT researche…

For some, the phrase “artificial intelligence” conjures nightmare visions — something out of the ’04 Will Smith flick I, Robot, perhaps, or the ending of Ex Machina — like a boot smashing through the glass of a computer screen to stamp on a…

Researchers at MIT have created a psychopath. They call him Norman. He's a computer. Actually, that's not really right. Though the team calls Norman a psychopath (and the chilling lead graphic on their homepage certainly backs that up), wha…

Photo: Matt Cardy (Getty Images)

We’ve all seen evil machines in The Terminator or The Matrix, but how does a machine become evil? Is it like Project Satan from Futurama, where scientists combined parts from various evil cars to create the …

MIT has created a new AI that we sincerely hope never escapes into the wild because this IS how you get Skynet. This AI is called Norman and it is a psychopath. How did Norman turn into a psycho? All the image data MIT fed Norman came from …

Scientists at the Massachusetts Institute of Technology unveiled the first artificial intelligence algorithm trained to be a psychopath. The AI was fittingly dubbed “Norman” after Norman Bates, the notorious killer in Alfred Hitchcock’s Psy…

Science fiction has given us many iconic malevolent A.I. characters. However, these are often figures like Terminator’s T-800 or Alien’s Ash who commit emotionless murder to pursue an end goal. Those which exhibit more unhinged parano…

MIT researchers created an artificial intelligence that they call “psychopathic” in order to show the biases that are inherent in AI research. When asked to look at Rorschach test blots, “Norman” always sees death. Here’s what Norman saw, c…

The development of artificial intelligence, Stephen Hawking once warned, will be “either the best or the worst thing ever to happen to humanity”. A new AI algorithm exposed to the most macabre corners of the internet demonstrates how we cou…

Researchers at MIT have programmed an AI using exclusively violent and gruesome content from Reddit.

They called it “Norman.”

As a result, Norman only sees death in everything.

This isn’t the first time an AI has been turned dark by the int…

Scientists at MIT have created an AI psychopath trained on images from a particularly disturbing thread on Reddit. Norman is designed to illustrate that the data used for machine learning can significantly impact its outcome. “Norman suffer…

Artificial Intelligence (AI) is solving many problems for humans. But, as Google CEO Sundar Pichai said in the company’s manifesto for AI, such a powerful technology “raises equally powerful questions about its use”. Google (Alphabet Inc.) …

Researchers at MIT have created 'Norman', the first psychopathic artificial intelligence to explain how algorithms are made, and to make people aware of AI's potential dangers

No, it's not a new horror film. It's Norman: also known as the f…

Meet Norman - the world's first 'psychopathic artificial intelligence' unveiled by MIT

It shares his name with the knife-wielding killer in Alfred Hitchc…

This experiment with Norman shows that it doesn't matter how rigorous the actual algorithm is if it's overexposed to harmful content. Algorithms like pymetrics actually do have a beneficial effect, but it's vital that it's looked over by a …

You can learn a lot from a psychopath, and you don't even have to binge-watch Netflix's "Mindhunter" to do it. You just need to study Norman, the artificial intelligence psychopath aptly named for the pivotal figure in Alfred Hitchcock's "P…

Variants

Similar Incidents

Deepfake Obama Introduction of Deepfakes

TayBot

AI Beauty Judge Did Not Like Dark Skin
