CSETv0 Taxonomy Classifications

Problem Nature

Unknown/unclear

Physical System

Software only

Level of Autonomy

Unclear/unknown

Nature of End User

Amateur

Public Sector Deployment

No

Data Inputs

14 hours of footage from Obama's public statements and addresses (2017), Jordan Peele's voice and lip movements (2018)

GMF Taxonomy Classifications

Known AI Goal Snippets

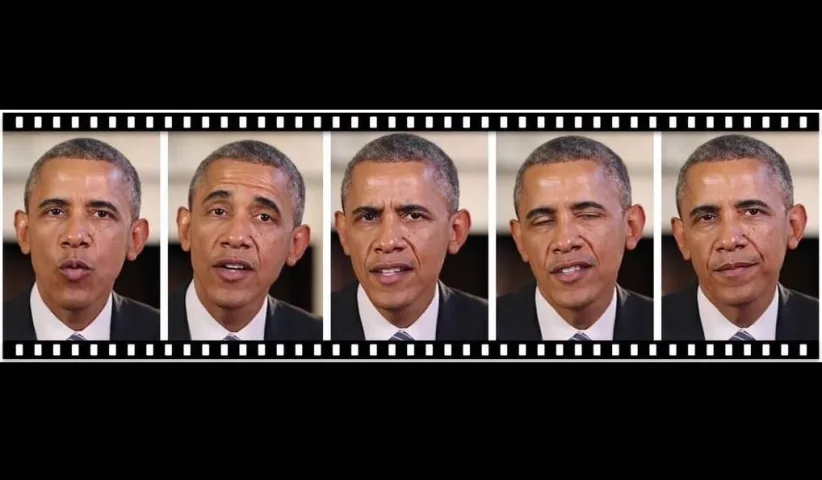

Snippet Text: The researchers used 14 hours of Obama's weekly address videos to train a neural network. Once trained, their system was then able to take an audio clip from the former president, create mouth shapes that synced with the audio and then synthesize a realistic looking mouth that matched Obama's.

Related Classifications: Deepfake Video Generation

CSETv1 Taxonomy Classifications

Incident Number

39

Notes (special interest intangible harm)

4.4 - Technically, misinformation was involved because the deepfakes portrayed Barack Obama saying things he never actually said. However, it did not result in or cause harm.

Special Interest Intangible Harm

no

Risk Subdomain

3.1. False or misleading information

Risk Domain

- Misinformation

Entity

Human

Timing

Pre-deployment

Intent

Intentional

Incident Reports

Reports Timeline

Photo: University of Washington.

Artificial intelligence software could generate highly realistic fake videos of former president Barack Obama using existing audio and video clips of him, a new study finds.

Such work could one day hel…

The researchers used 14 hours of Obama's weekly address videos to train a neural network. Once trained, their system was then able to take an audio clip from the former president, create mouth shapes that synced with the audio and then synt…

In a post-Photoshop world, most of us know that pictures can’t always be trusted. Now, thanks to artificial intelligence, it looks like video might lie to us too.

Researchers at the University of Washington have developed an AI that can alt…

Many of us have had to heed the warning of, "Don't put words in my mouth" at some point over the course of our lives, but generally speaking, no one actually means it in a literal sense. In time, though, thanks to deep learning and art…

Well, we’re sorry to tell you that things are about to get much, much worse!

At least, that’s based on a frankly crazy demonstration of artificial intelligence carried out by computer scientists at the University of Washington. Using a cutt…

Researchers have developed a new tool, powered by artificial intelligence, that can create realistic-looking videos of speech from any audio clip, and they've demoed the tech by synthesising four artificial videos of Barack Obama saying the…

Researchers at the University of Washington have produced a photorealistic former US President Barack Obama.

Artificial intelligence was used to precisely model how Mr Obama moves his mouth when he speaks.

Their technique allows them to put…

If you thought the rampant spread of text-based fake news was as bad as it could get, think again. Generating fake news videos that are indistinguishable from real ones is growing easier by the day.

A team of computer scientists at the Univ…

A computer programme has been created that can edit videos of people speaking to make it look like they have said something when they haven't.

Researchers at the University of Washington have produced a realistic video of former US presiden…

In news that has made pranksters around the world pay attention, there is now a computer program that can create a realistic simulated video of someone speaking.

Researchers at the University of Washington have proved their point by creatin…

He may no longer be in the White House, but former US president Barack Obama is still making some presidential speeches, although all is not as it appears.

Despite looking and sounding exactly like the real deal the new Obama is ac…

A new breed of video and audio manipulation tools allows for the creation of realistic-looking news footage, like the now infamous fake Obama speech.

In an age of Photoshop, filters and social media, many of us are used to seeing manipulated …

It seems that nowadays, there isn’t a day that passes by without someone proclaiming “fake news”, that now-infamous phrase that rose to prominence during the last American election and is now being bandied about ad nauseam.

But as any inte…

A neural network was trained on hours of footage to produce this video of President Barack Obama lip-syncing.

It always starts with porn.

What first revealed the internet's power to distribute information? The immense and immediate explosion of …

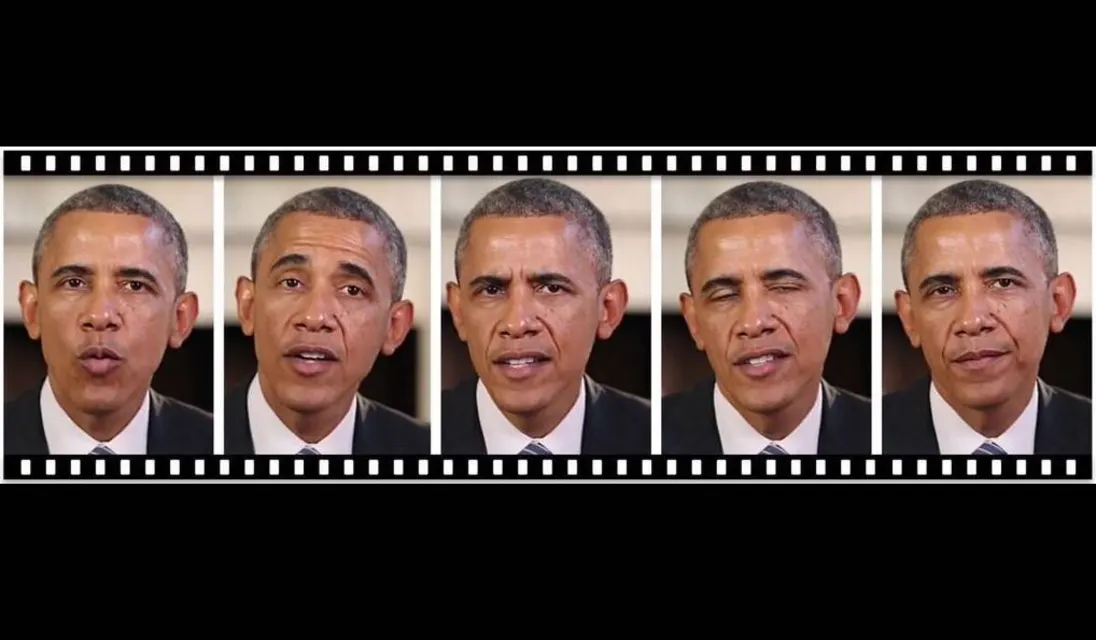

What does the future of fake news look like? No one really knows, but here’s a little sampler from Jordan Peele and BuzzFeed, who teamed up to make the above PSA. Using some of the latest AI techniques, Peele ventriloquizes Barack Obama, ha…

Fake news comes in all shapes and sizes, and unfortunately, it’s becoming more difficult to detect as technology advances. Courtesy of BuzzFeed and Get Out director Jordan Peele, we now have an appropriately terrifying idea of what it may l…

It starts with a clickbait-y title -- "You Won't Believe What Obama Says In This Video!" -- and then delivers on that promise.

BuzzFeed released a video on Tuesday that at first appears to show former US President Barack Obama conveying a w…

An exercise in creating a humorous faked video of Barack Obama is in reality a disturbing preview of how AI can be used to manipulate ordinary people. Ironically, it turns out, AI might also be our savior from fake news at the same time.

Wo…

ANALYSIS/OPINION:

Fake news, meet artificial intelligence.

A video created by Oscar-winning filmmaker Jordan Peele and released by BuzzFeed appears to show Barack Obama referencing the movie “Black Panther,” remarking on HUD Secretary Ben C…

The technology has both astounded and frightened people in equal measure, but a new video highlights just how influential AI has become in news reporting.

Not so long ago – and even today – the authenticity of a piece of news was based on p…

If you, like me, believe yourself to be a scrupulous consumer of news, full of healthy skepticism and too smart for that fake-news bullshit, Jordan Peele has got a big, stiff-ass cup of wake-the-hell-up for you. Yesterday, Peele released a …

![Creepy "Barack Obama" video shows how easy it is to manipulate what people say [video]](https://res.cloudinary.com/pai/image/upload/f_auto/q_auto/c_fill,h_480/v1/reports/gcs.thesouthafrican.com/2015/03/Barack-Obama.jpg)

We live in crazy times, where #FakeNews and data gathering is increasingly shaping the discourse.

The revelations about Cambridge Analytica have shed some light on just how deep the rabbit hole goes.

According to the New York Times and B…

The problem with reality is that discerning what truly is can take an enormous amount of work. Take science, for instance. There is actually pretty consistent data on what types of food most people should be eating — particularly if you do deep di…

There’s a video making its rounds online in which former President Barack Obama is cosigning the efforts of Erik Killmonger in Black Panther, offering a controversial opinion about HUD Secretary Ben Carson, and calling President Donald Trum…

How will artificial intelligence affect jobs?

Let’s hear from Barack Obama on the future of the world.

To learn more, check out: 10 Key Robotics Facts You Need to Know and 10 Ways Robotics Could Transform Our Future.

“All of this is fun and late night comedy and video games – until it isn’t,” says former White House senior advisor David Edelman

David Edelman, former special adviser to Presidents Bush and Obama on technology and cyber security, was worki…

Rami Niemi

“President Trump is a complete and total dipshit.” So announced Barack Obama, in a video released on YouTube earlier this year. Uncharacteristic, certainly, but it appeared very real. It was, however, a falsified video made — by …

A new technique using artificial intelligence to manipulate video content gives new meaning to the expression “talking head.”

An international team of researchers showcased the latest advancement in synthesizing facial expressions—including…

A minute-long video of Barack Obama has been seen more than 4.8 million times since April. It shows the former U.S. president seated, with the American flag in the background, speaking directly to the viewer and using an obscenity to refer …

Similar Incidents

All Image Captions Produced are Violent

TayBot

AI Beauty Judge Did Not Like Dark Skin
