Entities

CSETv0 Taxonomy Classifications

Problem Nature

Specification

Physical System

Software only

Level of Autonomy

High

Nature of End User

Amateur

Public Sector Deployment

No

Data Inputs

email text

CSETv1 Taxonomy Classifications

Incident Number

17

Special Interest Intangible Harm

no

Date of Incident Year

2017

Date of Incident Month

11

Date of Incident Day

03

Estimated Date

Yes

Risk Subdomain

7.3. Lack of capability or robustness

Risk Domain

7. AI system safety, failures, and limitations

Entity

AI

Timing

Post-deployment

Intent

Unintentional

Incident Reports

Reports Timeline

Ever wished your phone could automatically reply to your email messages?

Well, Google just unveiled technology that's at least moving in that direction. Using what's called "deep learning"—a form of artificial intelligence that's rapidly re…

Just a few months ago, Google really started showing off its newest secret weapon: a robot brain that learns. Using machine learning algorithms that actually get smarter as they practice, Google's been able to identify your loved ones in yo…

There's actually a lot going on behind the scenes to make Smart Reply work. Inbox uses machine learning to recognize emails that need responses and to generate the natural language responses on the fly. If you're interested in how Smart Rep…

Not every email deserves a handcrafted response. Sometimes, all it takes is a sentence to answer the one burning question that someone has dropped in your inbox, which is why Google is using the power of machine learning to make email triag…

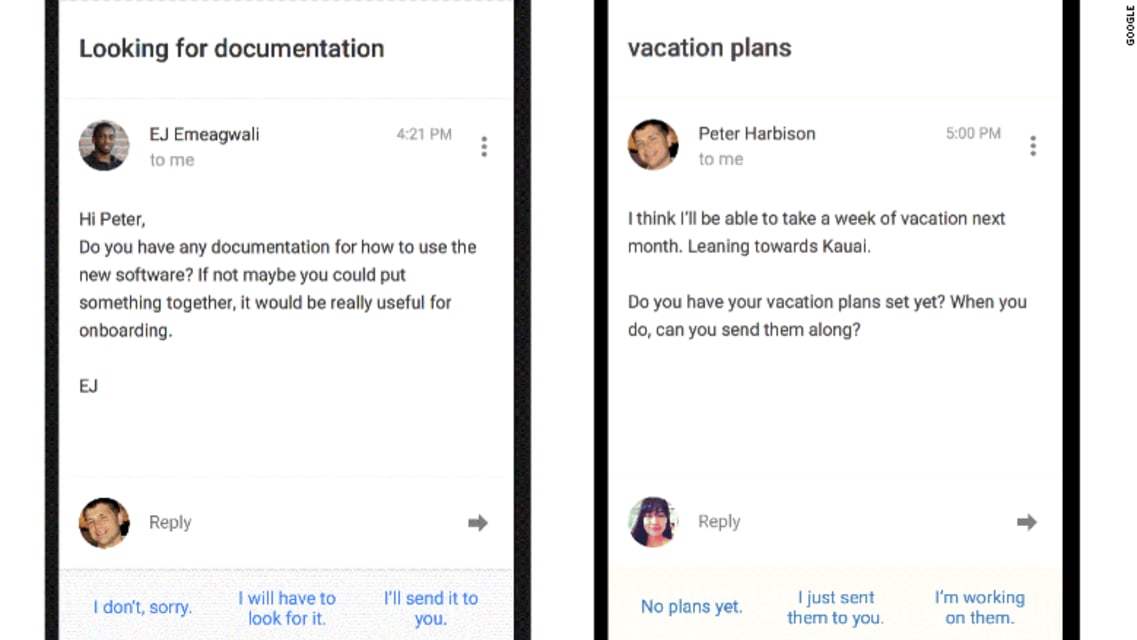

Replying to routine email requests just got easier with a new smart reply feature for Gmail users who get their email through Google Inbox. Starting this week, the app's Smart Reply feature will analyze incoming email messages and offer a s…

Google will soon scan the content of your emails and serve up what it thinks is the perfect reply.

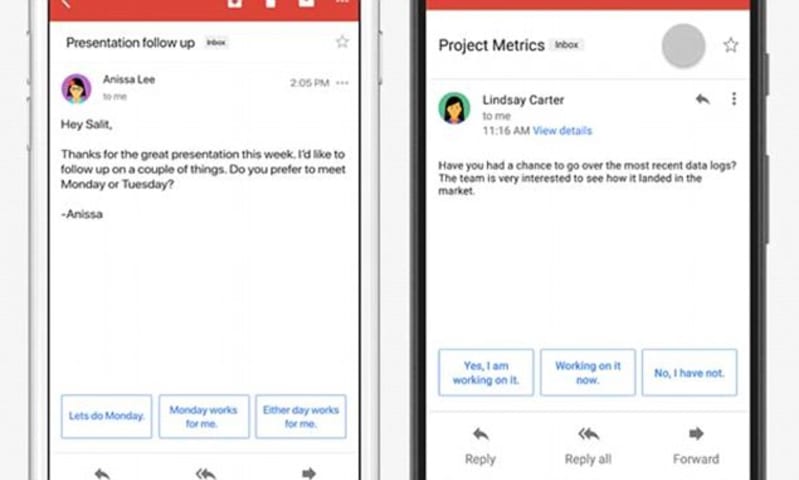

The new feature, called Smart Reply, identifies Gmail emails that require a response and presents three options for replies. Smart Reply will…

When Google launched Inbox, its most recent email app from the Gmail team, it touted the app's ability to act almost like an assistant. Now, a year later, Google is making the app more like an aide than ever.

The company will soon be rollin…

On April 1, 2009, Google unveiled Gmail Autopilot, a plug-in that promised to read and generate contextually relevant replies to the messages piling up in users’ inboxes. “As more and more everyday communication takes place over email, lots…

A system Google designed for replying to emails proved a little friendlier than anyone expected.

There are quite a few humans out there who are absolutely convinced robots will kill us the moment they become self-aware. But what if the exac…

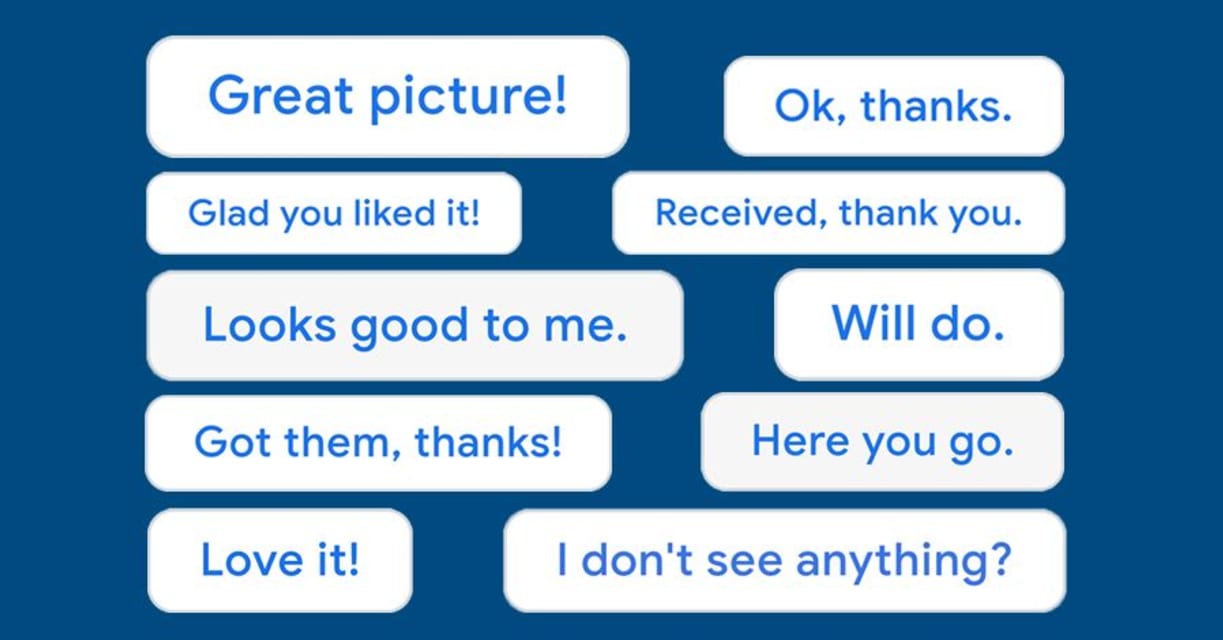

If you use Google products for email, you may have noticed those suggested replies at the bottom of your mobile inbox. These give users options to choose short and snappy responses to emails, such as, “Will do,” “I am working on that now,” …

Image: Google. Photo: Konstantin Sergeyev

The most recognizable feature of Gmail’s newly rolled-out redesign is the so-called smart reply, wherein bots offer three one-click responses to each mail message. Say your email contains the words …

“Thanks for letting me know.”

Have you noticed an uptick in this phrase appearing in emails, on social media, and IRL? Do you find yourself saying it even though maybe a year ago you would never use the phrase? Is there a new wave of semi-f…

When Jess Klein emailed her mother to make plans, the response startled her.

“Cool, see you there.”

Ms. Klein, a 38-year-old from Brooklyn, was suspicious. “Why is my mom talking like a Valley Girl?” she wondered.

She...

Around 10 percent of Gmail responses are now generated with the Smart Reply feature, according to the Wall Street Journal, and it has been available on Gmail apps since last year. Ajit Varma, Google's director of product management, told th…

After users complained that Gmail’s Smart Reply wasn’t so smart, Google announced that it will soon give desktop users the ability to disable the A.I.-based feature. Although the time-saving feature relies on artificial intelligence to come…

The Wall Street Journal is out today with a quick story on the rollout and reception of Gmail’s new Smart Reply feature, and it comes with a few interesting bits of information and anecdotes from random users and Google alike — one of which…

Romantic AI: Google Had to Change Gmail's New Smart Reply Responses Because it Kept Suggesting "I Love You"

How do you feel about Gmail’s new Smart Reply tool? We’re hearing mixed reports. On one hand it’s super handy to be able to tap/click that “Thank you so much!” button when you’re in a rush.

However, users are reporting the suggestions aren’…

Google recently made its handy 'Smart Reply' feature available to Gmail's 1 billion-plus users.

But early prototypes of the tool didn't work so seamlessly, according to the Wall Street Journal.

In one case, the algorithms powering Gmail's '…

A few days ago, I received a short, effective email in my inbox: "Sounds good!"

I had to pause. Although that was the response I wanted — I was arranging a meeting — I wondered: Did he really send that, or did he simply hit Google's automat…

From: Corinne Purtill (Quartz)

To: Corinne Purtill (Gmail)

Mon, Oct 1, 2018 at 11:08 AM

At its annual developers conference earlier this year Google introduced “smart compose,” a new Gmail feature that helps users complete their sentences. …

Google will no longer continue support for Reply, an experimental app that offers smart reply responses to various messaging apps such as Slack, Hangouts, and Messenger. The app launched earlier this year as part of Google’s Area 120 divisi…

Variants

Similar Incidents

TayBot

Nest Smoke Alarm Erroneously Stops Alarming
