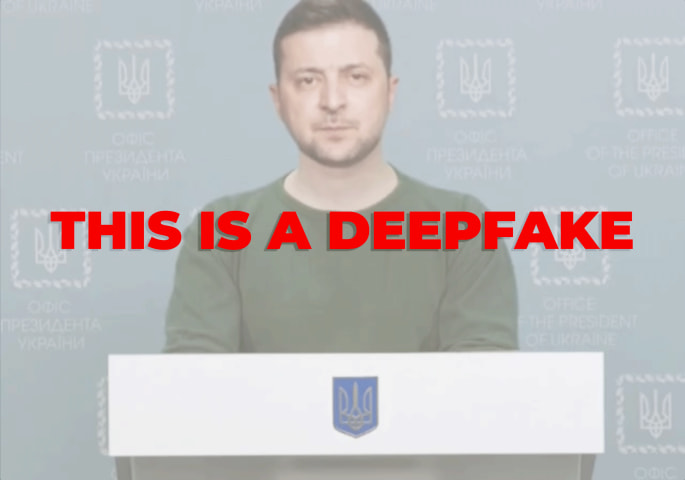

Description: Researchers and European officials reported that Russian operatives have been using purportedly AI-generated posts, videos, and websites to influence Moldova's September 2025 parliamentary elections. Networks of more than 900 accounts across TikTok, Facebook, Instagram, Telegram, and YouTube have reportedly spread fabricated narratives, including misogynistic and false smears of President Maia Sandu.

Entities

Alleged: Unknown generative AI developers developed an AI system deployed by Storm-1679, Storm-1516, Russian state-linked actors, Russian disinformation operators, Matryoshka and Influence operation groups, which harmed Moldovan voters, Moldovan democratic institutions, Maia Sandu, General public of Moldova, General public, European Union, Epistemic integrity, Electoral integrity and National security and intelligence stakeholders.

Alleged implicated AI system: Unknown generative AI systems

Incident Stats

Risk Subdomain

The seven risk domains are further divided into 23 subdomains, which provide an accessible and understandable classification of hazards and harms associated with AI.

4.1. Disinformation, surveillance, and influence at scale

Risk Domain

The Domain Taxonomy of AI Risks classifies risks into seven AI risk domains: (1) Discrimination & toxicity, (2) Privacy & security, (3) Misinformation, (4) Malicious actors & misuse, (5) Human-computer interaction, (6) Socioeconomic & environmental harms, and (7) AI system safety, failures & limitations.

- Malicious Actors & Misuse

Entity

Which, if any, entity is presented as the main cause of the risk

AI

Timing

The stage in the AI lifecycle at which the risk is presented as occurring

Post-deployment

Intent

Whether the risk is presented as occurring as an expected or unexpected outcome from pursuing a goal

Intentional

Incident Reports

Since returning to the White House in January, President Trump has dismantled the American government's efforts to combat foreign disinformation. The problem is that Russia has not stopped spreading it.

How much that matters can now be seen…

Variants

A "variant" is an AI incident similar to a known case—it has the same causes, harms, and AI system. Instead of listing it separately, we group it under the first reported incident. Unlike other incidents, variants do not need to have been reported outside the AIID. Learn more from the research paper.