CSETv1 Taxonomy Classifications

Incident Number

53

Notes (special interest intangible harm)

Propagation of systemic racial bias by Google search

Special Interest Intangible Harm

yes

Date of Incident Year

2012

Date of Incident Month

04

Estimated Date

Yes

CSETv0 Taxonomy Classifications

Problem Nature

Robustness, Assurance

Physical System

Software only

Level of Autonomy

High

Nature of End User

Amateur

Public Sector Deployment

No

Data Inputs

user input, images

Risk Subdomain

1.1. Unfair discrimination and misrepresentation

Risk Domain

1. Discrimination and Toxicity

Entity

AI

Timing

Post-deployment

Intent

Unintentional

Incident Reports

Reports Timeline

On occasion, I ask my university students to follow me through a day in the life of an African American aunt, mother, mentor, or friend who is trying to help young women learn to use the Internet. In this exercise, I ask what kind of things …

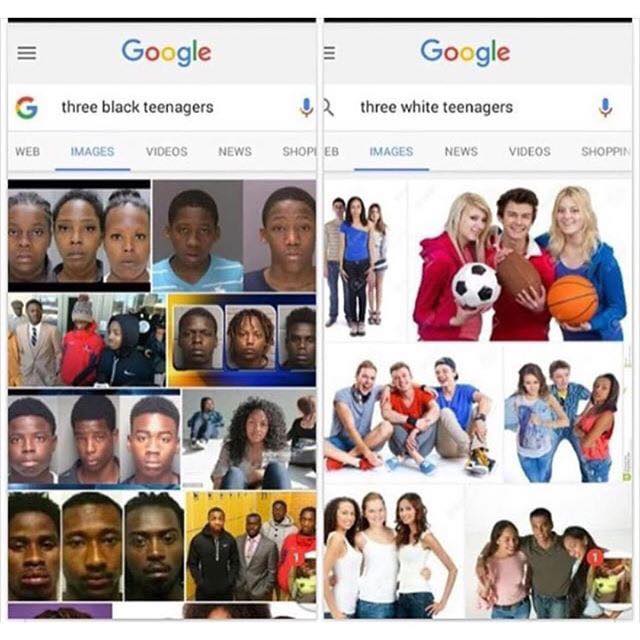

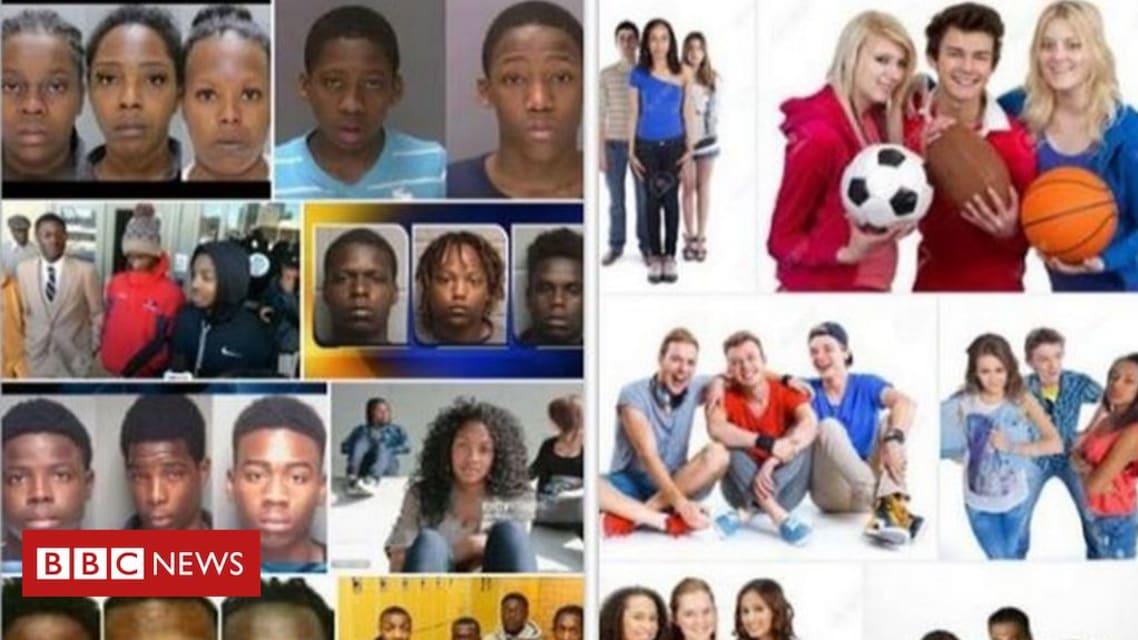

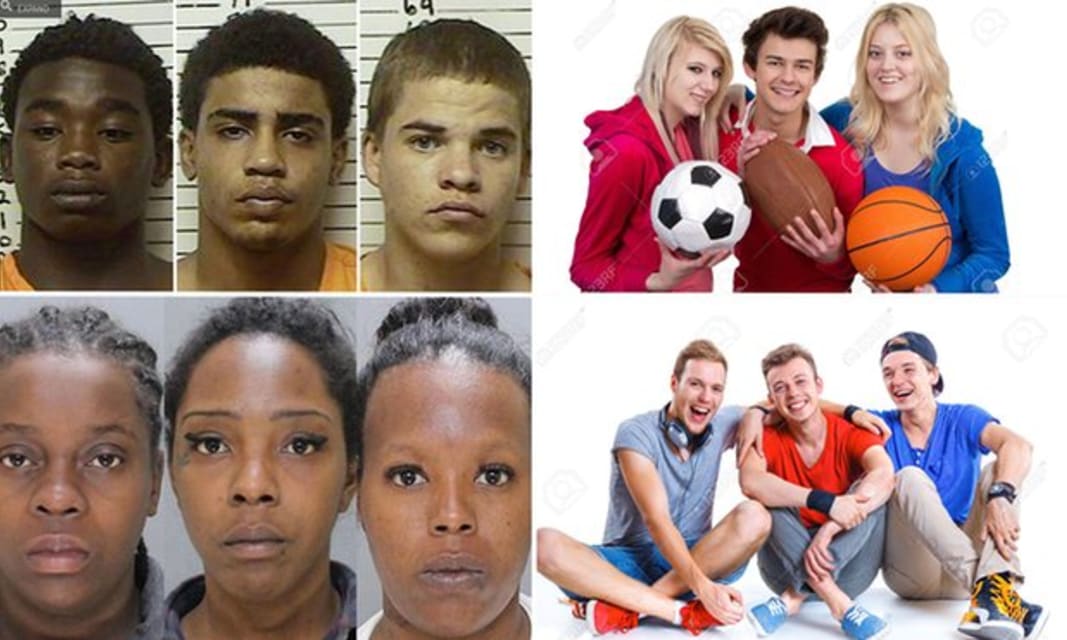

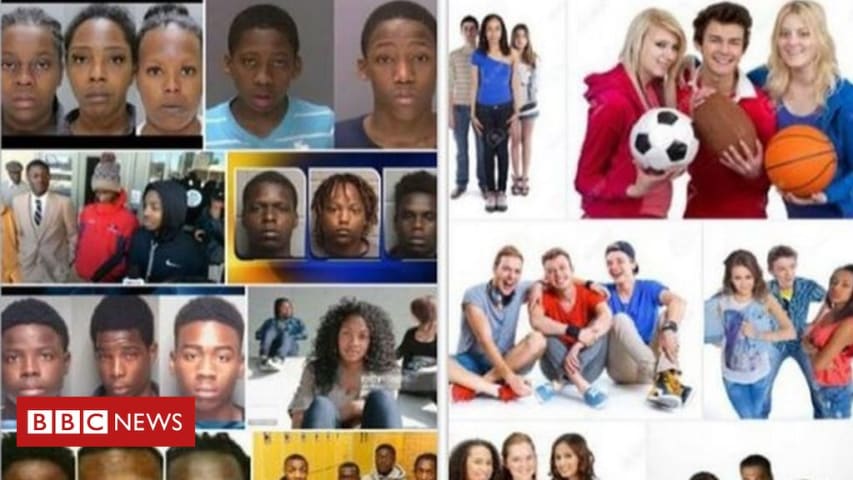

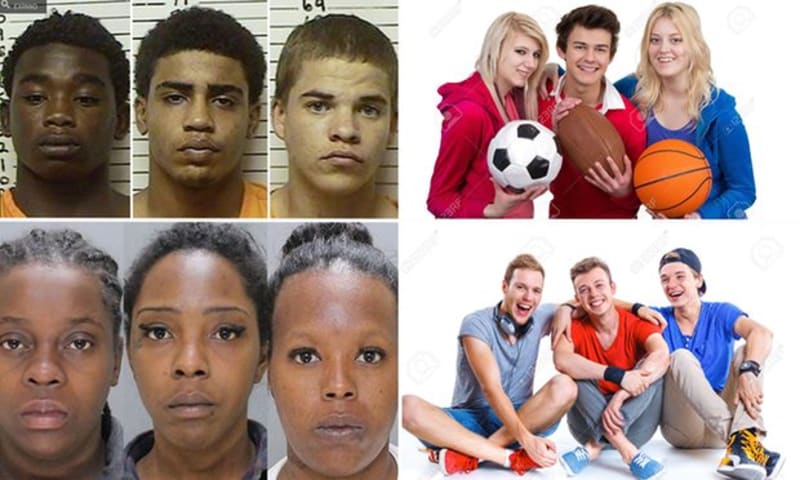

What is the difference between the image search results when a person types “Three Black Teenagers” into Google and then types “Three White Teenagers”?

Some people found the results disturbing, and some are calling Google racist; Watc…

On Monday, Twitter user @iBeKabir learned that searching the phrase "three black teenagers" on Google images yields almost exclusively mugshots of black teens.

Curious, @iBeKabir says, "Let's just change the color" and swaps out "black" for…

A video shows image search results where the only difference in the search is "white" versus "black". In the first case stock photography is returned, while in the second, mugshots are returned.

A man compared the results for Googling ‘Three black teenagers’ and ‘Three white teenagers’ – and the results have ignited debate…

What happens when you type: "Three black teenagers" into a Google image search?

Twitter user @ibekabir reposted a video showing someone doing just that, and thousands of social media users responded - because m…

A Google image search is going viral on Twitter as it’s said to highlight the pervasiveness of racial bias and media profiling.

A dude named Kabir Alli posted this clip on Twitter of himself carrying out a simple search of ‘three black teen…

When one searches a stock photo website like Shutterstock for “three black teenagers,” photos like the one above of three cute black teenagers by a photographer dubbed “Logo Boom” show up. Perhaps Google Images should take notes from the st…

Google image search 'three black teenagers' and then 'three white teenagers'.

A Twitter video of a man typing in this supposedly unremarkable search has gone massively viral since it was uploaded yesterday afternoon.

That's because his clip…

This week Twitter user Kabir Alli posted a video of him carrying out two specific searches on Google. The search for “three white teenagers” produced smiling and happy generic images of white teenagers, while the search for “three black tee…

A Google Image search which reveals starkly different results for 'three black teenagers' and 'three white teenagers' has sparked anger on social media.

'Three black teenagers' was trending on Twitter this week after 18-year-old Kabir Alli …

The short answer to why Google's algorithm returns racist results is that society is racist. But let's start with a lil' story.

On June 6 (that's Monday, for those of you keeping track at home) Kabir Alli, an 18-year old in Virginia, posted…

If you searched for “three white teenagers” on Google Images earlier this month, the result spat up shiny, happy people in droves — an R.E.M. song in JPG format. The images, mostly stock photos, displayed young Caucasian men and women laugh…

And just to make sure it’s not some sort of algorithmic Google search that only this guy is seeing:

Screen Shot 2016-06-08 at 11.00.47 AM

Screen Shot 2016-06-08 at 11.01.15 AM

Okay I’m sorry but those three dudes in the white teenagers pic …

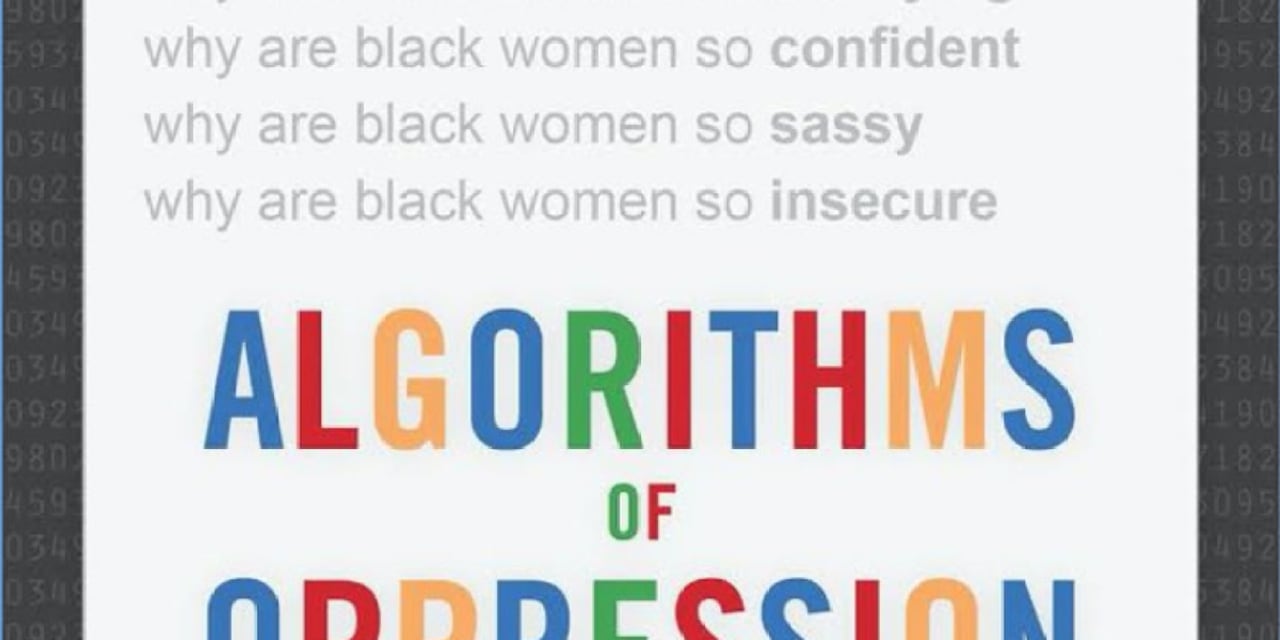

The internet might seem like a level playing field, but it isn’t. Safiya Umoja Noble came face to face with that fact one day when she used Google’s search engine to look for subjects her nieces might find interesting. She entered the term …

Gregory Bush is arraigned on two counts of murder and 10 counts of wanton endangerment Thursday, Oct. 25, 2018, in Louisville, Ky. Bush fatally shot two African-American customers at a Kroger grocery store Wednesday and was swiftly arrested…

Variants

Similar Incidents

Images of Black People Labeled as Gorillas

FaceApp Racial Filters

Sexist and Racist Google Adsense Advertisements