Entities

CSETv0 Taxonomy Classifications

Problem Nature

Unknown/unclear

Physical System

Software only

Level of Autonomy

Low

Nature of End User

Amateur

Public Sector Deployment

No

Data Inputs

Photos of faces

CSETv1 Taxonomy Classifications

Incident Number

60

AI Tangible Harm Level Notes

Annotator 1:

3.3 - Although there was no tangible harm, the adverse outcomes of the incident were directly related to the AI.

Notes (special interest intangible harm)

Annotator 1:

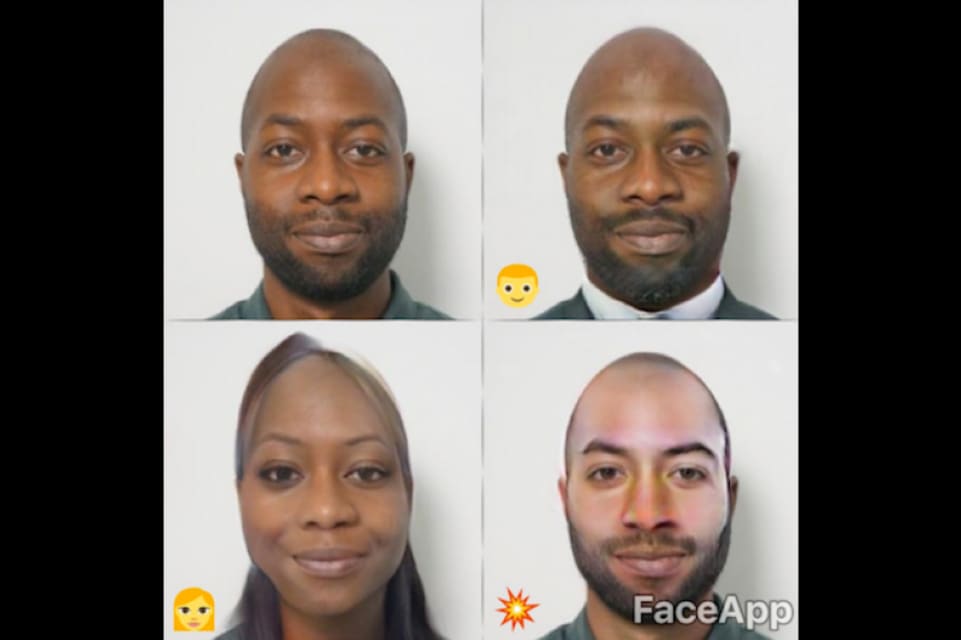

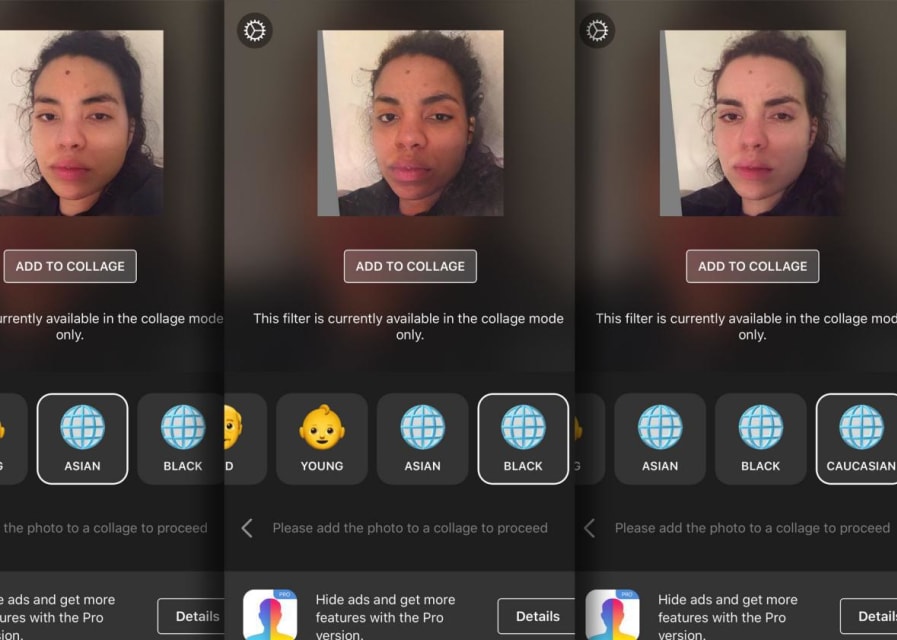

4.4 - FaceApp added a filter that allowed users to look like different races/ethnicities. It also added a "hot" filter that automatically lightened skin color.

Special Interest Intangible Harm

Yes

Risk Subdomain

1.1. Unfair discrimination and misrepresentation

Risk Domain

Discrimination and Toxicity

Entity

AI

Timing

Post-deployment

Intent

Unintentional

Incident Reports

Reports Timeline

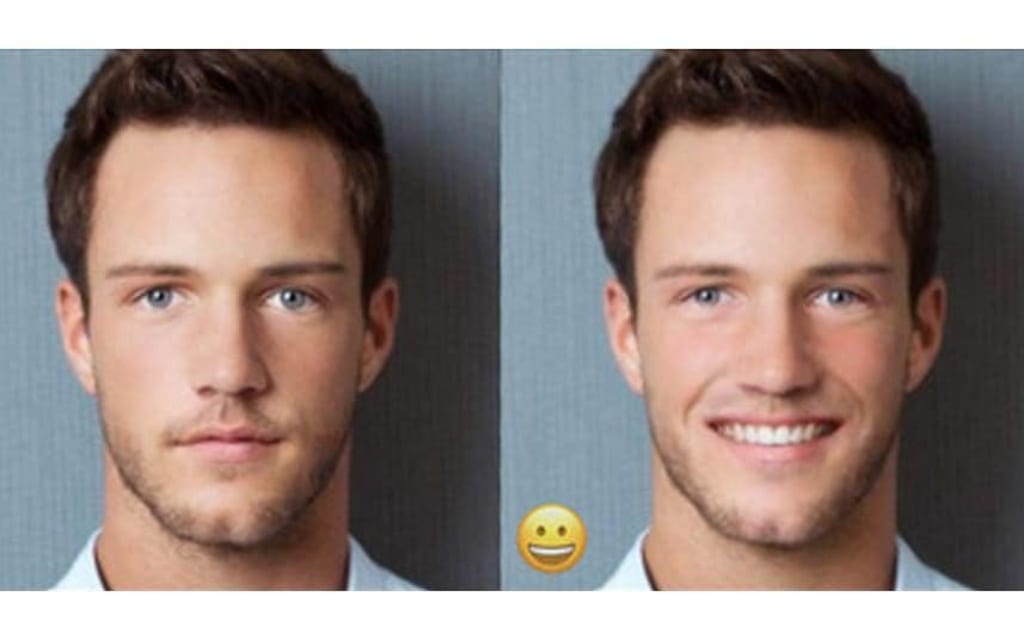

Looks like FaceApp, the new face-morphing craze, already needs a makeover.

Launched in January and hailing from Russia, FaceApp is catching interest for its artificial intelligence technology and intriguing filters that can make you look ol…

A viral app that adds filters to users' selfies to change their appearance has backtracked on its latest update after it was accused of racism over its new range of ethnic filters.

FaceApp, which uses facial recognition to change users' expres…

The makers of a face-morphing app have apologised after users accused them of creating a "racist" filter.

FaceApp can change portraits to make people look older, change gender or become "more attractive".

But when users with darker skin ton…

People who actually want to see their faces reflected in their phone screens have been having fun with FaceApp recently, with Facebook and Twitter currently overloaded with images of users sharing what they look like young, old and "hot" as…

Twitter/Terrance AB Johnson

It happened—a tech company did a bad thing again. This time, it's the super popular face-morphing app called, wait for it…FaceApp.

For some reason unknown to me, FaceApp went gangbusters this week after launching…

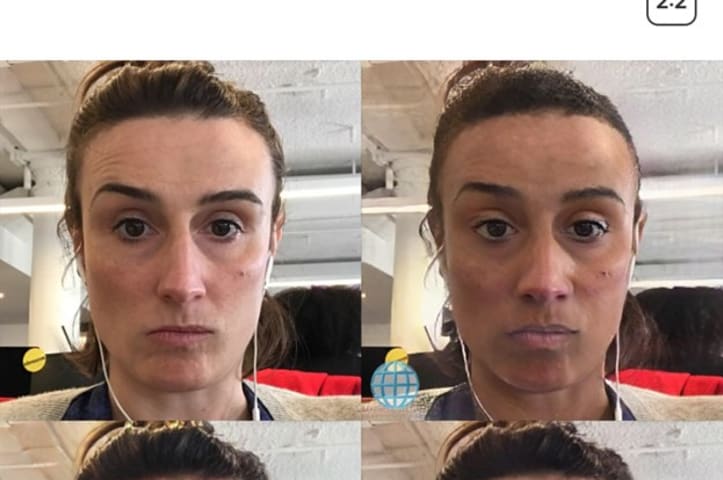

The creator of an app which changes your selfies using artificial intelligence has apologised because its “hot” filter automatically lightened people’s skin.

FaceApp is touted as an app which uses “neural networks” to change facial characte…

A selfie app that renders users’ faces in varying styles removed one of its filters following criticism that it Whitewashed the faces of people of color.

Mic reports that after receiving complaints, FaceApp developers first changed the func…

FaceApp: Experts have privacy concerns about popular face transformation app

FaceApp has gone viral. Why? Because it lets you transform your face in hilarious ways.

But privacy advocates warn you could be giving up much more informa…

Social media is awash right now in selfies with weirdly realistic facial transformations thanks to a viral app, appropriately called “FaceApp.” FaceApp lets users add filters to faces to make them smile, age, and change gender, with a surpr…

Shona Ghosh/Business Insider

Beauty filters on popular smartphones turn you paler in selfies

If you own a phone which runs Android, the chances are it has a camera setting you don’t know about.

Popular smartphones like the Samsung Galaxy S8…

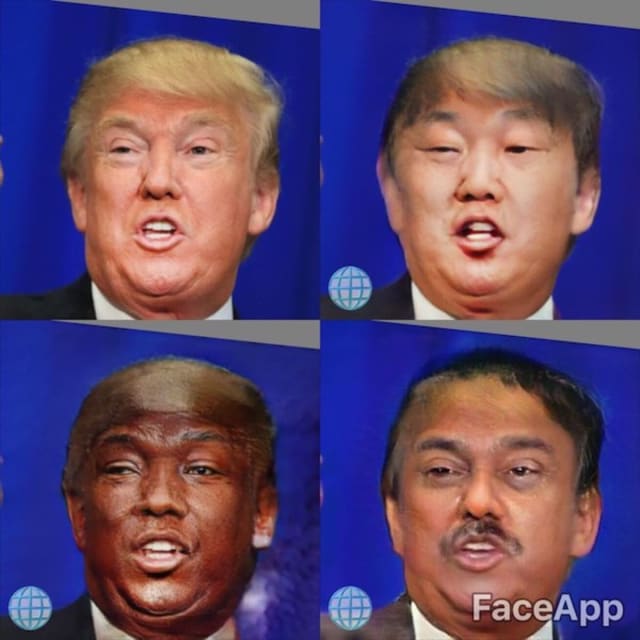

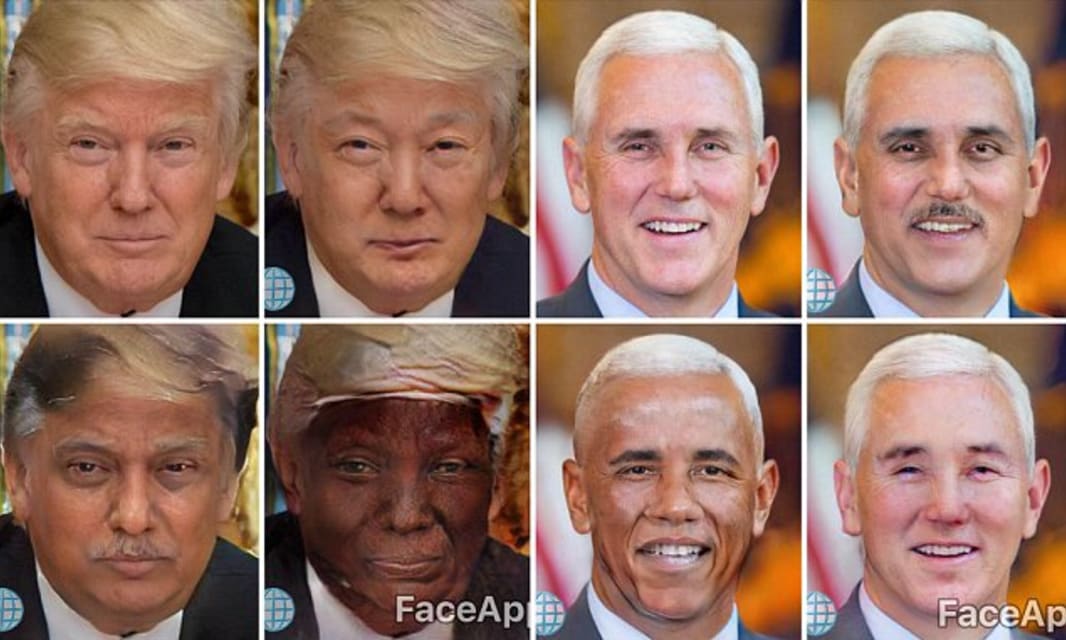

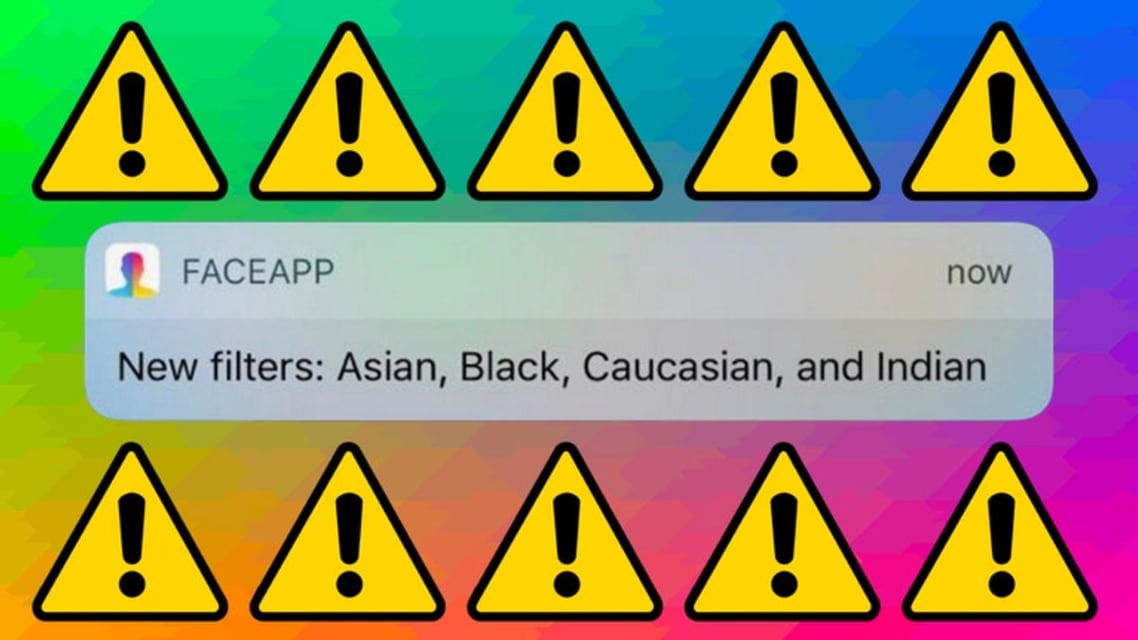

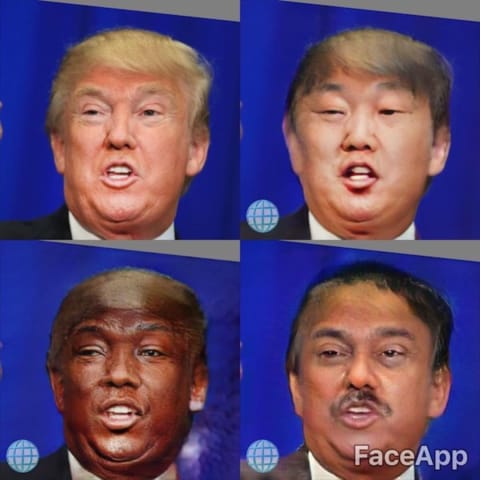

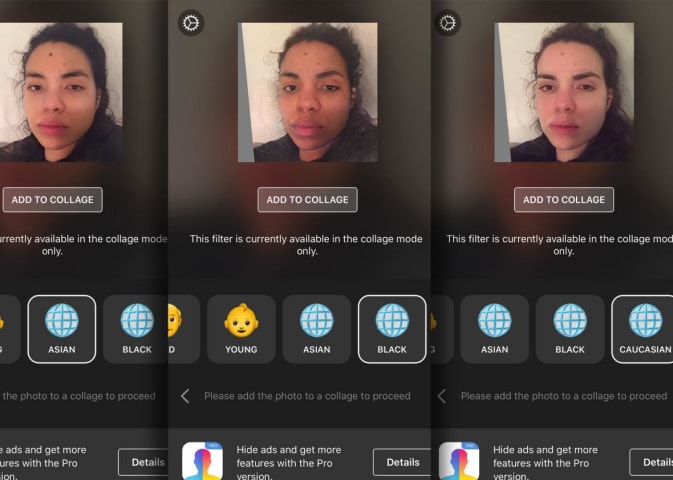

On Wednesday morning, the photo-editing app FaceApp released new photo filters that change the ethnic appearance of your face.

The app first became popular earlier in 2017 due to its ability to transform people into elderly versions of them…

FaceApp’s CEO says: “The new controversial filters will be removed in the next few hours.”

You might remember FaceApp — a selfie-editing app that transforms users’ pictures by making them look older, younger, or giving them an artificial sm…

Remember FaceApp, the smartphone app that, among other things, allows you to add a filter to your face to make you look younger or older? The one that somehow fascinates every single person you know and encourages them to post photos on the…

A viral app that added Asian, Black, Caucasian and Indian filters to people's selfies has removed them after being accused of racism.

The update which launched yesterday was met with backlash - with many people criticising it for propagatin…

AI-powered program allowed users to edit selfies to fit into ‘Caucasian, Asian, Indian or Black’ categories causing outrage and immediate U-turn

Popular AI-powered selfie program FaceApp was forced to pull new filters that allowed users to …

FaceApp boss Yaroslav Goncharov has defended the new filters. "The ethnicity change filters have been designed to be equal in all aspects."

FaceApp, an app that uses neural networks to transform your selfies in some rather eerie ways, has …

FaceApp’s racial filters lived only briefly, but they should never have happened in the first place.

FaceApp

For a brief moment on Wednesday, FaceApp—the app that went viral in April for taking a photo of someone’s face and making them look…

Popular photo filter application, FaceApp, which allows users to take selfies and then add fun filters to them to change their appearance by adding a range of photo filters, faced major backlash after its developers on August 9 rolled out a…

This really isn't that hard, people. Just last year, Snapchat apologised for adding an offensive Bob Marley selfie filter to its stable. Now, FaceApp — the silly photo editor you probably downloaded in March and totally forgot about — has o…

Remember that viral face-filtering app, FaceApp, that was accused of being racist after featuring a filter called "Hot" that actually lightened people's skin](https://www.allure.com/story/faceapp-accused-of-racism-with-skin-whitening-featur…

FaceApp has removed a number of racially themed photo filters after being accused of racism.

The app, which uses artificial intelligence to edit pictures, this week launched a number of “ethnicity change filters”.

They claimed to show users…

FaceApp

In recent months, FaceApp has been a fun app you’ve probably seen on your social media feeds, letting you and your friends morph faces to look older, younger, or smiling when you frown. You can even see what you’d look like if you p…

"New filters: Asian, Black, Caucasian, Indian." WHAT. Update: Faceapp has removed the four new filters.

Remember FaceApp? Back in April, it was a suddenly popular Internet Thing(™) that would make your face look like you were a baby, an old…

Variants

Similar Incidents

Images of Black People Labeled as Gorillas

Biased Google Image Results

TayBot
