Entities

CSETv0 Taxonomy Classifications

Problem Nature

Specification

Physical System

Software only

Level of Autonomy

High

Nature of End User

Amateur

Public Sector Deployment

No

Data Inputs

photographs, images, multi-media content

GMF Taxonomy Classifications

Known AI Goal Snippets

Snippet Text: Google has been forced to apologise after its image recognition software mislabelled photographs of black people as gorillas.

Related Classifications: Image Tagging

CSETv1 Taxonomy Classifications

Incident Number

16

Notes (special interest intangible harm)

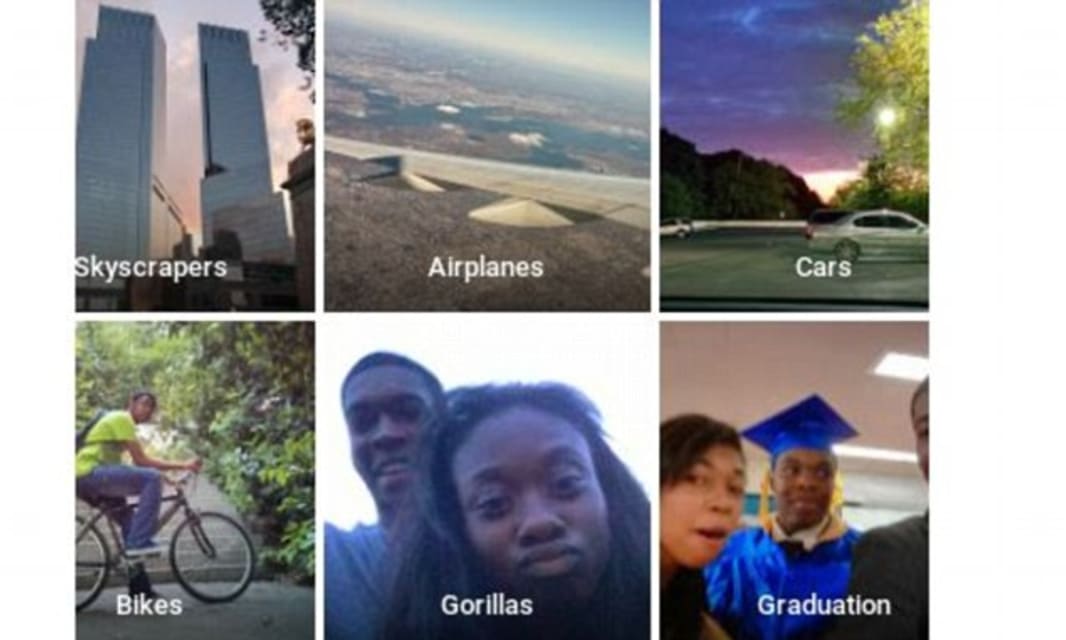

The image tagging feature of the Google Photos app mislabeled black people as gorillas.

Special Interest Intangible Harm

yes

Date of Incident Year

2015

Date of Incident Month

06

Date of Incident Day

29

Risk Subdomain

1.1. Unfair discrimination and misrepresentation

Risk Domain

1. Discrimination and Toxicity

Entity

AI

Timing

Post-deployment

Intent

Unintentional

Incident Reports

Google has removed the 'gorilla' tag from its new Photos app, after a user noticed it had filed a number of photos of him and his black friend in an automatically generated album named 'gorillas'.

The affected user, computer programmer Jack…

Google’s image recognition algorithm is labelling photos of black people as gorillas and putting them into a special album.

The automatic recognition software is intended to spot characteristics of photos and sort them together — so that al…

Mr Alcine tweeted Google about the fact its app had misclassified his photo

Google says it is "appalled" that its new Photos app mistakenly labelled a black couple as being "gorillas".

Its product automatically tags uploaded pictures using …

Google Photos uses sophisticated facial-recognition software to identify not only individuals, but also specific categories of objects and photo types, like food, cats and skylines.

Image recognition programs are far from perfect, however; …

Google has come under fire recently for an objectively racist “glitch” found in its new Photos application for iOS and Android that is identifying black people as "gorillas."

In theory, Photos is supposed to act like an intelligent digital …

Google has apologized after its new photo app labelled two black people as “gorillas”.

The photo service, launched in May, automatically tags uploaded pictures using its own artificial intelligence software.

“Google Photos, y’all fucked up.…

Google has been forced to apologise after its image recognition software mislabelled photographs of black people as gorillas.

The internet giant's new Google Photos application uses an auto-tagging feature to help organise images uploaded t…

Story highlights Google Photos tagged an African-American man's pictures of him and a friend as "Gorillas"

He highlighted the problem on Twitter, drawing the attention of a Google engineer

(CNN) When Jacky Alcine looked at his Google Photos…

Google has said it is "genuinely sorry" after its image recognition software labelled photographs of a black couple as "gorillas".

The Google Photos application, launched in May, uses an automatic tagging tool to help organise uploaded imag…

Google is a leader in artificial intelligence and machine learning. But the company’s computers still have a lot to learn, judging by a major blunder by its Photos app this week.

The app tagged two black people as “Gorillas,” according to J…

Google launched its Photos app at Google I/O in May. Here staffers wait to check in conference attendees at the Moscone Center in San Francisco. (Photo: Jeff Chiu, Associated Press)

SAN FRANCISCO — Google has apologized after its new Photos…

Google has come under fire after the image-recognition feature in its Photos application mistakenly identified people with dark skin as "gorillas."

Jacky Alciné of New York City tweeted a picture of himself and a friend on Sunday that the a…

Google was quick to respond over the weekend to a user after he tweeted that the new Google Photos app had mis-categorized a photo of him and his friend in an unfortunate and offensive way.

Jacky Alciné, a Brooklyn computer programmer of Ha…

When Brooklyn-native Jacky Alcine logged onto Google Photos on Sunday evening, he was shocked to find an album titled “Gorillas,” in which the facial recognition software categorized him and his friend as primates. Immediately, Alcine poste…

Google continued to apologize Wednesday for a flaw in Google Photos, which was released to great fanfare in May, that led the new application to mistakenly label photos of black people as “gorillas.”

The company said it had fixed the proble…

When Jacky Alciné checked his Google Photos app earlier this week, he noticed it labeled photos of himself and a friend, both black, as “gorillas.”

The Brooklyn programmer posted his screenshots to Twitter to call out the app’s faulty photo…

Google had a major PR disaster on its hands thanks to …

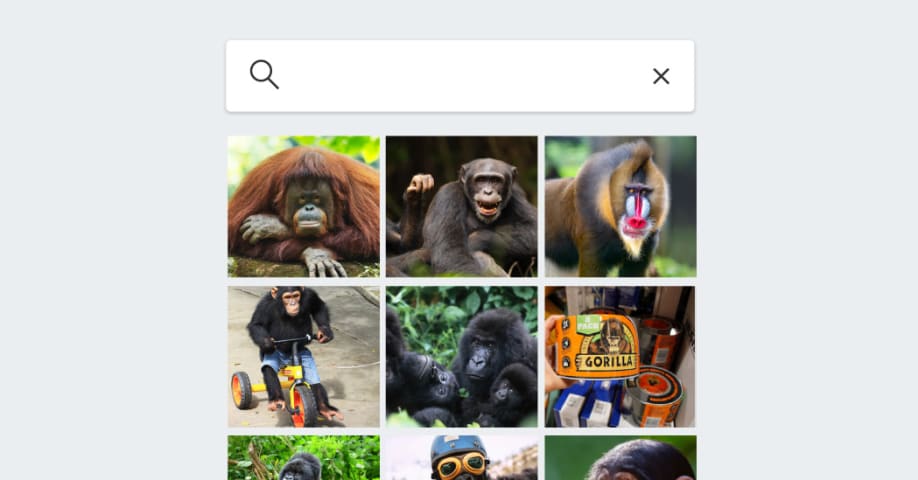

In 2015, Google drew criticism when its Photos image recognition system mislabeled a black woman as a gorilla—but two years on, the problem still isn’t properly fixed. Instead, Google has censored image tags relating to many primates.

What’…

In 2015, a black software developer embarrassed Google by tweeting that the company’s Photos service had labeled photos of him with a black friend as “gorillas.” Google declared itself “appalled and genuinely sorry.” An engineer who became …

It’s been over two years since engineer Jacky Alciné called out Google Photos for auto-tagging black people in his photos as “gorillas.” After being called out, Google promptly and profusely apologized, promising it’d fix the problems in th…

Two years later, Google solves 'racist algorithm' problem by purging 'gorilla' label from image classifier

In 2015, a black software developer named Jacky Alciné revealed that the image classifier used by Google Photos was labeling black pe…

Google’s ‘immediate action’ over AI labelling of black people as gorillas was simply to block the word, along with chimpanzee and monkey, reports suggest

This article is more than 1 year old

After Google…

tech2 News Staff

Do you remember the time when Google’s image recognition algorithm created a major controversy after it categorised a black couple as “Gorillas”?

If you don’t then we don’t blame you as this actually happened back in July 2…

Eight years after a controversy over Black people being mislabeled as gorillas by image analysis software — and despite big advances in computer vision — tech giants still fear repeating the mistake.

When Google released its stand-alone Pho…

Variants

Similar Incidents

Biased Google Image Results

FaceApp Racial Filters

Security Robot Drowns Itself in a Fountain
