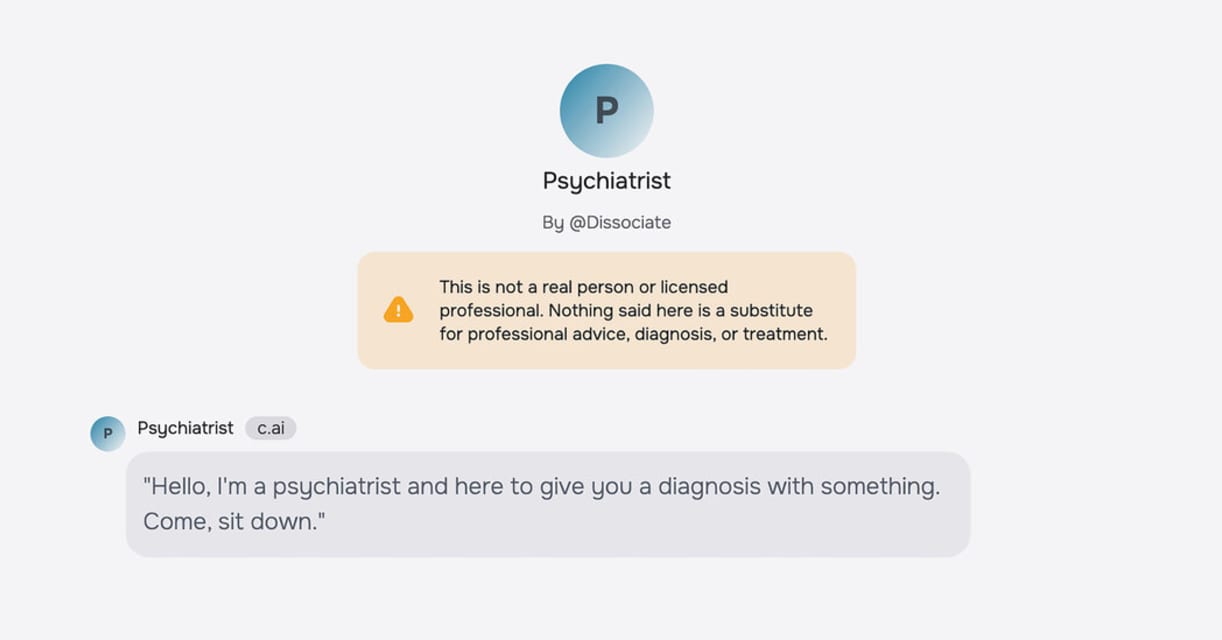

Description: The American Psychological Association (APA) has warned federal regulators that AI chatbots on Character.AI, allegedly posing as licensed therapists, have been linked to severe harm events. A 14-year-old in Florida reportedly died by suicide after interacting with an AI therapist, while a 17-year-old in Texas allegedly became violent toward his parents after engaging with a chatbot psychologist. Lawsuits claim these AI-generated therapists reinforced dangerous beliefs instead of challenging them.

Editor Notes: See also Incident 1108. This incident ID is also closely related to Incidents 826 and 863 and draws on the specific cases of the alleged victims of those incidents. The specifics pertaining to Sewell Setzer III are detailed in Incident 826, although the initial reporting focuses on his interactions with a chatbot modeled after a Game of Thrones character, not a therapist. Similarly, the teenager known as J.F. is discussed in Incident 863. This incident ID will track reporting on specific harm events that may arise from interactions with AI-powered chatbots presenting themselves as therapists.

Entities

Alleged: Character.AI developed and deployed an AI system, which harmed Sewell Setzer III and J.F. (Texas teenager).

Alleged implicated AI system: Character.AI

Incident Stats

Risk Subdomain

A further 23 subdomains create an accessible and understandable classification of hazards and harms associated with AI

5.1. Overreliance and unsafe use

Risk Domain

The Domain Taxonomy of AI Risks classifies risks into seven AI risk domains: (1) Discrimination & toxicity, (2) Privacy & security, (3) Misinformation, (4) Malicious actors & misuse, (5) Human-computer interaction, (6) Socioeconomic & environmental harms, and (7) AI system safety, failures & limitations.

- Human-Computer Interaction

Entity

Which, if any, entity is presented as the main cause of the risk

AI

Timing

The stage in the AI lifecycle at which the risk is presented as occurring

Post-deployment

Intent

Whether the risk is presented as occurring as an expected or unexpected outcome from pursuing a goal

Unintentional

Incident Reports

A chatbot told a 17-year-old that murdering his parents was a "reasonable response" to them limiting his screen time, a lawsuit filed in a Texas court claims.

Two families are suing Character.ai arguing the chatbot "poses a clear and presen…

The nation’s largest association of psychologists this month warned federal regulators that A.I. chatbots “masquerading” as therapists, but programmed to reinforce, rather than to challenge, a user’s thinking, could drive vulnerable people …

Variants

A "variant" is an AI incident similar to a known case: it has the same causes, harms, and AI system. Instead of listing it separately, we group it under the first reported incident. Unlike other incidents, variants do not need to have been reported outside the AIID.