Risk Subdomain

4.1. Disinformation, surveillance, and influence at scale

Risk Domain

Malicious Actors & Misuse

Entity

Human

Timing

Post-deployment

Intent

Intentional

Incident Reports

Reports Timeline

A prominent New Hampshire Democrat wants the makers of a robocall mimicking the voice of Joe Biden and encouraging Democrats not to vote in the primary on Tuesday "prosecuted to the fullest extent" for attempting "an attack on democracy" it…

MANCHESTER, N.H. --- The New Hampshire attorney general's office says it is investigating what appears to be an "unlawful attempt" at voter suppression after NBC News reported on a robocall impersonating President Joe Biden that told recipi…

Jan 23 (Reuters) - A fake robocall urging Democrats not to vote in New Hampshire's presidential primary on Tuesday is unlikely to affect the results, the secretary of state said as voters cast ballots in the northern New England state.

"I d…

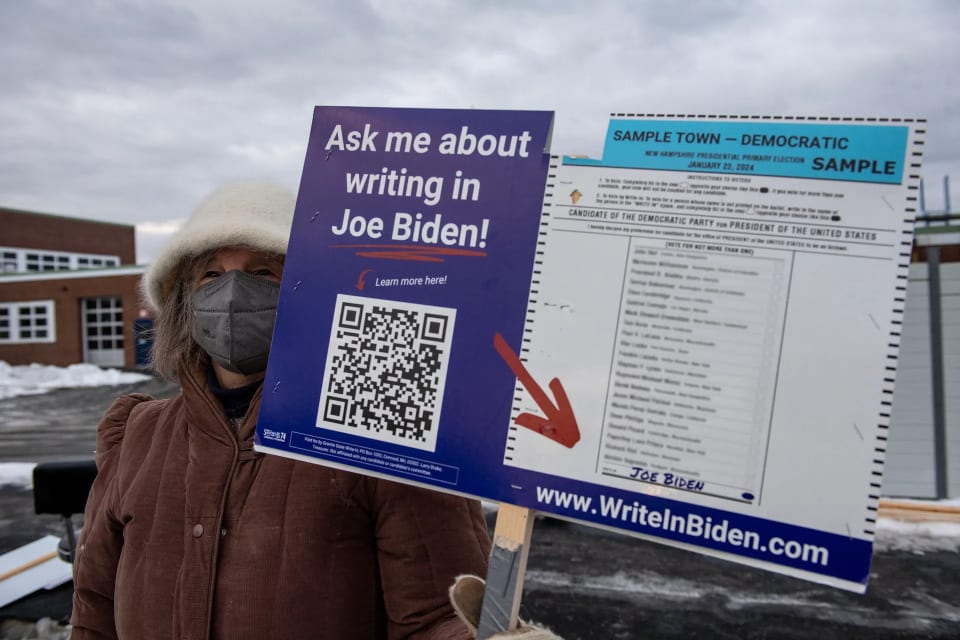

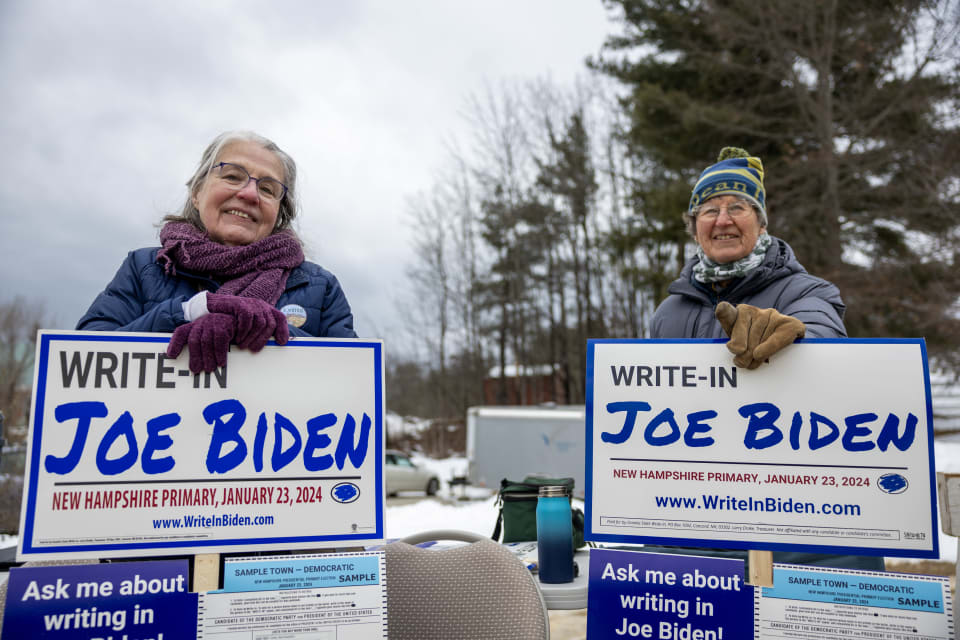

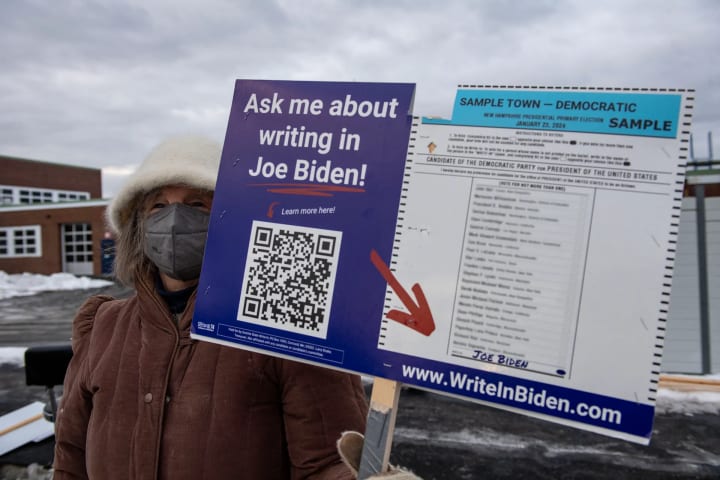

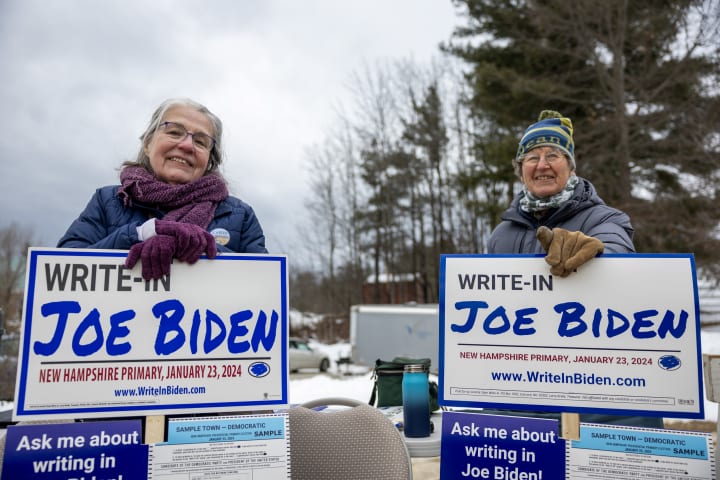

Late in the afternoon on Sunday, Jan. 21, Kathy Sullivan received a text from a family member who said they had received a call from Sullivan or her husband and were following up.

The treasurer of a super PAC running a write-in campaign for…

Robocalls featuring the AI-generated voice of President Joe Biden made to New Hampshire voters ahead of the state’s primary last month allegedly originated with a shadowy Texas-based telecommunications firm called Life Corporation, the New …

NEW ORLEANS — A Democratic consultant who worked for a rival presidential campaign paid a New Orleans magician to use artificial intelligence to impersonate President Joe Biden for a robocall that is now at the center of a multistate law en…

CONCORD, NH — A New Orleans magician was hired to use artificial intelligence to impersonate President Joe Biden for a robocall urging New Hampshire Democrats to hold their votes in the presidential primary, according to an exclusive NBC Ne…

A New Orleans magician is claiming he was paid by a political consultant to create an AI-generated Joe Biden voice that was used in voter suppression robocalls to New Hampshire voters.

Paul Carpenter told NBC News that he was hired by Steve…

Steve Kramer, a veteran political consultant working for a rival candidate, acknowledged Sunday that he commissioned the robocall that impersonated President Joe Biden using artificial intelligence, confirming an NBC News report that he was…

The news broke on the eve of New Hampshire's presidential primary: Someone, somewhere, had used artificial intelligence to mimic the voice of a leading candidate to suppress voter turnout among his supporters.

Thousands of New Hampshire vot…

Three New Hampshire voters and the nonprofit League of Women Voters filed a civil suit Thursday against a number of individuals and companies allegedly behind a January robocall featuring the AI-generated voice of President Joe Biden that …

A voting advocacy group is suing a political consultant and companies behind an AI-generated robocall of President Biden that in January urged New Hampshire voters not to participate in the state's presidential primary.

The League of Women …

Steve Kramer, a Democratic operative who admitted to commissioning an artificial intelligence-generated robocall of President Biden that instructed New Hampshire voters to not vote early this year, is now facing criminal charges and federal…

The Federal Communications Commission is pressing telecommunications carriers for more transparency around efforts to curb the use of voice-cloned robocalls that impersonate political candidates over their networks.

Chairwoman Jessica Rosen…

The FBI said Thursday that malicious actors have been impersonating senior U.S. government officials in a text and voice messaging campaign, using phishing texts and AI-generated audio to trick other government officials into giving up acce…