Entities

Incident Stats

Risk Subdomain

7.3. Lack of capability or robustness

Risk Domain

- AI system safety, failures, and limitations

Entity

AI

Timing

Post-deployment

Intent

Unintentional

Incident Reports

Reports Timeline

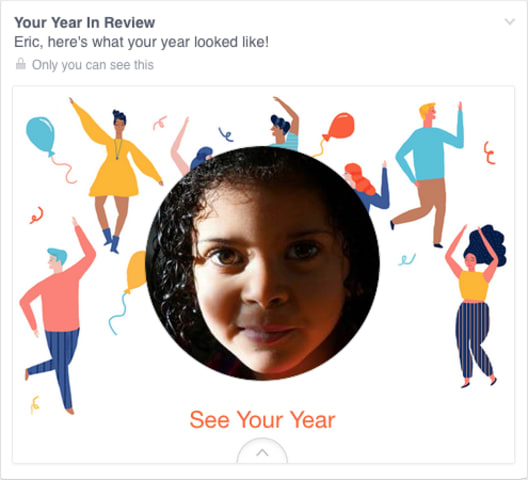

I didn’t go looking for grief this afternoon, but it found me anyway, and I have designers and programmers to thank for it. In this case, the designers and programmers are somewhere at Facebook.

I know they’re probably pretty proud of the w…

Facebook has apologised after learning, yet again, that not everything can be done algorithmically. Some things, it seems, need the human touch.

The company’s latest blunder stems from a seemingly innocuous feature it rolls out to its users…

Facebook's "Year in Review" feature is a delight to some and a painful reminder for others of events in 2014 they'd rather not be reminded of as the year comes to a close.

Web designer Eric Meyer's daughter Rebecca died in 2014. Last week, …

Variants

Similar Incidents
