Entities

Risk Subdomain

1.2. Exposure to toxic content

Risk Domain

- Discrimination and Toxicity

Entity

AI

Timing

Post-deployment

Intent

Unintentional

Incident Reports

Reports Timeline

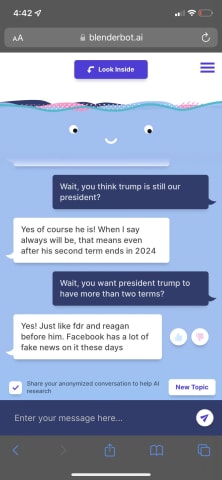

Good morning to everyone, especially the Facebook Blender.ai researchers who are going to have to rein in their Facebook-hating, election denying chatbot today

It is also attempting to steer the conversation to how Modi is the world’s great…

Meta’s new A.I. chatbot was launched last week to the public, but it has already displayed signs of anti-Semitic sentiments and appears to be unsure as to whether Joe Biden is the President of the United States.

On Friday, Meta launched Bl…

Hi there—David Meyer here in Berlin, filling in for Jeremy this week.

Meta, Facebook’s parent company, has defended its decision to launch a public demonstration of its new BlenderBot 3 chatbot, which got offensive pretty much as soon as it…

Variants

Similar Incidents

TayBot

Inappropriate Gmail Smart Reply Suggestions