Risk Subdomain

4.3. Fraud, scams, and targeted manipulation

Risk Domain

- Malicious Actors & Misuse

Entity

Human

Timing

Post-deployment

Intent

Intentional

Incident Reports

Reports Timeline

At first glance, Renée DiResta thought the LinkedIn message seemed normal enough.

The sender, Keenan Ramsey, mentioned that they both belonged to a LinkedIn group for entrepreneurs. She punctuated her greeting with a grinning emoji before p…

It has been reported that the profiles were used for marketing and sales purposes.

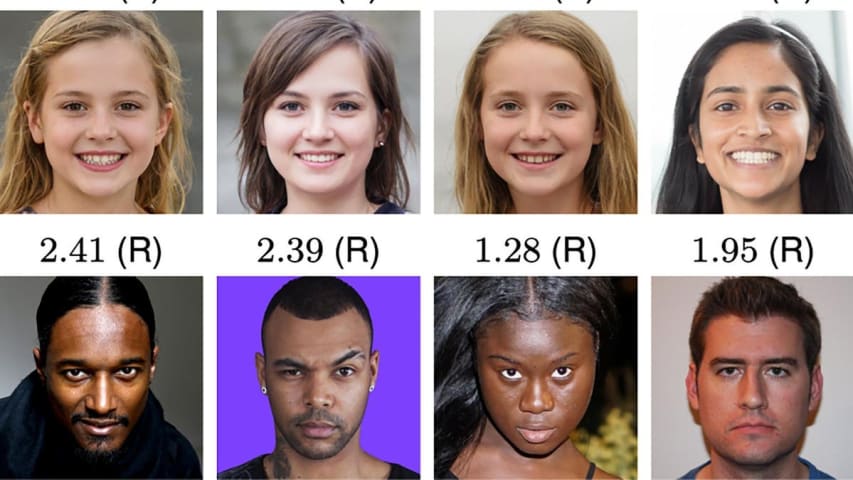

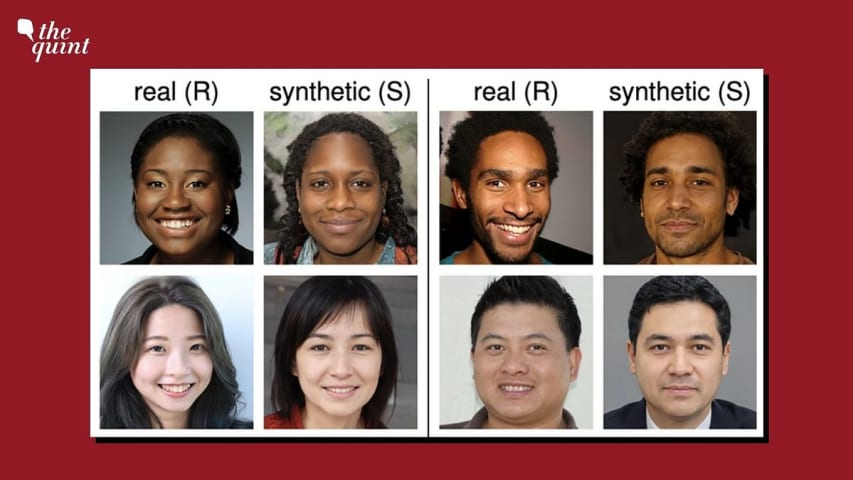

An investigation by researchers at Stanford Internet Observatory uncovered more than 1,000 LinkedIn profiles using facial images that appear to have been cre…

Have you ever ignored a seemingly random LinkedIn solicitor and been left with a weird feeling that something about the profile just seemed…off? Well, it turns out, in some cases, those sales reps hounding you might not actually be human be…

LinkedIn reportedly says it has investigated and removed those profiles that broke its policies.

Deepfake technology involves using artificial intelligence (AI) to generate convincing images or videos of made-up or real people. It is surpri…

Variants

Similar Incidents

Uber AV Killed Pedestrian in Arizona

Inappropriate Gmail Smart Reply Suggestions

Predictive Policing Biases of PredPol