Description: Facebook’s hate-speech detection algorithms were found by company researchers to have under-reported less common but more harmful content that was more often experienced by minority groups such as Black, Muslim, LGBTQ, and Jewish users.

Entities

Alleged: Facebook developed and deployed an AI system, which harmed Facebook users of minority groups and Facebook users.

Risk Subdomain

A further 23 subdomains create an accessible and understandable classification of hazards and harms associated with AI

1.2. Exposure to toxic content

Risk Domain

The Domain Taxonomy of AI Risks classifies risks into seven AI risk domains: (1) Discrimination & toxicity, (2) Privacy & security, (3) Misinformation, (4) Malicious actors & misuse, (5) Human-computer interaction, (6) Socioeconomic & environmental harms, and (7) AI system safety, failures & limitations.

- Discrimination and Toxicity

Entity

Which, if any, entity is presented as the main cause of the risk

Human

Timing

The stage in the AI lifecycle at which the risk is presented as occurring

Post-deployment

Intent

Whether the risk is presented as occurring as an expected or unexpected outcome from pursuing a goal

Intentional

Incident Reports

Reports Timeline

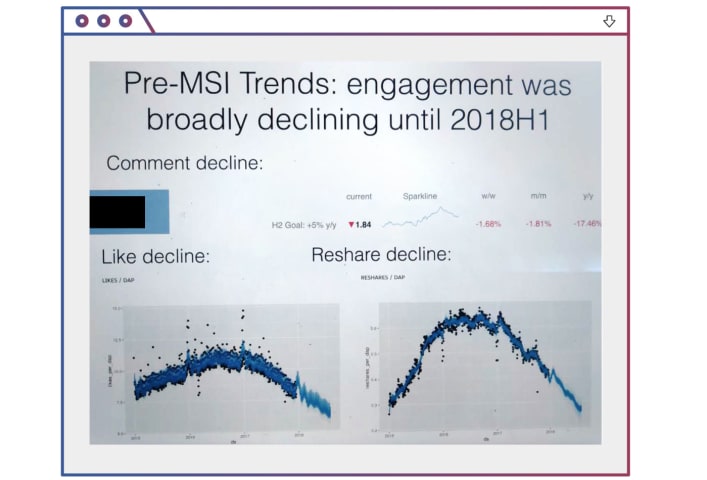

In public, Facebook seems to claim that it removes more than 90 percent of hate speech on its platform, but in private internal communications the company says the figure is only an atrocious 3 to 5 percent. Facebook wants us to believe tha…

Last year, researchers at Facebook showed executives an example of the kind of hate speech circulating on the social network: an actual post featuring an image of four female Democratic lawmakers known collectively as “The Squad.”

The poste…

Variants

A "variant" is an AI incident similar to a known case—it has the same causes, harms, and AI system. Instead of listing it separately, we group it under the first reported incident. Unlike other incidents, variants do not need to have been reported outside the AIID. Learn more from the research paper.
