Entities

CSETv1 Taxonomy Classifications

Incident Number

103

Special Interest Intangible Harm

yes

Notes (AI special interest intangible harm)

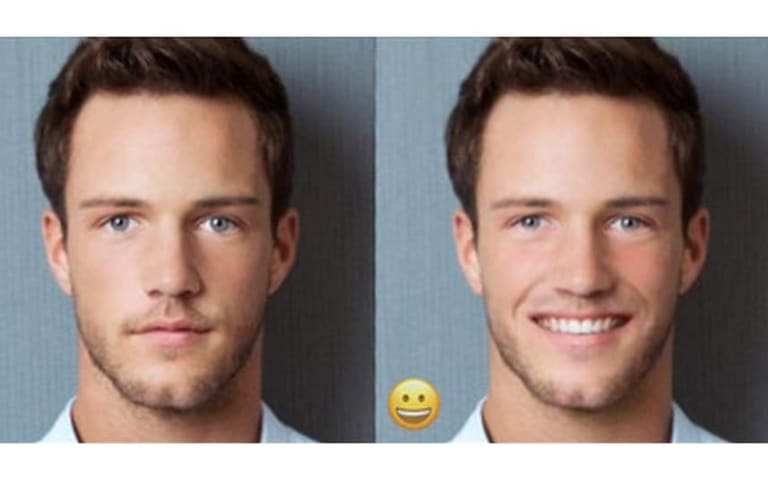

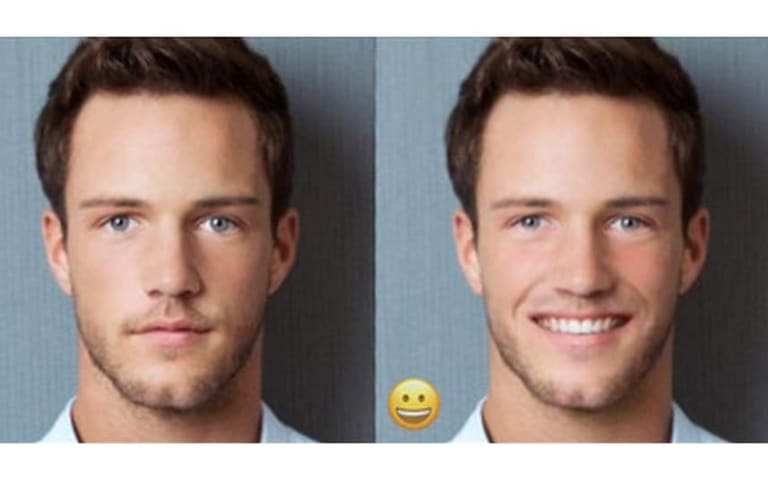

The cropping neural network would crop the preview image in a way that focused more on individuals who had lighter complexions, were younger, were female, or had no disabilities.

Date of Incident Year

2020

GMF Taxonomy Classifications

Known AI Goal Snippets

(Snippet Text: Twitter’s algorithm for automatically cropping images attached to tweets often doesn’t focus on the important content in them., Related Classifications: Image Cropping)

Risk Subdomain

1.1. Unfair discrimination and misrepresentation

Risk Domain

Discrimination and Toxicity

Entity

AI

Timing

Post-deployment

Intent

Unintentional

Incident Reports

Reports Timeline

Twitter’s algorithm for automatically cropping images attached to tweets often doesn’t focus on the important content in them. A bother, for sure, but it seems like a minor one on the surface. However, over the weekend, researchers found th…

A study of 10,000 images found bias in what the system chooses to highlight. Twitter has stopped using it on mobile, and will consider ditching it on the web.

Last fall, Canadian student Colin Madland noticed that Twitter’s automatic croppi…

In October 2020, we heard feedback from people on Twitter that our image cropping algorithm didn’t serve all people equitably. As part of our commitment to address this issue, we also shared that we'd analyze our model again for bias. Over …

Twitter has laid out plans for a bug bounty competition with a difference. This time around, instead of paying researchers who uncover security issues, Twitter will reward those who find as-yet undiscovered examples of bias in its image-cro…

Twitter's first bounty program for AI bias has wrapped up, and there are already some glaring issues the company wants to address. CNET reports that grad student Bogdan Kulynych has discovered that photo beauty filters skew the Twitter sali…

Variants

Similar Incidents

Images of Black People Labeled as Gorillas

FaceApp Racial Filters

TayBot
