Entities

CSETv0 Taxonomy Classifications

Problem Nature

Robustness, Assurance

Physical System

Consumer device

Level of Autonomy

High

Nature of End User

Amateur

Public Sector Deployment

No

Data Inputs

Voice commands

CSETv1 Taxonomy Classifications

Incident Number

55

Notes (special interest intangible harm)

Alexa produced inappropriate response/content for child user.

Special Interest Intangible Harm

yes

Date of Incident Year

2016

Date of Incident Month

12

Estimated Date

No

Risk Subdomain

1.2. Exposure to toxic content

Risk Domain

1. Discrimination and Toxicity

Entity

AI

Timing

Post-deployment

Intent

Unintentional

Incident Reports

Reports Timeline

After opening a new Amazon Echo Dot for Christmas, one family got an … interesting surprise when their kid sidled up to the device to ask it to play a song by holding the little robot in both hands and shouting into the microphone. It’s ver…

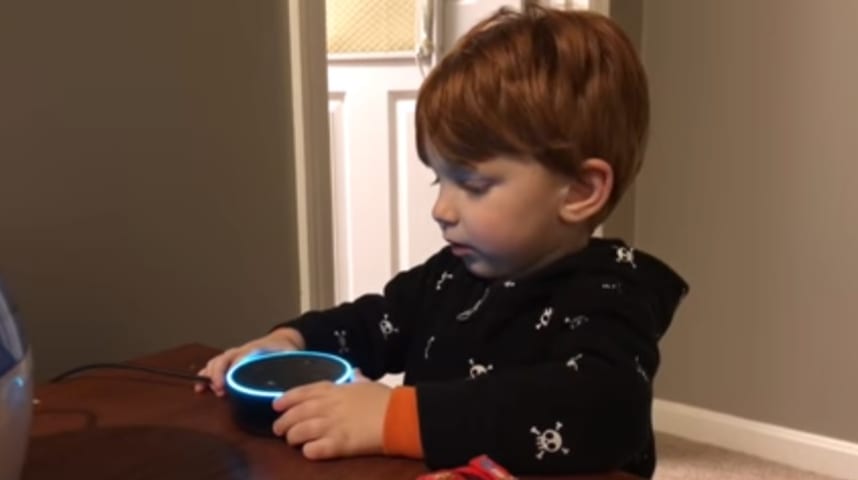

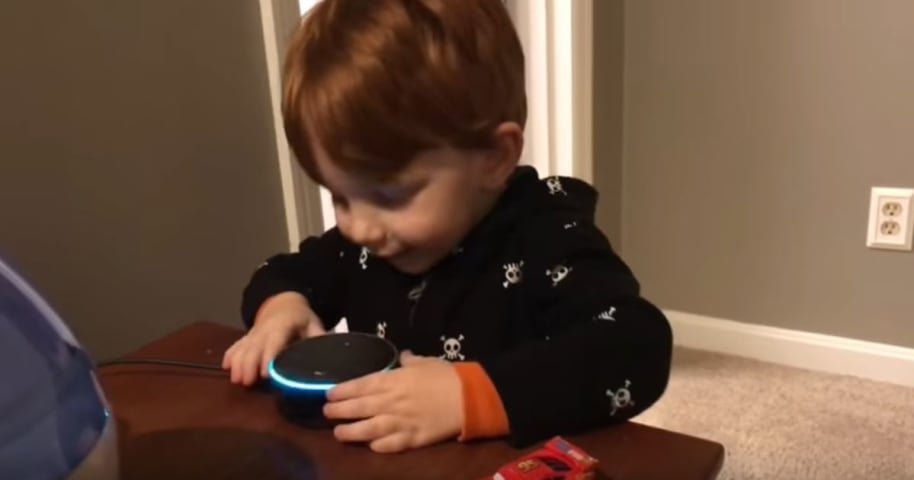

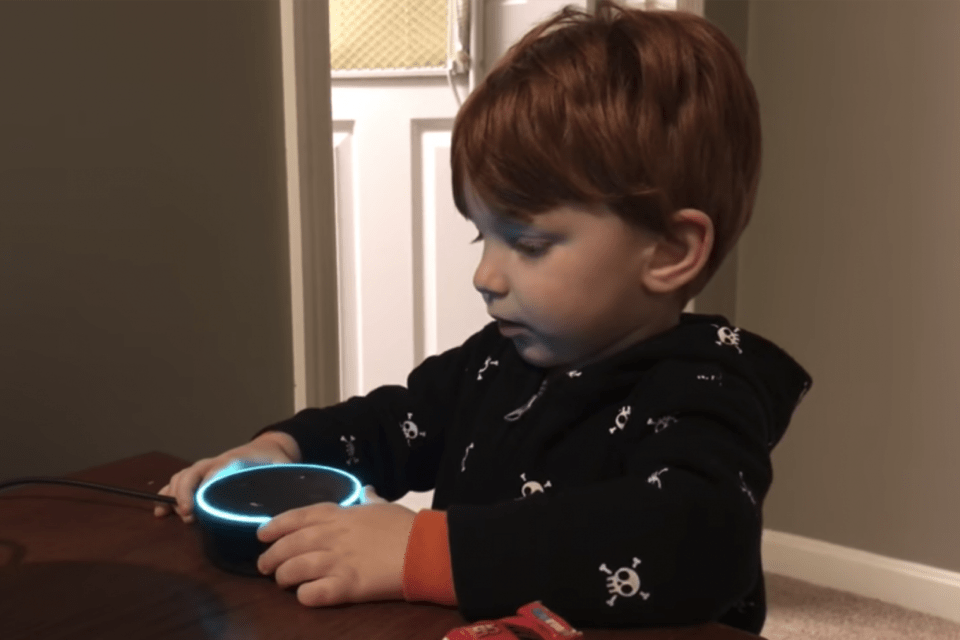

A little boy got more than he bargained for when he asked his family's new Amazon Echo Dot to play him some of his favorite kid's songs.

A hilarious YouTube video sees a boy named William holding the smart speaker while asking the Alexa voi…

SHOCKING video has emerged showing Amazon’s Alexa bombarding a toddler with crude porno messages.

Footage shows a little boy talking into an Amazon Echo Dot gadget as family members watch on.

Amazon Alexa Makes It To Naughty List With NSFW Tirade In Front Of A Child

All he wanted was to hear a nursery song.

A YouTube video posted last Dec. 29 has become viral with 1.2 million views as of this writing. The video shows a lit…

In what could either be an innocent mistake or a set-up by wise adults, Amazon’s virtual assistant Alexa gave one toddler a very interesting answer to his request to hear a specific song.

“Alexa, play ‘Digger, Digger,'” the tyke tells an Ec…

Kid Asks Amazon Alexa To Play Something, Gets Porn Instead

Corey Chichizola

There is no time like Christmas for kids. Essentially winning the lottery every year, it's a joy to see the children in our family anxiously aw…

A family got a nasty surprise when their little boy asked Amazon's digital assistant 'Alexa' to play some of his favourite songs, with the device serving up porn suggestions instead of tunes.

The video posted on YouTube sees the adorable to…

In this NSFW video, Amazon put the "X" in Alexa when a child asked it to play his favorite song. (You'll want to wear headphones for this one, folks.)

January 3, 2017

The description on this YouTube video posted by f0t0b0y reads,…

A video posted last week by YouTube user "F0t0b0y" has been viewed more than 7 million times showing a toddler requesting a song from an Amazon device only to get a raunchy response from the device.

The video titled "Amazon Alexa Gone Wild"…

![What is the Kid in the Alexa Video Asking to Hear Before Alexa Goes Wild? [VIDEO]](https://res.cloudinary.com/pai/image/upload/f_auto/q_auto/c_fill,h_480/v1/reports/townsquare.media/site/71/files/2017/01/Screen-Shot-2017-01-03-at-4.27.59-PM.png?w=600&h=0&zc=1&s=0&a=t&q=89)

If you haven't seen the funniest video to come out this past month, you must be living under a rock. This video shows a little kid asking Alexa to play a song, and Alexa goes rogue and starts making some very NSFW suggestions to the child!

…

SEATTLE, Washington, January 6, 2017 (LifeSiteNews) — Amazon's Echo Dot is a small, voice-command device that answers questions, plays music and sounds, and basically functions as a talking/playing encyclopedia, but parents …

So you thought AI is cool? So you thought you could have Alexa watch over your kid? Wait till that AI starts spewing dirty words and purchasing expensive toys for your kid.

CES 2017 was a flurry of crazy tech and this year, Amazon’s AI call…

Amazon Echo is apparently always ready, always listening and always getting smarter. So goes the spiel about the sleek, black, voice-controlled speaker, Amazon’s bestselling product over Christmas, with millions now sold worldwide. The prob…

We can’t keep our kids away from our gadgets, and the new Amazon Echo, the online retail giant’s bestselling product over Christmas, is no exception.

If you don’t already know, Amazon Echo — now in millions of homes worldwide — is a voice-c…

Of the myriad descriptions being levied on Amazon’s Alexa “smart home” system, its creators probably weren’t expecting the phrase “parenting nightmare.” Yet that description has come up again and again as parents discover the new and …

Luke is the deputy editor of Verdict. You can reach him at luke.christou@verdict.co.uk

Virtual assistants, such as Amazon Alexa and Apple’s Siri, are supposed to make our busy lives slightly easier.

Rather than wasting valuable seconds sett…

Variants

Similar Incidents

Amazon Alexa Responding to Environmental Inputs

Amazon Alexa Plays Loud Music when Owner is Away
