Entities

CSETv1 Taxonomy Classifications

Incident Number

1

Special Interest Intangible Harm

yes

Date of Incident Year

2016

Estimated Date

Yes

Multiple AI Interaction

no

Embedded

no

CSETv0 Taxonomy Classifications

Problem Nature

Unknown/unclear

Physical System

Software only

Nature of End User

Amateur

Public Sector Deployment

No

Data Inputs

Videos

Lives Lost

No

GMF Taxonomy Classifications

Known AI Goal Snippets

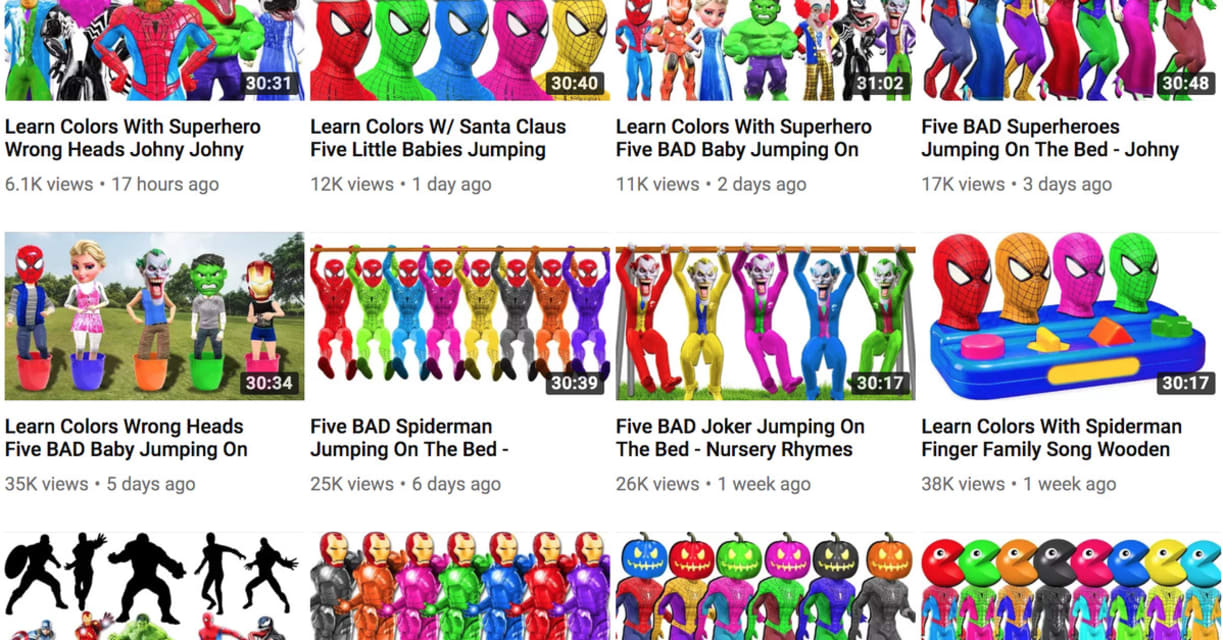

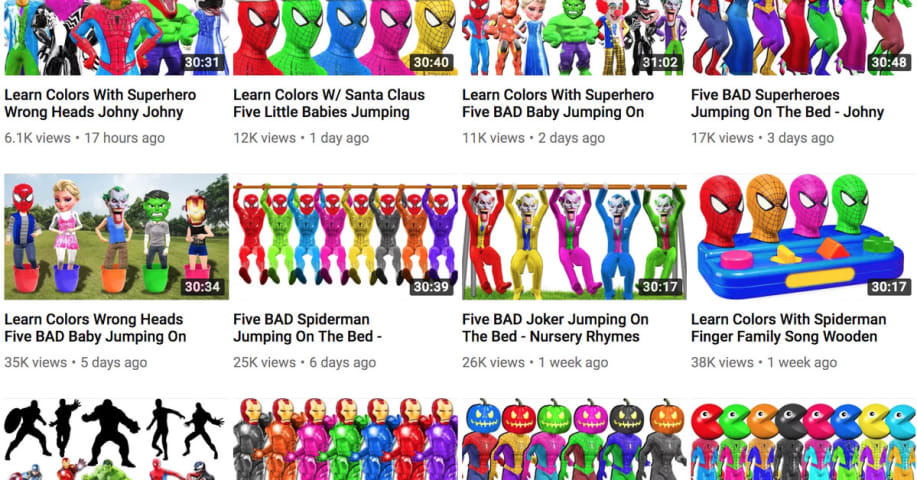

(Snippet Text: An off-brand Paw Patrol video called "Babies Pretend to Die Suicide" features several disturbing scenarios. The YouTube Kids app filters out most - but not all - of the disturbing videos.

Before any video appears in the YouTube Kids app, it's filtered by algorithms that are supposed to identify appropriate children's content. YouTube also has a team of human moderators that review any videos flagged in the main YouTube app by volunteer Contributors (users who flag inappropriate content) or by systems that identify recognizable children's characters in the questionable video. Many of those views came from YouTube's "up next" and "recommended" video section that appears while watching any video. YouTube's algorithms attempt to find videos that you may want to watch based on the video you chose to watch first. If you don't pick another video to watch after the current video ends, the "up next" video will automatically play. Related Classifications: Content Recommendation, Content Search)

Risk Subdomain

1.2. Exposure to toxic content

Risk Domain

1. Discrimination and Toxicity

Entity

AI

Timing

Post-deployment

Intent

Unintentional

Incident Reports

Reports Timeline

Child and consumer advocacy groups complained to the Federal Trade Commission Tuesday that Google’s new YouTube Kids app contains “inappropriate content,” including explicit sexual language and jokes about pedophilia.

Google launched the ap…

Videos filled with profanity, sexually explicit material, alcohol, smoking, and drug references - this is what parents are finding on Google’s YouTube Kids app. That’s right - its kids app. Now, parents across the country are calling on Go…

Update, Nov. 7, 2017: TODAY Parents is resharing this story from 2016 because a new series of inappropriate videos have cropped up on YouTube Kids and are making headlines. While the channel names and characters used may be different, the p…

Thousands of videos on YouTube look like versions of popular cartoons but contain disturbing and inappropriate content not suitable for children.

If you're not paying much attention, it might loo…

An off-brand Paw Patrol video called "Babies Pretend to Die Suicide" features several disturbing scenarios. YouTube

Parents who let their kids watch YouTube unattended might want to pay closer attention to what they're viewing.

YouTube is s…

Earlier this week, a report in The New York Times and a blog post on Medium drew a lot of attention to a world of strange and sometimes disturbing videos on YouTube aimed at young children. The genre, which we reported on in February of thi…

Recent news stories and blog posts highlighted the underbelly of YouTube Kids, Google's children-friendly version of the wide world of YouTube. While all content on YouTube Kids is meant to be suitable for children under the age of 13, some…

In the last few weeks, the world has learned through a number of reports that YouTube is plagued by problems with children’s content. The company has ramped up moderation in recent weeks to fight the wave of inappropriate content, but thi…

Google-owned YouTube has apologised again after more disturbing videos surfaced on its YouTube Kids app.

Investigators found several unsuitable videos including one of a burning aeroplane from the cartoon Paw Patrol and footage explaining h…

Children were able to watch David Icke's conspiracy videos through YouTube Kids. Flickr/Tyler Merbler

YouTube's app specifically for children is meant to filter out adult content and provide a "world of learning and fun," but Business Insid…

Video still of a reproduced version of Minnie Mouse, which appeared on the now-suspended Simple Fun channel Simple Fun / WIRED

YouTube videos using child-oriented search terms are evading the company's attempts to control them. In one carto…

YouTube Kids, which has been criticized for inadvertently recommending disturbing videos to children, said Wednesday that it would introduce several ways for parents to limit what can be watched on the popular app.

Beginning this week, pare…

After multiple reports of inappropriate content on YouTube over the past year, “Good Morning America” wanted to take a closer look at the site. How often do kids end up seeing inappropriate content on the video platform? We talked with a gr…

YouTube is both a massive industry and browsing staple that people use to fill their educational and entertainment needs. According to Business Insider, the website accumulates around 1.8 billion logged-in users per month. With its high tra…

Variants

Similar Incidents

Alexa Plays Pornography Instead of Kids Song

Amazon Censors Gay Books

Amazon Alexa Responding to Environmental Inputs
