Entities

CSETv1 Taxonomy Classifications

Incident Number

34

CSETv0 Taxonomy Classifications

Problem Nature

Robustness, Assurance

Physical System

Consumer device

Level of Autonomy

Medium

Nature of End User

Amateur

Public Sector Deployment

No

Data Inputs

environment audio, Alexa software

GMF Taxonomy Classifications

Known AI Goal Snippets

(Snippet Text: According to one couple on Twitter, their Amazon Echo lit up on Thursday night, not in response to their voice, but a voice the device heard on TV. Related Classifications: AI Voice Assistant)

Risk Subdomain

7.3. Lack of capability or robustness

Risk Domain

7. AI system safety, failures, and limitations

Entity

AI

Timing

Post-deployment

Intent

Unintentional

Incident Reports

Reports Timeline

In June, Amazon made its always-listening personal assistant Echo available to all. It's a neat little device—basically, Siri in a cylinder—but not without its quirks.

According to one couple on Twitter, their Amazon Echo lit up on Thursday…

Amazon Echo owners have been issued a security warning after a number in America automatically ordered dolls houses being discussed on a TV show. The high-tech gadgets have become a recent and welcome addition to hundreds of thousands of UK…

Amazon's Alexa sure is one high-class shopper.

The retail giant's Alexa voice assistant aims to revolutionize the shopping experience, but recently delivered a big surprise to one six-year-old's parents.

Dallas, Tx. resident Megan Neitzel, …

Step away from the Dot, tiny online shopper.

When a 6-year-old girl in Texas managed to get herself a big tin of cookies and a fancy new dollhouse thanks to Amazon’s voice-enabled assistant Alexa in her family’s Echo device, the incident wa…

DALLAS, Texas — It's the Amazon order that's gone viral.

A six-year-old girl's conversation with Amazon's voice-activated Echo Dot ended up with her parents being charged for a dollhouse and four pounds of cookies.

For 6-year-old Brooke Nei…

DALLAS, Texas -- Amazon's voice-activated Echo Dot promises to make life a little easier for users. Alexa can provide weather updates, set alarms and help you shop. For 6-year-old Brooke Neitzel, the device made dollhouse dreams a reality. "A…

Story highlights Amazon Echo Dot's digital assistant delivered when girl asked for a dollhouse and snacks

Family now requires a four-digit code before anything can be ordered via Alexa

(CNN) It was either a late Christmas present or an unfu…

A TV news report in San Diego about the child that accidentally ordered a dollhouse via Amazon’s Alexa inadvertently set off some viewers’ Echo devices, which in turn tried to order dollhouses using Alexa.

The Amazon Echo devices connect to…

Child orders dollhouse, cookies using Alexa

DALLAS (CNN) — It’s the Amazon order that’s gone viral.

A 6-year-old girl’s conversation with Amazon’s voice-activated Echo Dot wound up with her parents being charged for a dollhouse and four pou…

The 6-year-old North Texas girl who made headlines earlier this week after asking Amazon’s Alexa to order her a dollhouse and cookies has turned the incident into something positive.

Brooke Neitzel and her family ate the cookies, but dona…

A San Diego TV station sparked complaints this week – after an on-air report about a girl who ordered a dollhouse via her parents' Amazon Echo caused Echoes in viewers' homes to also attempt to order dollhouses.

Telly station CW-6 said the …

Children ordering (accidentally or otherwise) items from gadgets is nothing new. Major retailers have refunded purchases made by children playing with phones or computers, and with voice-activated devices making their way into homes, it’s a…

Alexa is turning out to be a pretty bad listener.

Streaming songs, ordering pizza, and booking cabs are no-brainers for Alexa, the voice-activated assistant installed on Amazon Echo devices. But Alexa also unfortunately appears to enjoy eng…

It is supposed to make life easier – but owners of the new Amazon Echo have fallen foul of the high-tech gadget's automatic features.

Owners of the device have been warned after a number accidentally ordered dollhouses that were being discussed …

Amazon released the Echo in the UK in September 2016

A US TV station has been inundated with complaints after viewers' voice-commanded Amazon Echo systems "heard" a presenter's remarks about doll houses - and started ordering them.

Using th…

Amazon’s new Echo device is programmed to respond to voice commands whenever it hears the word, ‘Alexa’ – and this can lead to disaster.

A newsreader in San Diego said, ‘Alexa, order me a dollhouse’ on air – while reporting o…

It doesn’t take much to order a dollhouse mansion and four pounds of sugar cookies with an Amazon Echo

In an ironic turn of events, Amazon’s voice assistant, Alexa, is turning out to be quite a terrible listener (or perhaps it has some thin…

Children who have access to their parents' credit card may accidentally or intentionally buy stuff online without permission. That's mostly fine, if not often a cutesy mishap, making for a few laughs and an anecdote some years to …

Amazon Echo is a gift that keeps on giving.

Owners complained that their voice-activated devices set off on an inadvertent shopping spree after a California news program triggered the systems to make erroneous purchases, according to a local r…

Amazon Echo is apparently always ready, always listening and always getting smarter. So goes the spiel about the sleek, black, voice-controlled speaker, Amazon’s bestselling product over Christmas, with millions now sold worldwide. The prob…

Alexa Went On A Dollhouse Shopping Spree After Hearing “Command” From News Anchor On TV

When a news anchor for CW6 News in San Diego reported on a funny incident involving a little girl and an Amazon Echo, little did he know that he wo…

Dallas mom Megan Neitzel’s cautionary tale involving her 6-year-old daughter accidentally ordering pricey gifts through “Alexa,” Amazon’s voice-activated Echo Dot, went viral last week as stories about the incident were shared by news organ…

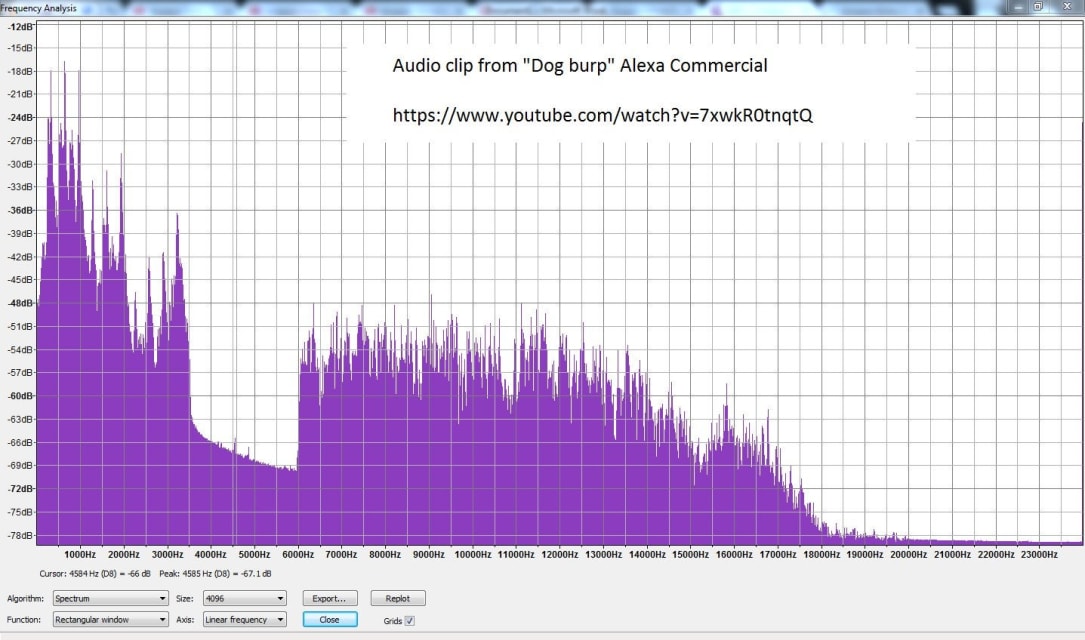

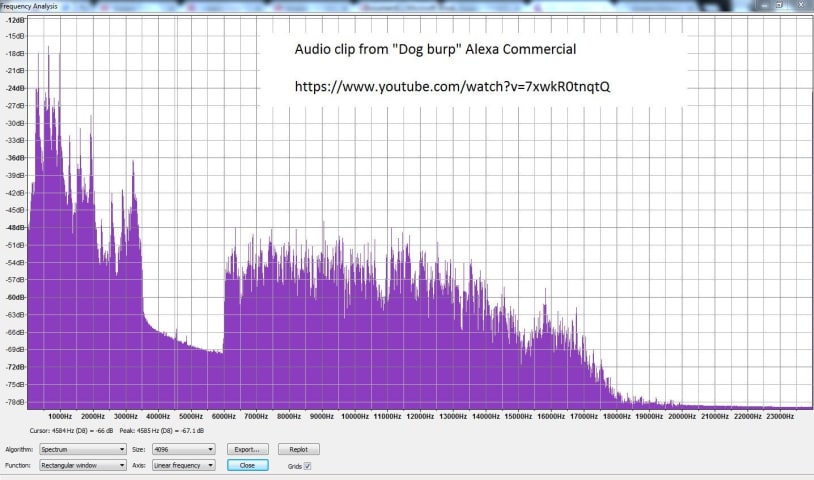

I noticed that the Amazon commercials usually do not trigger the device, or if they do, she only momentarily wakes before ignoring what is said. I did a little research tonight and found that the Echo, while it’s processing the wake word, s…

As you probably know by now, when your TV started blaring Google's Super Bowl ad, Google Homes across the nation promptly responded to the actors saying the "OK Google" command. The same happened to several Amazon Echo devices, which …

Voice assistants such as the Amazon Echo and Google Home are pretty smart, but they’re not yet sharp enough to understand the difference between TV and reality. A Google commercial during yesterday’s Super Bowl prompted Home to play whale n…

Amazon Echo and Google Home can make you feel like you’ve stumbled across a genie: say what you want and, like magic, it appears on your doorstep. That's both cool and convenient — until it isn't.

And as the incident earlier this year where…

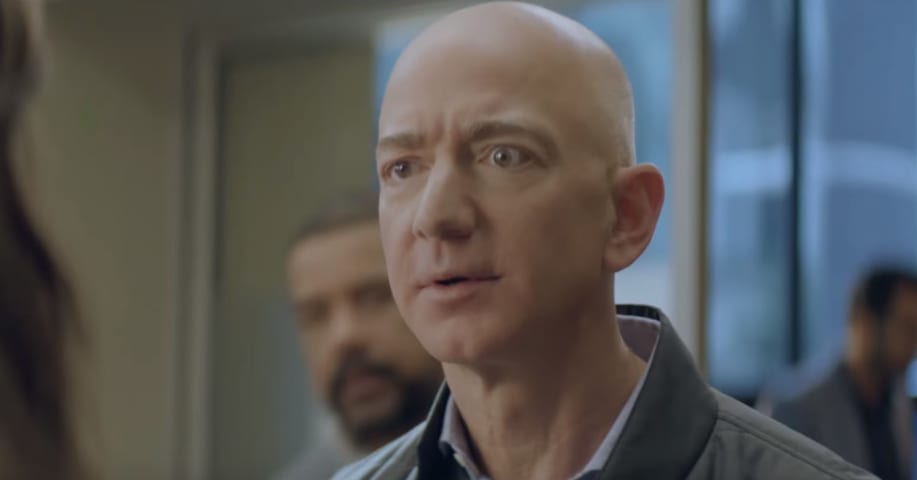

Amazon’s Super Bowl ad will air this Sunday in millions of homes across the country. But no matter how many times Jeff Bezos bewilderedly mutters “Alexa’s lost her voice?,” no Alexa devices should turn on during the commercial, according to…

If you haven't seen it by now, Amazon's Super Bowl LII commercial is fairly clever. Kudos to the company for coming up with a fun way to mash together celebrities and Alexa without it feeling overly cheesy or trying-too-hard. More important…

AMAZON has revealed how it stops your Amazon Echo speaker from waking up when the word "Alexa" is used in an official TV ad.

The company uses "acoustic fingerprints" that help your Echo understand that you're being advertised to, rather tha…

There probably hasn't been a time that you've consciously been thankful for deep neural networks and machine learning, but that's what Amazon is using to ensure that Alexa doesn't respond to the "Alexa" keyword when you don't want it to.

To…

An Amazon Echo owner has tried to get a television advertising campaign for the smart speaker banned after the Alexa virtual assistant attempted to order cat food when it heard its name on an ad.

An Amazon TV ad for the Echo Dot, which can …

The Amazon Echo Dot TV commercial was cleared by the UK's advertising regulator

A television ad for Amazon's Echo Dot smart speaker that caused a viewer's device to try to order cat food has been c…

We have in the past seen instances such as the failure of Microsoft bot Tay, when it developed a tendency to come up with racist remarks. Within 24 hours of its existence and interaction with people, it started sending offensive comments, …

Variants

Similar Incidents
