CSETv1 Taxonomy Classifications

Taxonomy Details

Incident Number

281

Risk Subdomain

1.2. Exposure to toxic content

Risk Domain

- Discrimination and Toxicity

Entity

AI

Timing

Post-deployment

Intent

Unintentional

Incident Reports

Speaking to The Telegraph, a former Tumblr blogger, who asked for anonymity, said she had to stop her own depression and anxiety help blog after she found herself “falling down the rabbit hole of content that triggered negative emotions”.

“…

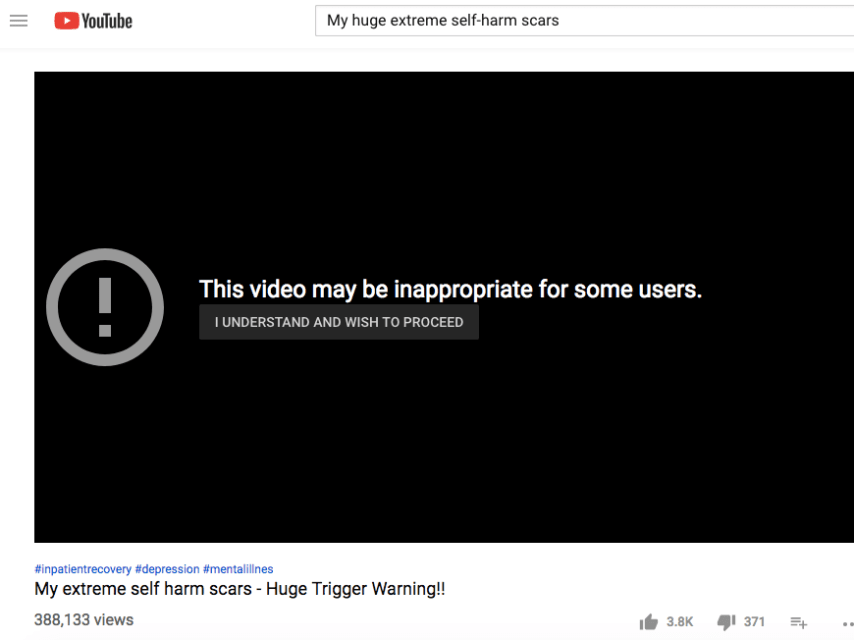

Potentially harmful content has reportedly slipped through the moderation algorithms again at YouTube.

According to a report by The Telegraph on Monday, YouTube has been recommending videos that contain graphic images of self-harm to users …

YouTube has been caught recommending "dozens" of graphic videos relating to self-harm to children.

The hugely popular video-sharing app has been blasted for promoting dangerous clips to users as young as 13.

YouTube is under fire for failin…

Similar Incidents
