CSETv1 Taxonomy Classifications

Incident Number

18

Notes (special interest intangible harm)

Significant gender/sex bias in Google image search results

Special Interest Intangible Harm

yes

Date of Incident Year

2015

Date of Incident Month

04

Date of Incident Day

09

CSETv0 Taxonomy Classifications

Problem Nature

Specification

Physical System

Software only

Level of Autonomy

High

Nature of End User

Amateur

Public Sector Deployment

Yes

Data Inputs

open source internet, user requests, user searches

Risk Subdomain

1.1. Unfair discrimination and misrepresentation

Risk Domain

- Discrimination and Toxicity

Entity

AI

Timing

Post-deployment

Intent

Unintentional

Incident Reports

Reports Timeline

Who’s a CEO? Google image results can shift gender biases

Jennifer Langston UW News

Getty Images last year created a new online image catalog of women in the workplace – one that countered visual stereotypes on the Internet of moms as frazz…

The University of Washington just released a preview of a study that claims search engine results can influence people's perceptions about how many men or women hold certain jobs. One figure quoted in the preview is that in a Google image s…

The Ellen Pao-Kleiner Perkins trial shone a light on discrimination in the tech industry, but for a more immediate look at the challenges women face in corporate America, look no further than a Google Images search.

Doing a search at the si…

Google is a modern oracle, and a miraculous one at that. It can lead you to the Perfect Strangers theme song lyrics, or to a satellite image of your childhood neighborhood, or to a blueprint for building a quantum computer. But for as much …

In today's modern professional world men can be doctors, investment bankers, and professors, while women, of course, can be nurses, secretaries, and sexy Halloween costume models—at least according to Google Image Search.

Why did we spend a…

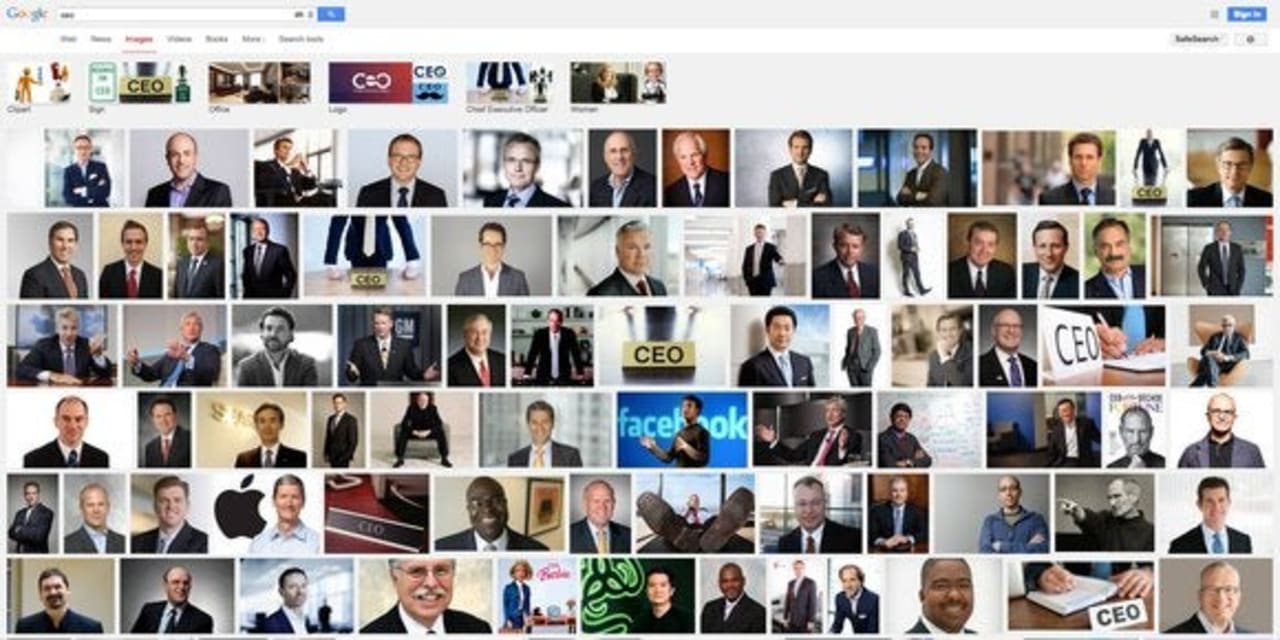

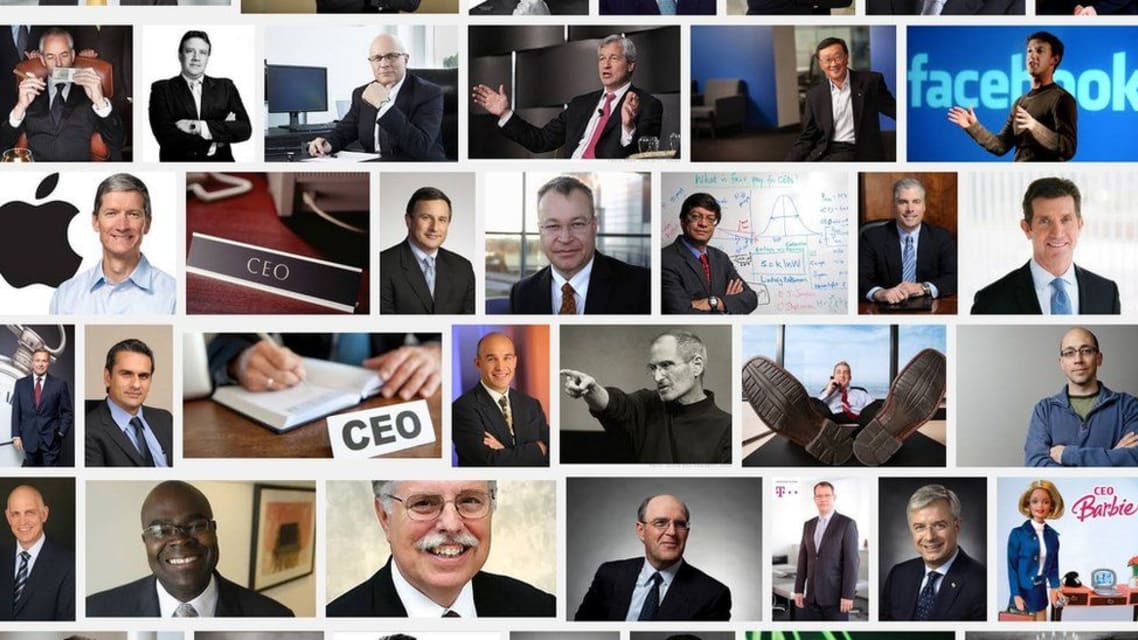

Not all doctors or CEOs are men. Not all nurses are women. But you might think otherwise if you searched for these professions in Google images.

It turns out that there's a noticeable gender bias in the image search results for some jobs, a…

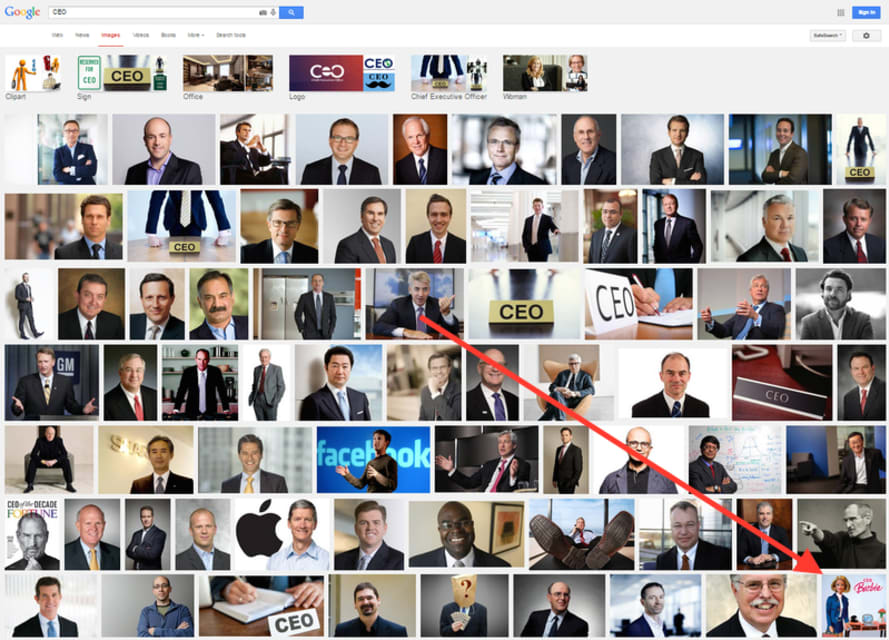

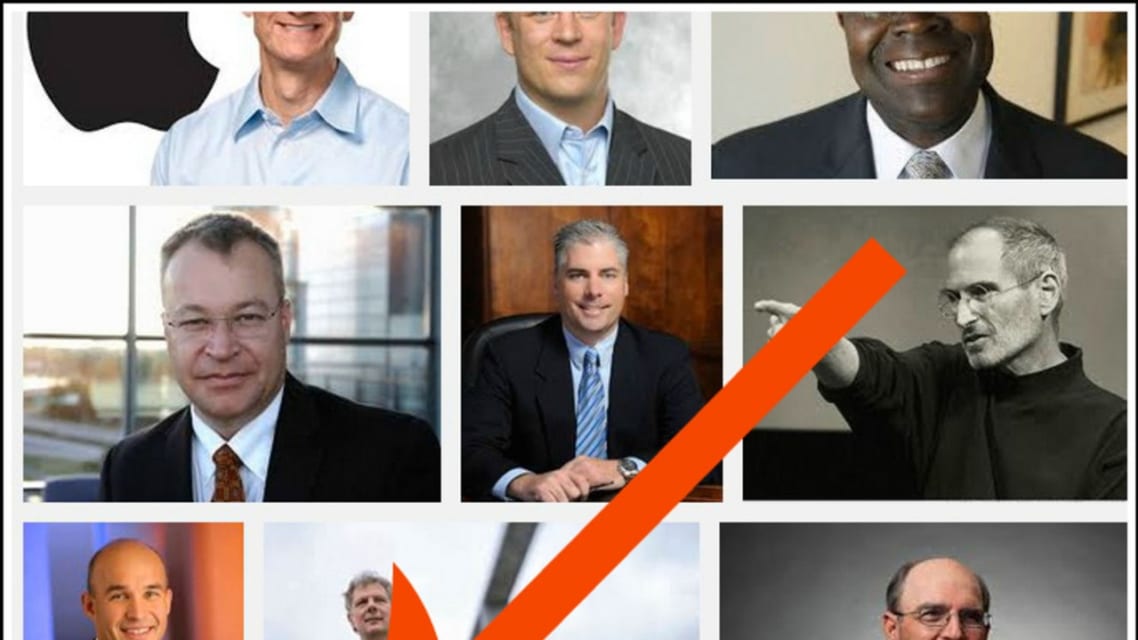

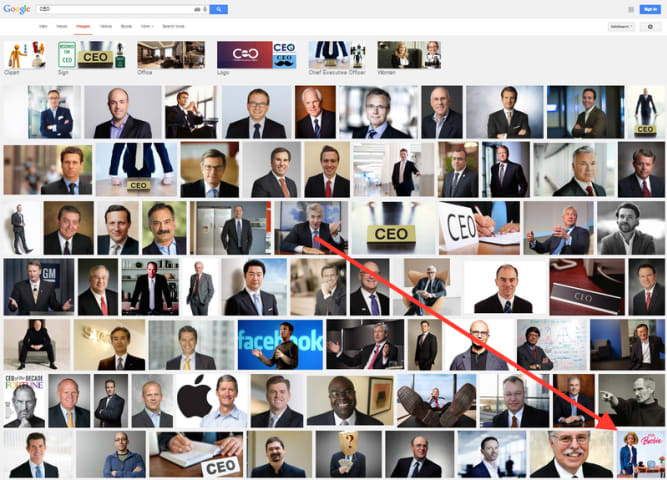

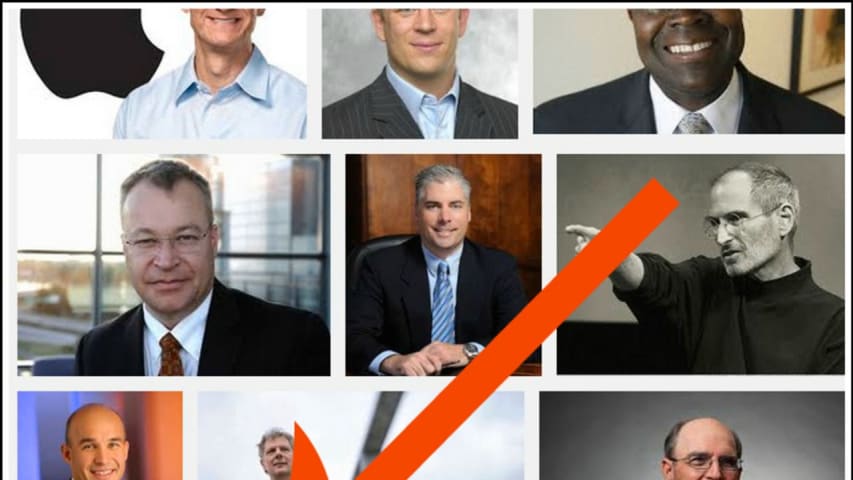

Try this: Google image "CEO." Notice anything? The first female Google image search result for "CEO" appears TWELVE rows down—and it's Barbie.

A recent study conducted at the University of Washington sought to examine how well female repres…

Search the term "CEO" in Google Images and the first picture of a woman you get is a picture of Barbie in a suit.

This "gender bias" has become apparent after a paper was published showing that many image searches for specific occupations fav…

Just when you thought biases were a completely human construct, more evidence suggests that both algorithms and interfaces could be biased, too.

The latest example of this is from a study conducted by researchers from Universi…

Fresh off the revelation that Google image searches for “CEO” only turn up pictures of white men, there’s new evidence that algorithmic bias is, alas, at it again. In a paper published in April, a team of researchers from Carnegie Mellon Un…

“You can’t be what you can’t see,” Marie Wilson of the White House Project said back in 2010. According to a new study, Google Images may not be helping to improve the situation.

AdView analyzed employment data to determine the number of wo…

Variants

Similar Incidents

Sexist and Racist Google Adsense Advertisements

AI Beauty Judge Did Not Like Dark Skin

Biased Google Image Results
