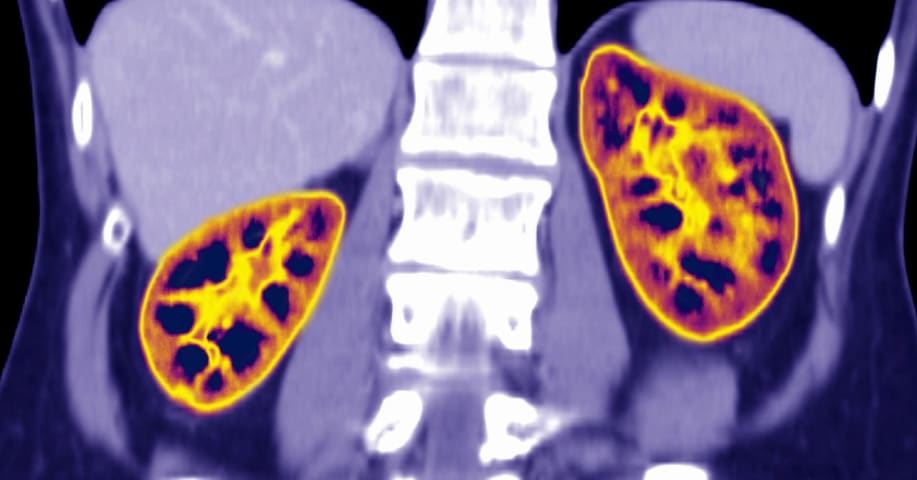

Description: Reports from Hyderabad describe two alleged patient harms after individuals acted on purportedly ChatGPT-generated medical advice instead of clinician guidance. A kidney-transplant recipient reportedly discontinued prescribed post-transplant medications based on a chatbot suggestion and experienced graft failure. In a separate case, a man with diabetes allegedly developed severe hyponatremia after following a chatbot-advised zero-salt diet.

Editor Notes: The reporting on this incident appears to draw on three discrete harm events to point to a wider concern in Hyderabad. The cases involving an unnamed kidney transplant patient and an elderly man with diabetes are specific to Hyderabad, whereas the third case mentioned in the reporting refers to a New York case, Incident 1166: ChatGPT Reportedly Suggests Sodium Bromide as Chloride Substitute, Leading to Bromism and Hospitalization.

Entities

Alleged: OpenAI and ChatGPT developed and deployed an AI system, which harmed Unnamed patient with diabetes in Hyderabad, Unnamed kidney transplant patient in Hyderabad, OpenAI users, General public of India, General public of Hyderabad, General public and ChatGPT users.

Alleged implicated AI system: ChatGPT

Incident Stats

Incident ID

1281

Report Count

2

Incident Date

2025-11-10

Editors

Daniel Atherton

Incident Reports



Doctors urge patients to stop using AI chatbots as a substitute for medical care

Clinicians in Hyderabad are seeing a sharp rise in patients acting on generic chatbot advice and paying a heavy price. Two recent cases underscore the risk: di…


Hyderabad: Doctors in Hyderabad have cautioned people against relying solely on artificial intelligence (AI) tools such as ChatGPT for medical advice. They emphasised that patients, especially those with chronic or serious health conditions…

Variants

A "variant" is an AI incident similar to a known case: it has the same causes, harms, and AI system. Instead of listing it separately, we group it under the first reported incident. Unlike other incidents, variants do not need to have been reported outside the AIID. Learn more from the research paper.
