Entities

CSETv1 Taxonomy Classifications

Incident Number

115

Special Interest Intangible Harm

no

Notes (AI special interest intangible harm)

There is no evidence or indication that the system led to any special interest intangible harms through its use or deployment.

Date of Incident Year

2020

Date of Incident Month

07

Date of Incident Day

Risk Subdomain

1.1. Unfair discrimination and misrepresentation

Risk Domain

1. Discrimination and Toxicity

Entity

AI

Timing

Post-deployment

Intent

Unintentional

Incident Reports

Reports Timeline


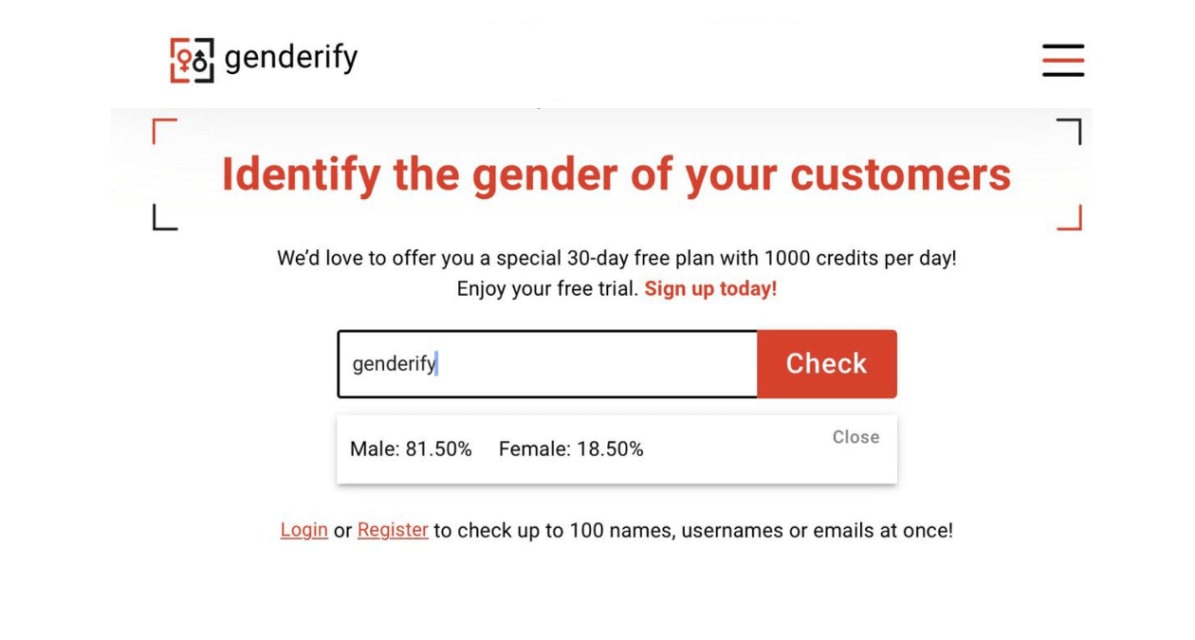

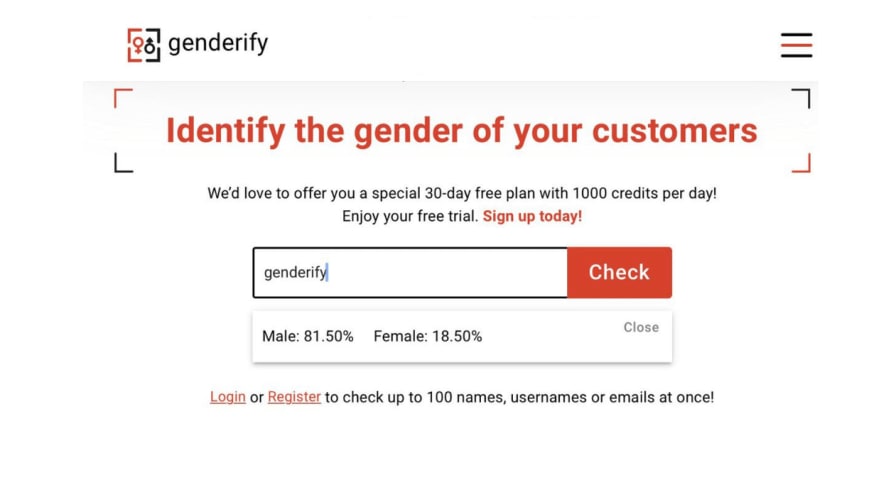

Some tech companies make a splash when they launch, others seem to bellyflop.

Genderify, a new service that promised to identify someone’s gender by analyzing their name, email address, or username with the help of AI, looks firmly to be in th…

Just hours after making waves and triggering a backlash on social media, Genderify — an AI-powered tool designed to identify a person’s gender by analyzing their name, username or email address — has been completely shut down.

Launched last…

The creators of a controversial tool that attempted to use AI to predict people's gender from their internet handle or email address have shut down their service after a huge backlash.

The Genderify app launched this month, and invited peop…

Variants

Similar Incidents
