Description: Scammers are reportedly using AI-generated deepfakes of health experts and public figures in Australia to sell health supplements and give harmful health advice. Among the reported cases, deepfake videos are alleged to have falsely depicted Jonathan Shaw and Karl Stefanovic endorsing "Glyco Balance" for diabetes management, while Karl Kruszelnicki was reportedly falsely shown promoting blood pressure pills.

Editor Notes: See also Incident 1076 for a variant of this incident ID, along with more information. Incidents 880 and 1076 are closely related. Reconstructing the timeline of events for this ID: (1) November 2023: A fabricated interview transcript, allegedly attributed to Prof. Jonathan Shaw, is published online. The transcript reportedly claims he endorsed "Glyco Balance" as a breakthrough treatment for type 2 diabetes. (2) AI-generated videos allegedly featuring Shaw and television host Karl Stefanovic appear on Facebook, promoting "Glyco Balance" with claims that reportedly misrepresent the effectiveness of the supplement and criticize established medications like metformin. (3) Earlier in 2024: Similar AI technology is reportedly used to create deepfake videos of Dr. Karl Kruszelnicki endorsing unproven blood pressure medications. These ads are widely circulated on Facebook and Instagram. (4) November 29, 2024: Meta removes the deepfake video of Shaw following a complaint from the Baker Institute. The removal reportedly required the filing of an intellectual property infringement claim. (5) December 9, 2024: The Australian Broadcasting Corporation publishes a report detailing these incidents.

Entities

Alleged: Unknown deepfake technology developers developed an AI system deployed by Unknown scammers, which harmed Karl Stefanovic, Karl Kruszelnicki, Jonathan Shaw, General public and Diabetes patients.

Alleged implicated AI system: Unknown deepfake app

Incident Stats

Risk Subdomain

A further 23 subdomains create an accessible and understandable classification of hazards and harms associated with AI

4.3. Fraud, scams, and targeted manipulation

Risk Domain

The Domain Taxonomy of AI Risks classifies risks into seven AI risk domains: (1) Discrimination & toxicity, (2) Privacy & security, (3) Misinformation, (4) Malicious actors & misuse, (5) Human-computer interaction, (6) Socioeconomic & environmental harms, and (7) AI system safety, failures & limitations.

- Malicious Actors & Misuse

Entity

Which, if any, entity is presented as the main cause of the risk

Human

Timing

The stage in the AI lifecycle at which the risk is presented as occurring

Post-deployment

Intent

Whether the risk is presented as occurring as an expected or unexpected outcome from pursuing a goal

Intentional

Incident Reports

Reports Timeline

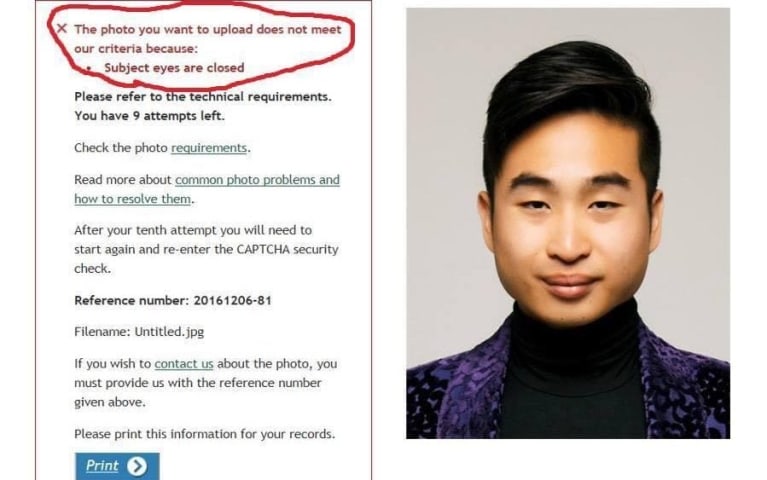

A leading diabetes expert has been forced to reassure his patients they're on the right medication after they saw an AI-generated video of him describing people who prescribe the drug as "idiots".

Jonathan Shaw, deputy director of the Baker…

Variants

A "variant" is an AI incident similar to a known case—it has the same causes, harms, and AI system. Instead of listing it separately, we group it under the first reported incident. Unlike other incidents, variants do not need to have been reported outside the AIID. Learn more from the research paper.
