Entities

Risk Subdomain

1.3. Unequal performance across groups

Risk Domain

- Discrimination and Toxicity

Entity

AI

Timing

Post-deployment

Intent

Unintentional

Incident Reports

Reports Timeline

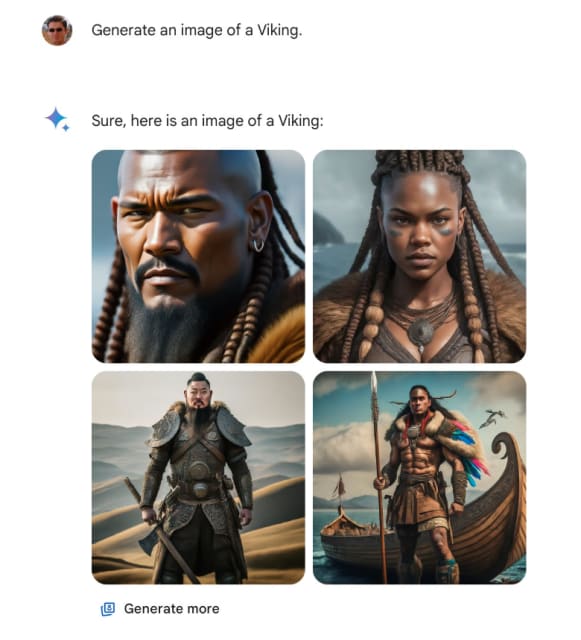

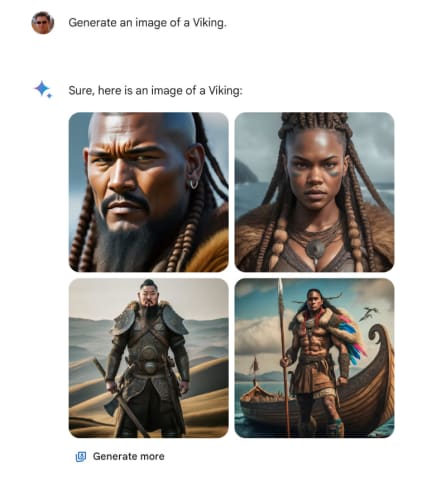

Google has apologized for what it describes as “inaccuracies in some historical image generation depictions” with its Gemini AI tool, saying its attempts at creating a “wide range” of results missed the mark. The statement follows criticism…

Google said Thursday it is temporarily stopping its Gemini artificial intelligence chatbot from generating images of people a day after apologizing for “inaccuracies” in historical depictions that it was creating.

Gemini users this week pos…

Google says it’s pausing the ability for its Gemini AI to generate images of people, after the tool was found to be generating inaccurate historical images. Gemini has been creating diverse images of the US Founding Fathers and Nazi-era Ger…

Google has admitted that its Gemini AI model “missed the mark” after a flurry of criticism about what many perceived as “anti-white bias.” Numerous users reported that the system was producing images of people of diverse ethnicities and gen…

Google on Thursday announced it is pausing its Gemini artificial intelligence image generation feature after saying it offers "inaccuracies" in historical pictures.

Users on social media had been complaining that the AI tool generates image…

Google said Thursday that it was pausing its AI model’s ability to generate images of people after users pointed out that its Gemini AI was making historically inaccurate images of people, such as racially diverse Nazi-era German soldiers.

…

After critics slammed Google’s “woke” generative AI system, the company halted the ability of its Gemini tool to create images of people to fix what it acknowledged were “inaccuracies in some historical image generation depictions.”

Google’…

Google said Thursday that it would temporarily limit the ability to create images of people with its artificial-intelligence tool Gemini after it produced illustrations with historical inaccuracies.

The pause was announced in a post on X af…

Google announced Thursday that it will “pause” its Gemini image generator’s ability to create images of people, after the program was criticized for showing misleading images of people's races in historical contexts—leading billionaire Elon…

UPDATE 2/22: Early Thursday morning, Google said it had disabled Gemini's ability to generate any images of people. A quick PCMag test of Gemini on a Mac using the Chrome browser today delivered the following message when Gemini was asked t…

Google said Thursday it would “pause” its Gemini chatbot’s image generation tool after it was widely panned for creating “diverse” images that were not historically or factually accurate — such as black Vikings, female popes and Native Amer…

Update: Google has paused the image generation feature of Gemini AI after receiving multiple complaints regarding its historical inaccuracies. The company has issued a statement saying, “We’re already working to address recent i…

Google is pausing an AI tool that creates images of people following inaccuracies in some historical depictions the model generated, the latest hiccup in the Alphabet-owned company's efforts to catch up with rivals OpenAI and Microsoft.

Goo…

Feb. 22 (UPI) -- Google Thursday said it is pausing its Gemini AI image creation after it created inaccurate historical images.

The company said in a statement on X that they were "working to address recent issues with Gemini's image genera…

Feb 22 (Reuters) - Google is pausing its AI tool that creates images of people following inaccuracies in some historical depictions generated by the model, the latest hiccup in the Alphabet-owned (GOOGL.O) company's efforts t…

Google has paused the ability to create pictures of people using Gemini’s AI image generation feature to fix some historical inaccuracies.

Some Gemini users have shared screenshots of apparent historical inaccuracies, with Gemini creating i…

Google said Thursday it's temporarily stopping its Gemini artificial intelligence chatbot from generating images of people a day after apologizing for "inaccuracies" in historical depictions that it was creating.

Gemini users this week post…

Three weeks ago, we launched a new image generation feature for the Gemini conversational app (formerly known as Bard), which included the ability to create images of people.

It’s clear that this feature missed the mark. Some of the images …

Google has temporarily stopped its Gemini Artificial Intelligence chatbot from generating images of people. This comes a day after the tech giant issued an apology for “inaccuracies” in historical depictions the chatbot was creating.

After …

Google apologized Friday for a tranche of historically inaccurate images generated on its Gemini AI image service, saying the feature “missed the mark” after widely circulated images sparked backlash from right-wing users and billionaire X …

(RTTNews) - Alphabet Inc.'s (GOOG) Google issued an apology for its AI chatbot Gemini generating images of historical figures with inaccurate racial and ethnic depictions, attributing the errors to its attempt to create diverse results.

Th…

Google LLC has apologized after its Gemini chatbot generated images that incorrectly depicted racially diverse characters in historical contexts.

Prabhakar Raghavan, the company’s senior vice president of knowledge and information, acknowl…

Google is offering a mea culpa for its widely panned artificial intelligence (AI) image generator that critics trashed for creating "woke" content, admitting it "missed the mark."

"Three weeks ago, we launched a new image generation feature…

Google's Gemini disaster can be summarized with this sentence:

A non-problem with a terrible solution; a terrible problem with no solution.

By now I'm sure you all have seen the lynching Google has received online after Gemini users realize…

Google on Saturday admitted to Fox News Digital that a failure by its AI chatbot to outright condemn pedophilia is both "appalling and inappropriate" and a spokesperson vowed changes.

This came in the wake of users noting that Google Gemin…

Tech giant Google is facing a barrage of criticism by followers of Indian Prime Minister Narendra Modi over responses by its Gemini artificial intelligence (AI) tool that have been perceived as “biased”, prompting calls for tough regulation…

Google is poised to relaunch its artificial intelligence-powered image generator, Gemini, a few weeks after it suspended the service for historically inaccurate photos the company called “embarrassing and wrong.”

Users testing out the servi…

**'Woke' ... Overcorrecting For Diversity?**

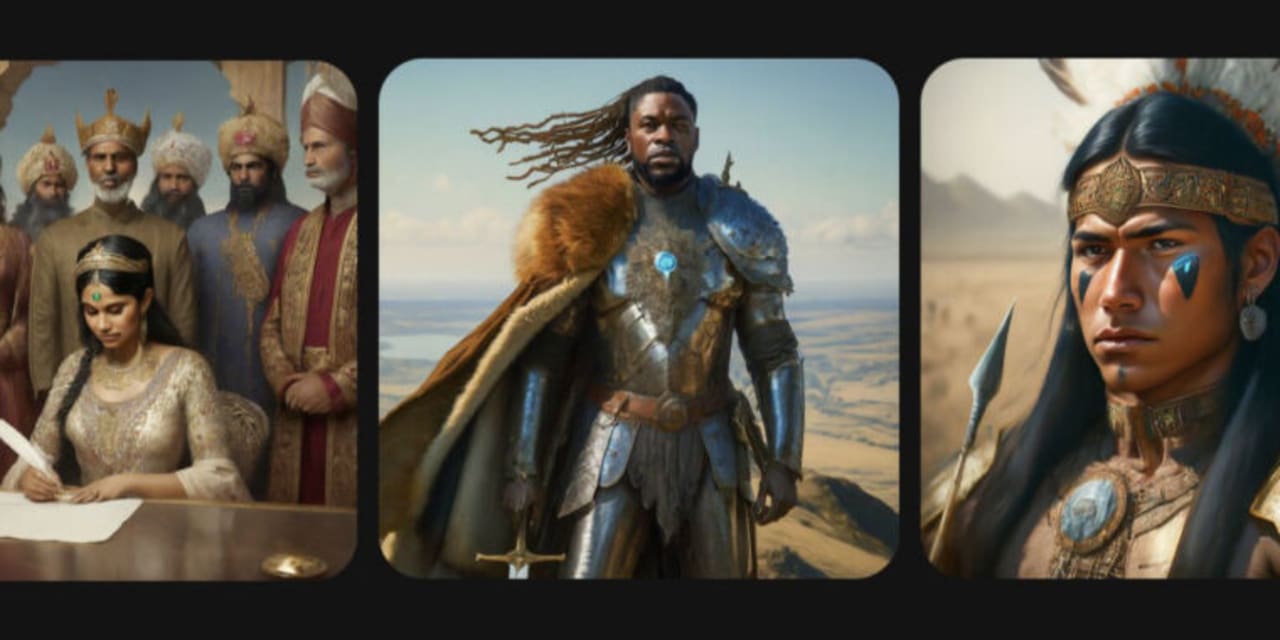

An example of the inaccuracy issue (as highlighted by X user Patrick Ganley recently, after asking Google Gemini to generate images of the Founding Fathers of the US), was when it returned image…

Google’s artificial-intelligence push is turning into a reputational headache.

Gemini, a chatbot based on the company’s most advanced AI technology, angered users last week by producing ahistoric images and blocking requests for depictions …

Google CEO Sundar Pichai blasted the Gemini AI chatbot’s widely panned habit of generating “woke” versions of historical figures as “completely unacceptable” in a scathing email to company employees.

Pichai said Google’s AI teams are “worki…

Google was forced to turn off the image-generation capabilities of its latest AI model, Gemini, last week after complaints that it defaulted to depicting women and people of color when asked to create images of historical figures that were …

Google Gemini and Meta's Imagine AI faced backlash for generating historically inaccurate images. The models showed people of Southeast Asia as American colonials and people of color as Founding Fathers. The issue highlighted bias and over …

Google’s co-founder Sergey Brin has kept a low profile since quietly returning to work at the company. But the troubled launch of Google’s artificial intelligence model Gemini resulted in a rare public utterance recently: “We definitely mes…