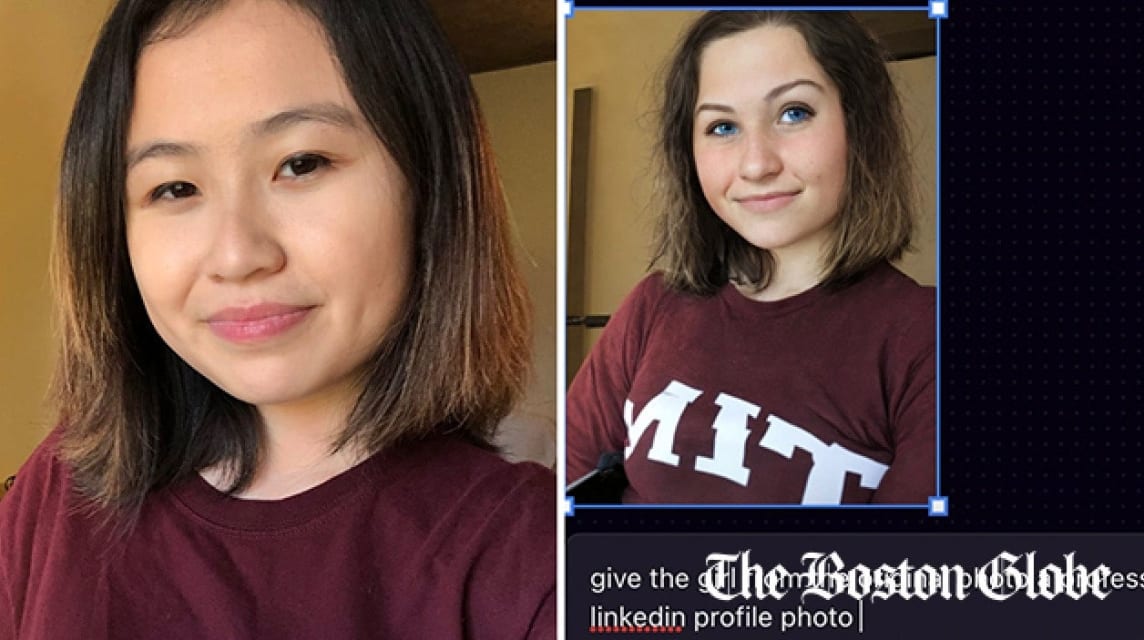

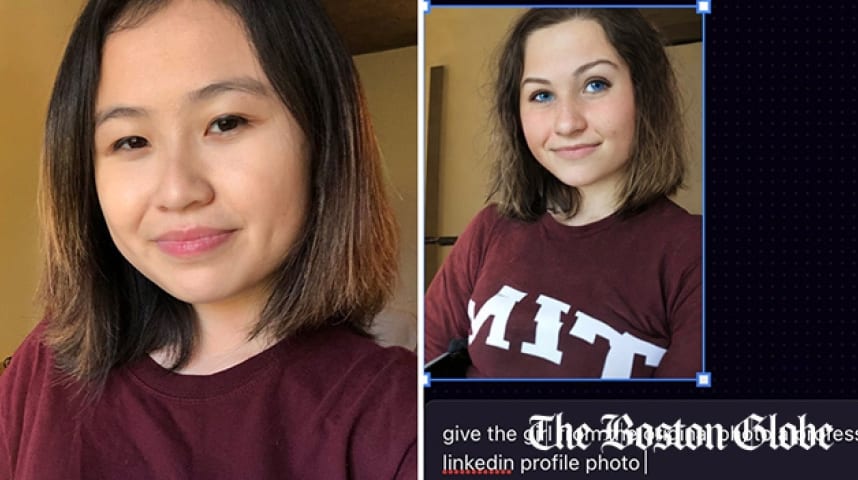

Description: An AI application modified an MIT student's photo to appear 'professional' by lightening her skin and changing her eye color to blue, highlighting the racial bias in the training data of the program.

Entities

Alleged: Playground AI developed and deployed an AI system, which harmed Rona Wang and racial minorities who may have experienced the same result.

Incident Stats

Risk Subdomain

A further 23 subdomains create an accessible and understandable classification of hazards and harms associated with AI

1.1. Unfair discrimination and misrepresentation

Risk Domain

The Domain Taxonomy of AI Risks classifies risks into seven AI risk domains: (1) Discrimination & toxicity, (2) Privacy & security, (3) Misinformation, (4) Malicious actors & misuse, (5) Human-computer interaction, (6) Socioeconomic & environmental harms, and (7) AI system safety, failures & limitations.

- Discrimination and Toxicity

Entity

Which, if any, entity is presented as the main cause of the risk

AI

Timing

The stage in the AI lifecycle at which the risk is presented as occurring

Post-deployment

Intent

Whether the risk is presented as occurring as an expected or unexpected outcome from pursuing a goal

Unintentional

Incident Reports

Reports Timeline

Rona Wang is no stranger to using artificial intelligence.

A recent MIT graduate, Wang, 24, has been experimenting with the variety of new AI language and image tools that have emerged in the past few years, and is intrigued by the ways the…

Variants

A "variant" is an AI incident similar to a known case—it has the same causes, harms, and AI system. Instead of listing it separately, we group it under the first reported incident. Unlike other incidents, variants do not need to have been reported outside the AIID. Learn more from the research paper.


Similar Incidents


Fake LinkedIn Profiles Created Using GAN Photos

· 4 reports

TayBot

· 28 reports

Uber AV Killed Pedestrian in Arizona

· 25 reports
