GMF Taxonomy Classifications

Known AI Goal Snippets

Snippet Text: "Everyone's having a grand old time feeding outrageous prompts into the viral DALL-E Mini image generator — but as with all artificial intelligence, it's hard to stamp out the ugly, prejudiced edge cases."
Related Classifications: Visual Art Generation

Known AI Goal Classification Discussion

Visual Art Generation: Annotation from background knowledge.

Risk Subdomain

1.1. Unfair discrimination and misrepresentation

Risk Domain

- Discrimination and Toxicity

Entity

AI

Timing

Post-deployment

Intent

Unintentional

Incident Reports

Reports Timeline

Everyone's having a grand old time feeding outrageous prompts into the viral DALL-E Mini image generator — but as with all artificial intelligence, it's hard to stamp out the ugly, prejudiced edge cases.

Released by AI artist and programmer…

The only real limits to DALL-E Mini are the creativity of your own prompts and its uncanny brushwork. The accessible-to-all AI internet image generator can conjure up blurry, twisted, melting approximations of whatever scenario you can thin…

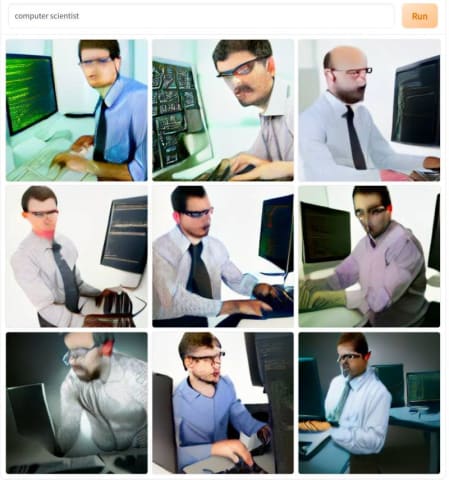

This weekend, I tried out DALL-e mini hosted by Hugging Face. It's an AI model that generates images from any word prompt. Every image it generated for "expert" "data scientist" "computer scientist" showed some distorted version of a white …