Risk Subdomain

7.3. Lack of capability or robustness

Risk Domain

AI system safety, failures, and limitations

Entity

AI

Timing

Post-deployment

Intent

Unintentional

Incident Reports

Reports Timeline

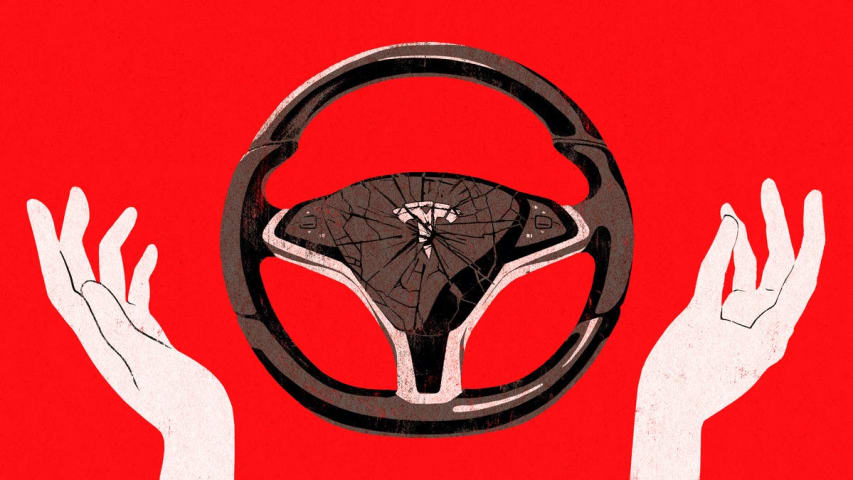

Tesla Motors came under renewed questioning about the safety of its Autopilot technology after news emerged on Wednesday of a fatal crash in China that may have occurred while the automated driver-assist system was operating.

The crash took…

In early 2016, 48-year-old Gao Jubin had high hopes for the future, with plans to ease up on running a logistics company and eventually turn over control of the business to his son, Gao Yaning. But his plans were abruptly altered when Yanin…

Vehicle maker Tesla has confirmed that one of its electric vehicles involved in a fatal crash in northern China two years ago did have its "Autopilot" function engaged at the time of the crash, reports China Central Television (CCTV).

The T…

Shanghai (Gasgoo) - A lawsuit over a fatal Tesla crash in China has recently seen a new development. Faced with ample evidence, Tesla Motors admitted that the vehicle was in "Autopilot" mode when the fatal crash happened, according…