Risk Subdomain

1.1. Unfair discrimination and misrepresentation

Risk Domain

- Discrimination and Toxicity

Entity

AI

Timing

Post-deployment

Intent

Intentional

Incident Reports

Reports Timeline

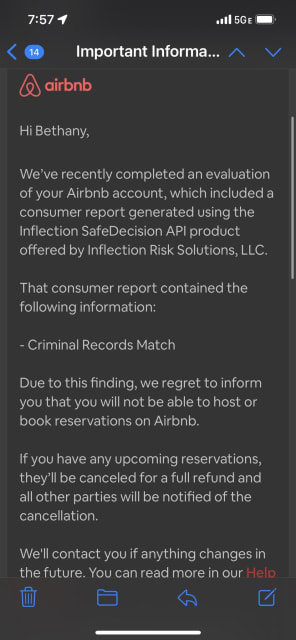

EPIC has filed a complaint with the FTC, alleging that Airbnb has committed unfair and deceptive practices in violation of the FTC Act and the Fair Credit Reporting Act. Airbnb secretly rates customers' “trustworthiness” based on a patent th…

Airbnb may be using automated decision-making to leave some users out in the cold.

If you've ever been in the market for a holiday rental property in the past few years, chances are you've been on Airbnb.

The US-based platform has become a …

Airbnb may be using automated decision-making to boot users from the short-term rental platform, based on factors like social media activity, employment history and IP address.

Consumer advocacy group Choice called out Airbnb in a report questi…

Airbnb may be digging through users’ old social media posts to keep people it deems untrustworthy off the site.

If the algorithm it uses doesn't like what it sees, it seems users can be rejected without explanation.

That’s what happened to …

An investigation by the Australian consumer rights organisation, Choice, has found that the online accommodation marketplace Airbnb is secretly collecting users’ personal data to assess whether they are trustworthy enough to make a booking.…

Did I just… get a lifetime @Airbnb booking ban for a 9 year old possession charge?!? 🤯