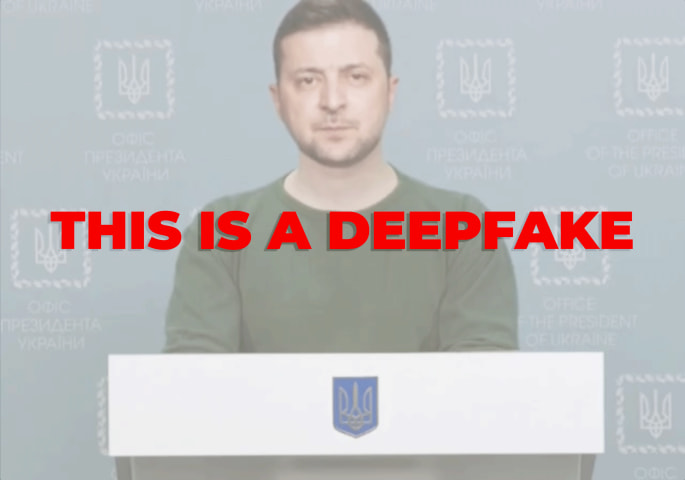

Description: A reportedly AI-manipulated video circulated on social media that falsely portrayed U.S. President-elect Donald Trump calling for the release of Nigerian separatist leader Nnamdi Kanu and threatening sanctions against Nigeria. The video allegedly used old footage paired with AI-generated audio mimicking Trump's voice. Fact-checkers identified the video as inauthentic, noting mismatched lip-sync, fabricated quotes, and the fictitious date of "November 31st."

Editor Notes: Timeline notes: The reported posting of the video to social media was November 20, 2024. FactCheckAfrica published its report on the video on November 22, 2024. The report was included in the database on April 21, 2025. For a similar incident, see Incident 1051.

Entities

Alleged: Unknown deepfake technology developers and Unknown voice cloning technology developers developed an AI system deployed by Unknown actors, which harmed Nnamdi Kanu, Media integrity, General public of Nigeria, Electoral integrity, and Donald Trump.

Alleged implicated AI systems: Unknown voice cloning technology, Unknown deepfake app, TikTok, and Social media platforms

Incident Stats

Risk Subdomain

A further 23 subdomains create an accessible and understandable classification of hazards and harms associated with AI

4.1. Disinformation, surveillance, and influence at scale

Risk Domain

The Domain Taxonomy of AI Risks classifies risks into seven AI risk domains: (1) Discrimination & toxicity, (2) Privacy & security, (3) Misinformation, (4) Malicious actors & misuse, (5) Human-computer interaction, (6) Socioeconomic & environmental harms, and (7) AI system safety, failures & limitations.

- Malicious Actors & Misuse

Entity

Which, if any, entity is presented as the main cause of the risk

AI

Timing

The stage in the AI lifecycle at which the risk is presented as occurring

Post-deployment

Intent

Whether the risk is presented as occurring as an expected or unexpected outcome from pursuing a goal

Intentional

Incident Reports

Claim

A viral video on social media shows the President-elect of the United States, Donald Trump, urging the Nigerian government to release Nnamdi Kanu, the leader of the Indigenous People of Biafra (IPOB).

Verdict

False. The video is d…

Variants

A "variant" is an AI incident similar to a known case—it has the same causes, harms, and AI system. Instead of listing it separately, we group it under the first reported incident. Unlike other incidents, variants do not need to have been reported outside the AIID. Learn more from the research paper.
