User Story Spotlights: Behind the Scenes of Database Developments

Each major feature of the AI Incident Database (AIID) is built as a series of incremental developments within the "Agile" framework, where each capability is grounded in a user story describing the wins the feature achieves for its users. We will spotlight these user stories over time to show how our efforts build on one another toward promoting responsible AI by learning from past mistakes.

In the past month, user experience (UX) engineer Luna McNulty, database editor Khoa Lam, and full stack engineer Cesar Varela each accomplished milestones supporting the ability to visualize and group incidents to drive user insights.

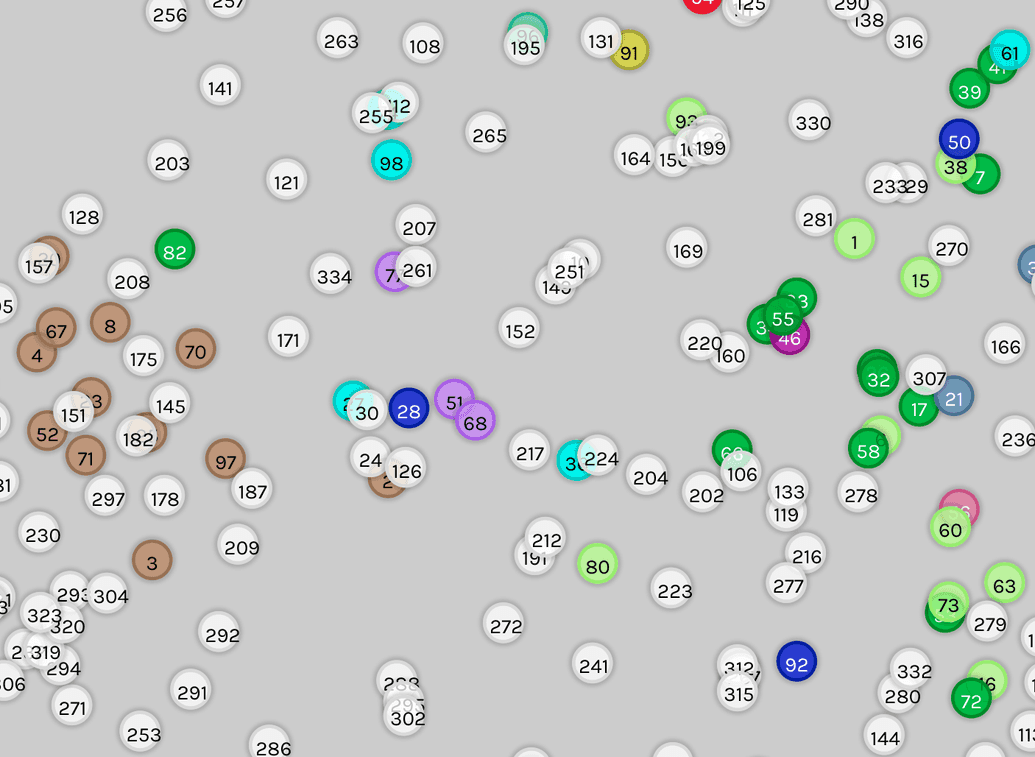

Featured User Story: Spatial Visualization

User Story: "I want to understand incident clusters so I can motivate my {research, policy, advocacy, engineering} work"

A key function of the AIID is making incidents discoverable, especially as the number of incidents grows over time. The AIID’s first taxonomy, provided by the Center for Security and Emerging Technology (CSET) at Georgetown University, gave users a powerful tool for searching and discovering incidents through classification filters, but incident reports contain far more information than a single taxonomy can capture. UX engineer Luna McNulty added a layer on top of this taxonomy and the incident embedding model to group incidents according to their textual properties. The data visualization places each incident near similar incidents, as measured by the embedding model, and colors incidents by the selected CSET classification type. As the number of incidents increases and the incident data becomes richer, additional ways to visualize the data will help tell the story of past AI harms.
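The post does not say how the embedding space is flattened into a two-dimensional plot. As a rough illustration only, the sketch below assumes each incident already has an embedding vector, projects the vectors onto their top two principal components (PCA, a stand-in chosen here; the AIID may well use a different projection), and carries each incident's classification label along so a plotting tool could color points by it. The function names, the sample vectors, and the `category_a`/`category_b` labels are all hypothetical.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple

Vector = List[float]


def _orthonormalize(vec: Sequence[float], basis: List[Vector]) -> Optional[Vector]:
    """Remove components along already-found axes, then scale to unit length."""
    out = list(vec)
    for axis in basis:
        dot = sum(a * b for a, b in zip(out, axis))
        out = [a - dot * b for a, b in zip(out, axis)]
    norm = math.sqrt(sum(a * a for a in out))
    if norm < 1e-12:
        return None  # nothing left outside the span of `basis`
    return [a / norm for a in out]


def project_2d(vectors: List[Vector], iterations: int = 200) -> List[Tuple[float, float]]:
    """Project vectors onto their top two principal components (PCA stand-in)."""
    n, dim = len(vectors), len(vectors[0])
    means = [sum(v[i] for v in vectors) / n for i in range(dim)]
    centered = [[v[i] - means[i] for i in range(dim)] for v in vectors]
    cov = [[sum(r[i] * r[j] for r in centered) / n for j in range(dim)] for i in range(dim)]

    axes: List[Vector] = []
    for _ in range(2):
        # Power iteration, kept orthogonal to the axes found so far.
        start = [1.0 / (i + 1) for i in range(dim)]
        vec = _orthonormalize(start, axes) or [0.0] * dim
        for _ in range(iterations):
            nxt = [sum(cov[i][j] * vec[j] for j in range(dim)) for i in range(dim)]
            nxt = _orthonormalize(nxt, axes)
            if nxt is None:
                break
            vec = nxt
        axes.append(vec)

    # Each incident's (x, y) is its centered embedding dotted with the two axes.
    return [
        (sum(r[k] * axes[0][k] for k in range(dim)), sum(r[k] * axes[1][k] for k in range(dim)))
        for r in centered
    ]


def layout_incidents(incidents: Dict[int, Tuple[Vector, str]]) -> List[Tuple[int, float, float, str]]:
    """Return (incident_id, x, y, classification) rows ready for a scatter plot."""
    ids = sorted(incidents)
    coords = project_2d([incidents[i][0] for i in ids])
    return [(i, x, y, incidents[i][1]) for i, (x, y) in zip(ids, coords)]


# Hypothetical 3-dimensional "embeddings"; real text embeddings are much longer.
points = layout_incidents({
    1: ([1.0, 0.0, 0.0], "category_a"),
    2: ([0.9, 0.1, 0.0], "category_a"),
    3: ([0.0, 1.0, 0.0], "category_b"),
    4: ([0.1, 0.9, 0.0], "category_b"),
})
for row in points:
    print(row)
```

In this toy example, incidents 1 and 2 land on one side of the plot and incidents 3 and 4 on the other, which is the "similar incidents sit close together" behavior the visualization relies on; the classification label only decides the color, not the position.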

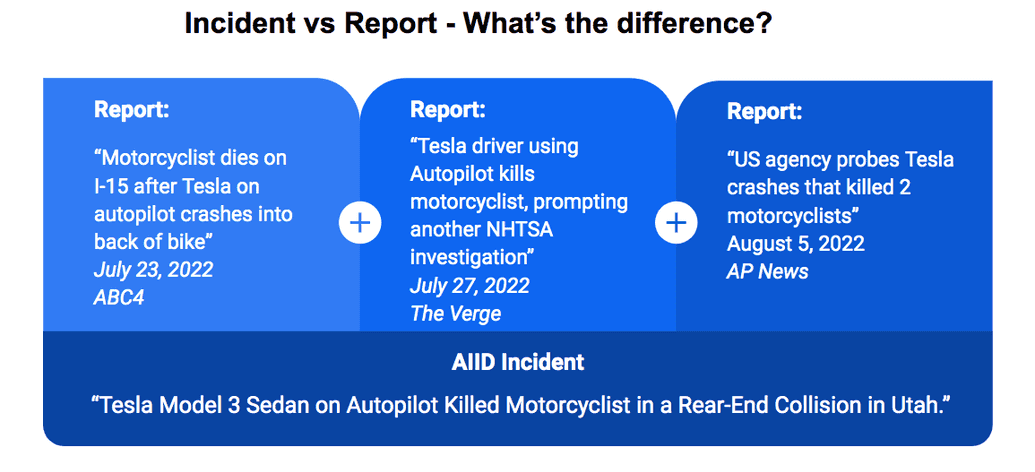

Featured User Story: Enriching Incident Data

User Story: "I want incidents to have titles so I can refer to them in discussion with other {researchers, policy makers, engineers, people}"

From its start, the AIID has collected individual reports (e.g., news articles) of AI harms and retrospectively grouped them into incident pages presenting context and information about the same event. As the database has grown, it has become necessary to distill the information in incident reports into comprehensive summaries of each incident. Summaries allow database users to cut through sensationalized headlines to a consensus view of what occurred. The figure below shows the distinction between reports and incidents, and gives an example of how individual report titles translate into a composite incident title.

Database editor Khoa Lam systematically worked through all incident records to add titles, descriptions, and alleged parties (deployers, developers, and harmed parties). The new metadata opens the door for many future database developments that group and present incidents in ways that yield insights into trends in AI harms.

Both of these user stories ultimately support our mission to build responsible AI practices by understanding the past, but we also want to foster a culture of responsibility for responding to incidents after they occur. This brings us to the final user story of this post: Cesar's work on entity pages.

Featured User Story: Entity Pages

User Story: "I want to see pages summarizing my organization according to the incidents we are associated with so I can develop a program to mitigate their effects"

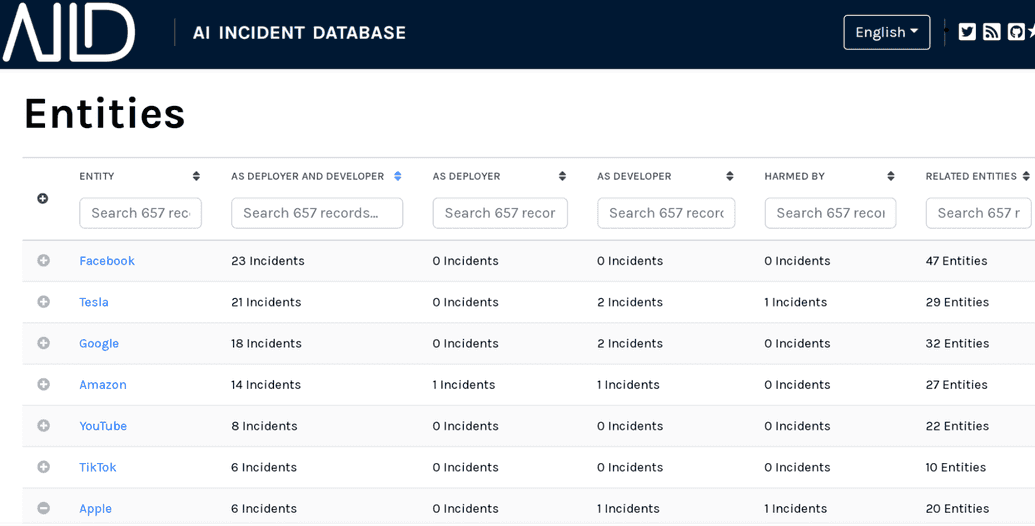

Building on Khoa’s work enriching incidents with key metadata, full stack engineer Cesar Varela developed a way to view incidents grouped by “entity”. Database visitors can view pages highlighting the incidents that various companies are alleged to have caused or have responded to. In a future release, we will give these entities the ability to add incident responses (i.e., details from their perspective on what happened, why it happened, and what they will do to prevent or mitigate its recurrence). Response processes like these build a collective sense of responsibility for future AI incidents and are critical to the production and deployment of socially beneficial AI systems.
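One way to picture the grouping behind entity pages is an inverted index from each entity name to the incidents it appears in, split by the alleged-party role recorded in the incident metadata. The sketch below is an illustration under stated assumptions, not the AIID's implementation: the `Incident` record, its field names, the role keys, the whitespace-and-lowercase name normalization, and `build_entity_pages` are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, List, Set

# Hypothetical role keys mirroring the alleged-party metadata (deployers, developers, harmed parties).
ROLES = ("deployer", "developer", "harmed_party")


@dataclass
class Incident:
    incident_id: int
    title: str
    deployers: List[str] = field(default_factory=list)
    developers: List[str] = field(default_factory=list)
    harmed_parties: List[str] = field(default_factory=list)


def entity_key(name: str) -> str:
    """Naive normalization so 'Acme' and ' acme ' land on the same page."""
    return " ".join(name.split()).lower()


def build_entity_pages(incidents: List[Incident]) -> Dict[str, Dict[str, List[int]]]:
    """Map each entity to the sorted incident IDs it is linked to, per role."""
    pages: Dict[str, Dict[str, Set[int]]] = defaultdict(
        lambda: {role: set() for role in ROLES}
    )
    for inc in incidents:
        by_role = {
            "deployer": inc.deployers,
            "developer": inc.developers,
            "harmed_party": inc.harmed_parties,
        }
        for role, names in by_role.items():
            for name in names:
                pages[entity_key(name)][role].add(inc.incident_id)
    # Convert sets to sorted lists so each page renders in a stable order.
    return {
        entity: {role: sorted(ids) for role, ids in roles.items()}
        for entity, roles in pages.items()
    }


pages = build_entity_pages([
    Incident(1, "Hypothetical incident one", deployers=["Acme"], developers=["Acme"], harmed_parties=["Drivers"]),
    Incident(2, "Hypothetical incident two", deployers=["Beta Co"], developers=[" acme "]),
])
print(pages["acme"])
```

Keeping roles separate lets one entity page distinguish incidents where a company deployed a system from those where it only developed one. String normalization is deliberately simplistic here; a production system would more likely rely on curated entity identifiers.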

Looking to Dig Deeper?

Our goal is not only to be a repository of AI incidents, but also to contribute to the mission of responsible AI development and deployment by presenting data in ways that support insights. If you have an idea for a new way of visualizing or searching incidents, please contact us!