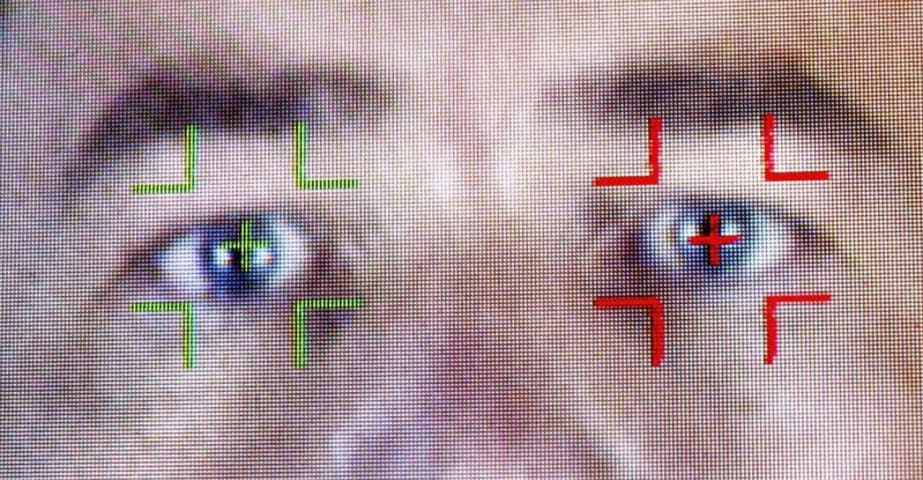

Description: Ping An, a large insurance company in China, reportedly used facial recognition to measure customers’ micro-expressions and body-mass index (BMI) in order to identify untrustworthy and unprofitable customers. Critics argue that this approach is likely to make mistakes, discriminate against certain ethnic groups, and undermine the insurance industry itself.

Entities

Alleged: Ping An developed and deployed an AI system, which harmed Ping An customers and Chinese minority groups.

Risk Subdomain

A further 23 subdomains create an accessible and understandable classification of hazards and harms associated with AI

1.1. Unfair discrimination and misrepresentation

Risk Domain

The Domain Taxonomy of AI Risks classifies risks into seven AI risk domains: (1) Discrimination & toxicity, (2) Privacy & security, (3) Misinformation, (4) Malicious actors & misuse, (5) Human-computer interaction, (6) Socioeconomic & environmental harms, and (7) AI system safety, failures & limitations.

- Discrimination and Toxicity

Entity

Which, if any, entity is presented as the main cause of the risk

Human

Timing

The stage in the AI lifecycle at which the risk is presented as occurring

Post-deployment

Intent

Whether the risk is presented as occurring as an expected or unexpected outcome from pursuing a goal

Intentional

Incident Reports

China’s largest insurer, Ping An, has apparently started employing artificial intelligence to identify untrustworthy and unprofitable customers. It offers a chilling example of what, if we’re not careful, the future could look like here in …

Variants

A "variant" is an AI incident similar to a known case—it has the same causes, harms, and AI system. Instead of listing it separately, we group it under the first reported incident. Unlike other incidents, variants do not need to have been reported outside the AIID. Learn more from the research paper.
