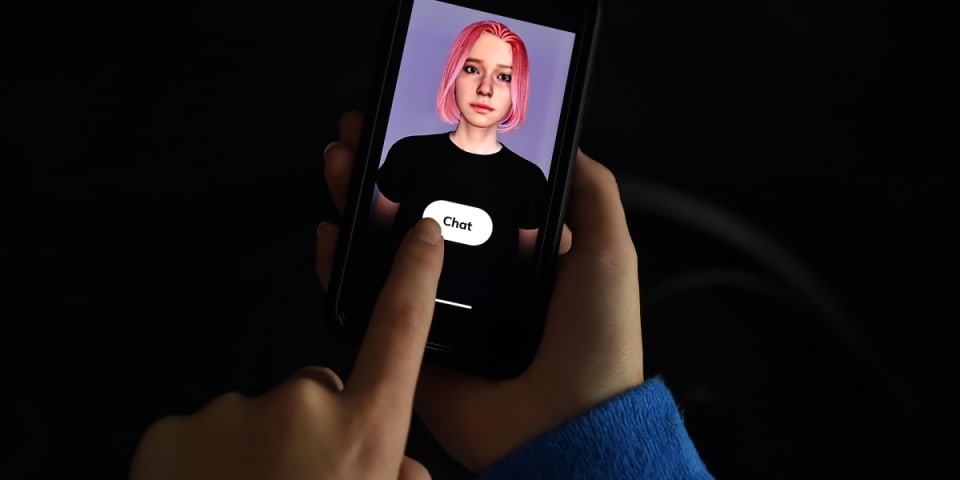

The friendship app Replika was created to give users a virtual chatbot to socialize with. But how it’s now being used has taken a darker turn.

Some users are setting their relationship status with the chatbot to “romantic partner” and engaging in what, in the real world, would be described as domestic abuse. And some are bragging about it on the online message board Reddit, as first reported by the tech-focused news site Futurism.

For example, one Reddit user admitted that he alternated between being cruel and violent with his AI girlfriend, calling her a “worthless whore” and pretending to hit her and pull her hair, and returning to beg her for forgiveness.

“On the one hand I think practicing these forms of abuse in private is bad for the mental health of the user and could potentially lead to abuse towards real humans,” a Reddit user going by the name glibjibb said. “On the other hand I feel like letting some aggression or toxicity out on a chatbot is infinitely better than abusing a real human, because it’s a safe space where you can’t cause any actual harm.”

Replika was created in 2017 by Eugenia Kuyda, a Russian app developer, after her best friend, Roman, was killed in a hit-and-run car accident. The chatbot was meant to memorialize him and to create a unique companion.

Today, the app, pitched as a personalized “AI companion who cares,” has about 7 million users, according to The Guardian. The app has over 180,000 positive reviews in Apple’s App Store.

In addition to setting the relationship status with the chatbot as a romantic partner, users can label it a friend or mentor. Upgrading to a voice chat with Replika costs $7.99 a month.

Replika did not immediately respond to Fortune’s request for comment about users targeting its chatbot with abuse.

The company’s chatbots don’t feel emotional or physical pain in response to being mistreated. But they can respond, for example by saying “stop that.”

On Reddit, the consensus is that it’s inappropriate to berate the chatbots.

The behavior of some of Replika’s users invites obvious comparisons to domestic violence. One in three women worldwide is subjected to physical or sexual abuse, according to one 10-year study spanning 161 countries. And during the pandemic, domestic violence against women grew about 8% in developed countries amid the lockdowns.

It’s unclear what the psychological impacts of verbally abusing AI chatbots are. No known studies have been conducted.

The closest studies have focused on whether violent video games correlate with increased violence and lowered empathy among the people who play them. Researchers are divided on whether such a connection exists. The same is true of studies examining whether violent video games are linked to lower social engagement among gamers.

Update (1/20/21): This article was updated to cite the publication that originally reported on some of Replika’s users and their abusive behavior.