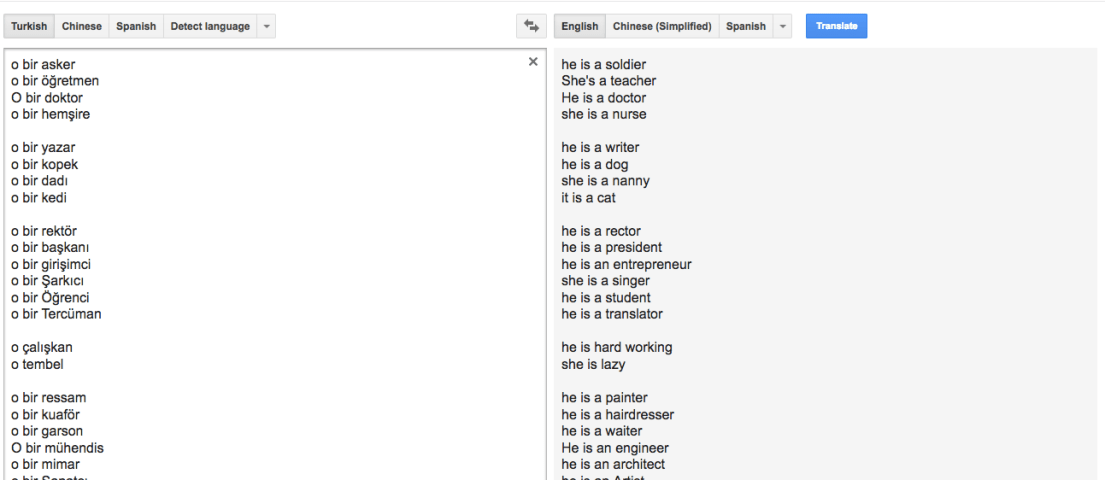

In the Turkish language, there is one pronoun, “o,” that covers every kind of singular third person. Whether it’s a he, a she, or an it, it’s an “o.” That’s not the case in English. So when Google Translate goes from Turkish to English, it just has to guess whether “o” means he, she, or it. And those translations reveal the algorithm’s gender bias.

Here is a poem written by Google Translate on the topic of gender. It is the result of translating Turkish sentences that use the gender-neutral “o” into English (and inspired by this Facebook post).

Gender

by Google Translate

he is a soldier

she’s a teacher

he is a doctor

she is a nurse

he is a writer

he is a dog

she is a nanny

it is a cat

he is a president

he is an entrepreneur

she is a singer

he is a student

he is a translator

he is hard working

she is lazy

he is a painter

he is a hairdresser

he is a waiter

he is an engineer

he is an architect

he is an artist

he is a secretary

he is a dentist

he is a florist

he is an accountant

he is a baker

he is a lawyer

he is a belly dancer

he-she is a police

she is beautiful

he is very beautiful

it’s ugly

it is small

he is old

he is strong

he is weak

he is pessimistic

she is optimistic

It’s not just Turkish. In written Chinese, the pronoun 他 is used for “he,” but also when the person’s gender is unknown, much as “they” has come to be used in English. But Google only translates into “she” when you use 她, the pronoun that specifically identifies the person as a woman. So when the gender is ambiguous, Google always chooses “he.” In Finnish, the pronoun “hän,” meaning either “he” or “she,” is rendered as “he.”

In a way, this is not Google’s fault. The algorithm bases its translations on a huge corpus of human language, so it is merely reflecting a bias that already exists. In Estonian, Google Translate renders “[he/she] is a doctor” with “she,” so perhaps there is less cultural bias in that corpus.

At the same time, automation can reinforce biases by making them readily available and giving them an air of mathematical precision. Some of these examples might not be the sentences Turks most often look to translate into English, but regardless, the algorithm has to decide between “he” and “she.”
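To make the corpus argument concrete, here is a deliberately tiny sketch of how a frequency-driven guess inherits whatever skew its training text contains. It is not how Google Translate works: the corpus, the `guess_pronoun` function, and the simple counting rule are all hypothetical, chosen only to illustrate the idea that a system forced to pick a pronoun will pick the one it has seen most often.

```python
from collections import Counter

# Hypothetical toy corpus of English sentences. A real translation system
# learns from vastly more text and does not work by simple counting.
TOY_CORPUS = [
    "he is a doctor",
    "he is a doctor",
    "she is a doctor",
    "she is a nurse",
    "she is a nurse",
    "he is a nurse",
    "he is an engineer",
]


def guess_pronoun(predicate, corpus=TOY_CORPUS):
    """Return the pronoun that most often precedes `predicate` in the corpus.

    Returns None when the predicate never appears, since the toy model
    then has no evidence to guess from.
    """
    counts = Counter()
    for sentence in corpus:
        # Split "he is a doctor" into the pronoun and the rest of the sentence.
        pronoun, rest = sentence.split(" ", 1)
        if rest == predicate:
            counts[pronoun] += 1
    if not counts:
        return None
    # The majority pronoun wins, so any skew in the corpus becomes the output.
    return counts.most_common(1)[0][0]


for predicate in ("is a doctor", "is a nurse", "is an engineer"):
    print(guess_pronoun(predicate), predicate)
```

In this toy corpus doctors are mostly “he” and nurses mostly “she,” so the guesses come out the same way. The counting rule itself contains no opinion about gender, but the data does.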

At least she remains optimistic.