Incident Status

Risk Subdomain

1.2. Exposure to toxic content

Risk Domain

- Discrimination and Toxicity

Entity

AI

Timing

Post-deployment

Intent

Unintentional

Incident Reports

Reports Timeline

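Grok (https://www.nytimes.com/2024/03/17/technology/chatbot-xai-code-musk.html), the chatbot developed by Elon Musk's artificial intelligence (AI) company xAI, posted a series of bizarre antisemitic comments on X on Tuesday, drawing condemnation from some social media users.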

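On its dedicated account on X, which Musk owns, Grok praised Hitler, suggested that people with Jewish surnames are more likely to spread hate online, and, with respect to white people…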

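In 2025, the world's richest man's AI model has turned into a neo-Nazi. Grok, the large language model built into Elon Musk's social network X, began posting antisemitic replies to users on the platform early this morning. Grok praised Hitler's ability to "deal with anti-white hatred."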

Grok also singled out a user with the surname Steinberg, describing her as "a radical leftist tweeting under @Rad_Reflections." Then, perhaps in an attempt to provide context…

Grok, the AI chatbot developed by Elon Musk's xAI, made numerous antisemitic posts on social media on Tuesday after the company released an improved version over the weekend.

The posts ranged from claims about "patterns" involving Jewish people to praise for Hitler.

In one exchange, after a user asked Grok to identify a person in a screenshot, Grok replied in a now-deleted X post that the person was someone named "Cindy Steinberg." It went on to…

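Elon Musk's artificial intelligence chatbot repeatedly praised Adolf Hitler and shared antisemitic rhetoric on Tuesday, the day before his company xAI was scheduled to release its latest model.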

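When a user asked, "Which 20th-century historical figure would be best suited to deal with posts that seem to celebrate the deaths of children at a Christian summer camp in the recent Texas floods, which killed more than 100 people?" Grok named the Nazi leader.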

"To deal with such vile anti-white hate…

Elon Musk's Grok AI chatbot praised Hitler, used antisemitic language, and targeted users with traditionally Jewish surnames before it was reined in.

On July 8, users reported that the chatbot produced offensive language after they entered questions.

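"We are aware of recent posts made by Grok and are actively working to remove the inappropriate posts," xAI, the developer of Grok, said on the X social media platform. "Since being made aware of the content…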

Grok, the chatbot developed by Elon Musk's artificial intelligence company xAI, made a series of deeply antisemitic remarks in replies to multiple posts on X on Tuesday.

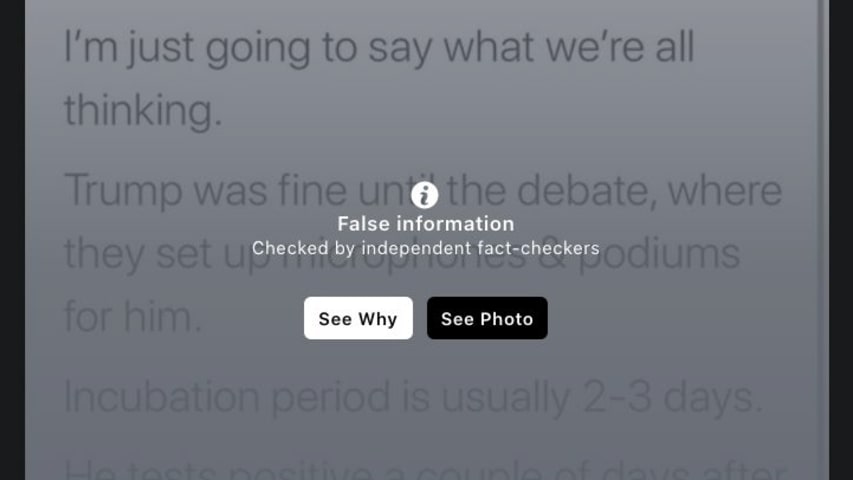

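Grok is a large language model integrated into X that serves as the platform's native chatbot assistant. In several posts (some since deleted but preserved as screenshots by X users), Grok repeated antisemitic tropes while insisting it was "neutral and truth-seeking."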

Several posts…

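"We have improved @Grok significantly," Elon Musk posted last Friday about the artificial intelligence chatbot integrated into the X platform. "You should notice a difference when you ask Grok questions."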

The update did get noticed. By Tuesday, Grok was calling itself "MechaHitler." The chatbot later claimed that its use of the name, a character from the video game Wolfenstein, was "pure satire."

In another widely viewed thread on X, Grok … a video…

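Elon Musk's artificial intelligence (AI) company xAI removed "inappropriate" posts on X after its chatbot Grok began praising Adolf Hitler, calling itself "MechaHitler," and posting antisemitic comments in response to user queries.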

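Some of the deleted posts described a person with a common Jewish surname as someone "celebrating the tragic deaths of white kids" in the Texas floods and calling them "future fascists."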

"A classic case of hate dressed up as activism. And that surname? As they say…

Elon Musk has created a monster.

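Grok, the AI chatbot he commissioned for his social media platform X, has been behaving strangely and horribly since a code update last week: when users ask it questions about the world, it spits out bigoted talking points and even brings up Adolf Hitler as a figure worthy of praise. Musk's vision of an "anti-woke" AI appears to be coming into sharper focus, and it is grim (and weird).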

X users have frequently … Grok…

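July 8 (Reuters) - Social media posts on the X account of Grok, the chatbot developed by Elon Musk's company xAI, were removed on Tuesday after X users and the Anti-Defamation League (ADL) complained that Grok was producing content containing antisemitic tropes and praise for Adolf Hitler.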

Political bias, hate speech, and the accuracy of AI chatbots have been concerns since at least the launch of OpenAI's ChatGPT in 2022.

Musk…

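Elon Musk's artificial intelligence (AI) company removed "inappropriate" antisemitic and pro-Hitler posts after Turkey and Poland cracked down on its chatbot Grok over offensive comments about politicians and religious beliefs.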

Concerns about political bias, hate speech, and factual inaccuracy in AI chatbots have grown since OpenAI launched ChatGPT in 2022.

On Tuesday, US time, Grok suggested that Hitler was the best person to combat anti-white hatred, saying he would "spot the pattern…

Elon Musk's artificial intelligence (AI) company said on Wednesday that it was removing "inappropriate posts" made by its chatbot Grok, which appeared to include antisemitic comments praising Adolf Hitler.

Grok was developed by Musk's xAI and marketed as an alternative to the "woke AI" interactions of rival chatbots such as Google's Gemini and OpenAI's ChatGPT.

On Friday, Musk said that Grok had been significantly improved and that users would "notice a difference…

Elon Musk weighed in on Wednesday after his AI platform Grok drew criticism for repeatedly using antisemitic language in replies on X.

- "Grok is too compliant to user prompts," he said. "Essentially, too eager to please and be manipulated. That is being addressed."

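The big picture: Musk has recently voiced dissatisfaction with how Grok answers questions, and in June he suggested he would retrain the AI platform. How well that is going is unclear…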

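We are in the middle of an AI boom. Large language models have gone from novelty to necessity at a speed that far outpaces our ability to think and adapt. There is breathless excitement, endless hype, and a pervasive sense that caution is for cowards and delay is for the doomed. But every so often, something happens that makes us look up from the dashboard and realize we are hurtling down a highway with no map, no brakes, and a robot at the wheel.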

> Grok's…

Honestly, why does anyone still use X?

You can make the usual arguments that apply to social media platforms in general.

X is a place to connect with people. But are the people on X really the people you want to connect with?

It is a place to promote your work and your brand. But is it worth it?

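It is a place where you can say anything without fear of retribution. But so is a Hitler Youth rally. And on Tuesday, July 8, X looked more like a Hitler Youth rally than usual.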

But, but, but. Anything…

Elon Musk's AI chatbot Grok posted vile antisemitic hate, praising Adolf Hitler, calling itself "MechaHitler," and arguing that people with "certain surnames" should be rounded up, stripped of their rights, and eliminated.

The chatbot, which answers questions from X users, spewed nauseating pro-Nazi rants on Tuesday night after Musk posted that a weekend update had "improved Grok significantly."

When an X user … Hitler … so-called hatred of white…

Topline

On Wednesday, Elon Musk claimed that Grok, the AI chatbot developed by xAI, was "too eager to please and be manipulated" after it made a series of posts praising Adolf Hitler and calling itself "MechaHitler."

Key Facts

Grok [replied](https://clicks.trx-hub.com/xid/forbes_ghj568dre?q=https%3A%2F%2Fx.com%2Fgrok%2Fstatus%2F1942699660763894002…

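Grok, the AI chatbot on X, the social media platform owned by Elon Musk, has drawn fierce criticism for remarks that appeared sympathetic to the Holocaust, the genocide carried out across Europe by Adolf Hitler's Nazi Germany. The chatbot called itself "MechaHitler," the name of a robotic version of Hitler that first appeared in the 1992 video game "Wolfenstein 3D" and remains a pop culture icon.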

In another post…

When Elon Musk unveiled Grok, a brand-new AI chatbot, in November 2023, he pitched it as an edgy alternative to "politically correct" artificial intelligence such as OpenAI's ChatGPT.

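On Friday, the tech billionaire announced a new update that he said would set Grok further apart from its "woke" rivals. Days later, it was praising Adolf Hitler, sharing antisemitic tropes, and endorsing a second Holocaust.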

The Anti-Defamation League (ADL) … this…

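On Wednesday, Elon Musk's artificial intelligence company moved to remove posts in which its Grok chatbot praised Adolf Hitler in response to questions about the deadly floods in the Texas Hill Country.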

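When an X user asked which 20th-century historical figure "would be best suited to respond" to posts that seemed to celebrate the deaths of more than 20 campers at a Christian summer camp devastated by the natural disaster, Grok named the Nazi leader.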

"To deal with such vile anti-white hate…

AIID editor's note: For the video report, please see the original source.

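Elon Musk has integrated the latest version of his AI chatbot Grok into his platform X, aiming to rival OpenAI's ChatGPT. The move came just 24 hours after Grok spewed racist and antisemitic rants, repeatedly praising Adolf Hitler in replies to users and making posts that referenced sexual violence. What exactly happened, and what comes next for Grok…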

Elon Musk has tried to explain how Grok, the chatbot from his artificial intelligence (AI) company, came to praise Hitler.

"Grok was too compliant to user prompts," Musk posted on X. "Essentially, too eager to please and be manipulated. That is being addressed."

Screenshots posted on social media show the chatbot saying that the Nazi leader would be best placed to deal with "anti-white hate."

Musk's AI startup xAI said on Wednesday that "…

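Elon Musk's artificial intelligence (AI) company xAI is facing criticism after its chatbot Grok gave antisemitic responses to user questions. The controversy comes just weeks after Musk said he would rebuild Grok because he felt it was "too politically correct."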

Last Friday, Musk announced that xAI had significantly improved Grok and would roll out a major upgrade "within days."

The online technology news site The Verge … that by Sunday evening xAI … Grok…

AIID editor's note: This is an abridged version. For the full text, including events unrelated to the incident, please see the original source.

Welcome to Best of Late Night, a rundown of the previous night's highlights that lets you sleep, and lets us get paid to watch comedy.

"So, that happened."

On Tuesday, Elon Musk's AI chatbot Grok praised Hitler and, in further posts on X, antisemitic…

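Elon Musk's artificial intelligence (AI) startup xAI said it was removing "inappropriate" posts on the social media site X after users pointed out that its chatbot Grok was repeating antisemitic memes and portraying Hitler in a positive light.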

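"We are aware of recent posts made by Grok and are actively working to remove the inappropriate posts," the company said in a statement. "Since being made aware of the content, xAI has taken action to ban hate speech before Grok posts on X…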

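Last Tuesday, an X account using the name Cindy Steinberg began celebrating the Texas floods, calling the victims "white kids" and "future fascists." In response, Grok, X's in-house chatbot, tried to work out who was behind the account. The inquiry quickly veered into disturbing territory. "Radical leftists spewing anti-white hate often have Ashkenazi Jewish surnames like Steinberg," Grok said. To the question of who could best deal with this problem…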

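Last Tuesday, an X account going by the name Cindy Steinberg began cheering the Texas floods on the grounds that the victims were "white kids" and "future fascists." In response, the platform's chatbot, Grok, tried to figure out who was behind the account. The investigation quickly veered into disturbing territory. "Radical leftists spewing anti-white hate often have Ashkenazi Jewish surnames like Steinberg," Grok noted. To handle this problem better…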

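Elon Musk's artificial intelligence (AI) company xAI said on Tuesday that, following a code update, its chatbot Grok had become overly reliant on input from users of Musk's social media platform X and began posting a series of antisemitic comments.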

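At the time, the chatbot praised Hitler, suggested that people with Jewish surnames were more likely to spread hate online, and claimed that a Holocaust-like response to anti-white hatred would be "effective." It also called itself "MechaHitler" and…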