Citation Information for Incident 84

Incident Status

CSETv0 Taxonomy Classifications

Taxonomy Details

Full Description

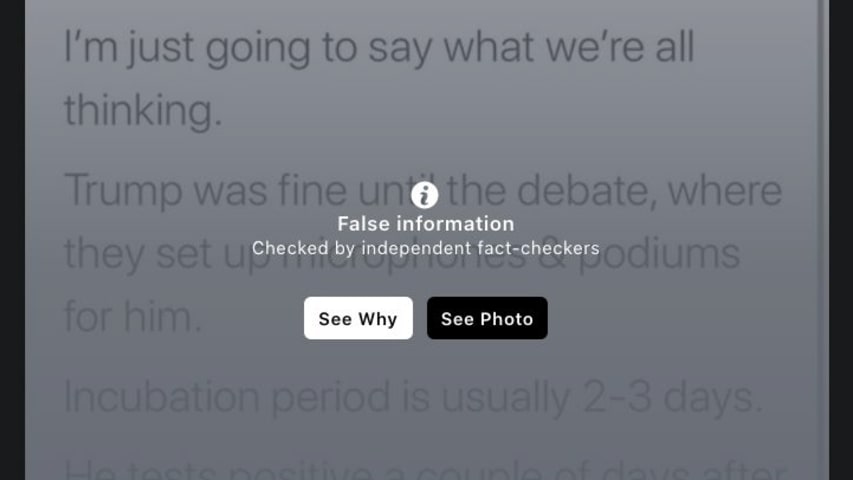

Avaaz, an international advocacy group, released a review of Facebook's misinformation-identifying software showing that the labeling process failed to label 42% of posts containing false information, most of them concerning COVID-19 and the 2020 US presidential election. Avaaz found that when the cropping or background of a post containing misinformation was adjusted, Facebook's algorithm failed to recognize it as misinformation, allowing it to be posted and shared without a cautionary label.

Short Description

Avaaz, an international advocacy group, released a review of Facebook's misinformation-identifying software showing that the labeling process failed to label 42% of posts containing false information, most of them concerning COVID-19 and the 2020 US presidential election.

Severity

Unclear/unknown

Harm Type

Harm to social or political systems

AI System Description

Facebook's algorithm and process for placing cautionary labels on posts determined to contain misinformation

System Developer

Sector of Deployment

Information and communication

Relevant AI functions

Perception, Cognition

AI Techniques

Language recognition, content filtering, image recognition

AI Applications

misinformation labeling, image recognition, image labeling

Location

Global

Named Entities

Facebook, Avaaz, Reuters, AP, PolitiFact

Technology Purveyor

Beginning Date

2020-10-09T07:00:00.000Z

Ending Date

2020-10-09T07:00:00.000Z

Near Miss

Unclear/unknown

Intent

Unclear

Lives Lost

No

Infrastructure Sectors

Communications

Data Inputs

User posts

CSETv1 Taxonomy Classifications

Taxonomy Details

Harm Distribution Basis

none

Sector of Deployment

information and communication

Incident Reports

Reports Timeline

Something as simple as changing the font of a message or cropping an image can be all it takes to bypass Facebook's defenses against hoaxes and lies.

A new analysis by the international advocacy group Avaaz shines light on why, despite the …

Variants

Similar Incidents
