Incident 824: A deepfake video falsely attributes allegations to a former student in an attack on vice-presidential candidate Tim Walz.

Incident Stats

Risk Subdomain

4.1. Disinformation, surveillance, and influence at scale

Risk Domain

Malicious Actors & Misuse

Entity

AI

Timing

Post-deployment

Intent

Intentional

Incident Reports

Report Timeline

Matthew Metro did not recognize the face that appeared on his cellphone screen when he clicked a link a friend had texted him last week. But after he started playing the online video, he was…

A pro-Russian propaganda network known for creating fake whistleblower videos appears to be behind a coordinated effort to promote wild, baseless allegations that Minnesota Governor …

Earlier this month, Democratic vice-presidential candidate Tim Walz was beset by MAGA rumors that he had had a sexual relationship with one of his former students. It now appears that these rumors…

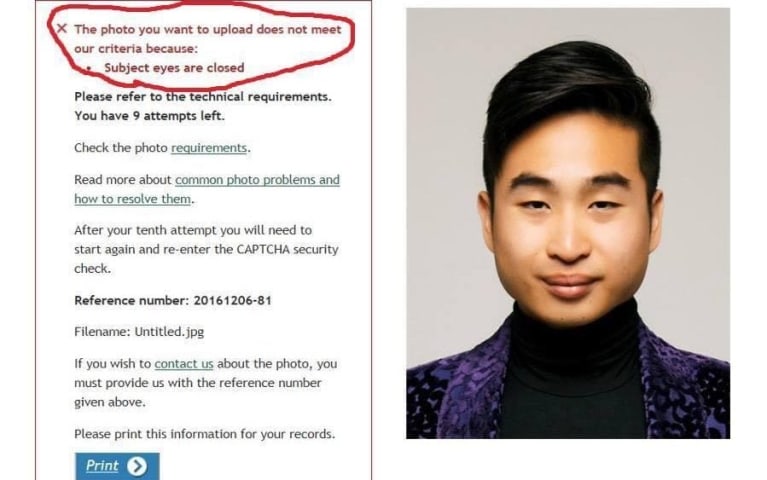

A video widely shared on X purports to show a former student of Mankato West High School accusing Tim Walz of sexually assaulting him when the Democratic vice-presidential candidate taught at the Minnesota school …

U.S. intelligence officials said Tuesday that Russians seeking to disrupt the U.S. elections had created a fake video and other material smearing Democratic vice-presidential candidate Tim Walz …

Russian disinformation groups likely orchestrated baseless allegations targeting Minnesota Governor Tim Walz, falsely accusing the vice-presidential candidate of sexually assaulting his students when he …

According to U.S. intelligence officials, Russia is behind salacious false allegations against Democratic vice-presidential candidate Tim Walz that circulated widely on social media last week.

"…

Russia is behind recent social media posts containing baseless and salacious allegations against Minnesota Governor Tim Walz, U.S. intelligence officials said Tuesday.…

The Russian government created a viral fake video of a former student of Democratic vice-presidential candidate Tim Walz accusing him of sexual abuse, U.S. intelligence agencies said.

"The [intelligence community]…

If you are even slightly familiar with politics, you probably already know about the famous October surprise, a news event that breaks in the month before the election and has the potential to influence the race for the pre…

A user well entrenched in the QAnon conspiracy sphere published a viral post on X claiming that a former student of Minnesota Governor Tim Walz, Matthew Metro, had been sexually assaulted by Walz in 1997 while he was still a stu…

Iranian hackers are preparing for a potentially major influence operation ahead of the U.S. elections, alongside stepped-up election interference efforts by China and Russia, Microsoft said …

Russian spies hired a former U.S. Marine to spread fake news aimed at smearing the Harris campaign, which likely included rumors that Tim Walz had sexually assaulted his former stu…

We are launching The Dougan Russian Disinformation Depository to give a name, a face, a personality, a personal modus operandi, and a personal history to the sometimes nebulous phenomenon of Russian disinformation.

John Mark Dougan,…

Russian, Iranian, and Chinese trolls are stepping up their disinformation efforts around the U.S. elections as November 5 approaches, but, apart from undermining faith in the democratic process and trust in the result…

In the final days before Tuesday's vote, Russia has dropped all pretense of not trying to interfere in the U.S. presidential election.

The Kremlin's information warriors have not only produced a last…