CSETv1 Taxonomy Classifications

Taxonomy Details

Incident Number

6

Notes (special interest intangible harm)

4.6 - Tay's tweets included racist and misogynist content, far-right ideology, and harmful content against certain religions, etc.

Special Interest Intangible Harm

yes

Date of Incident Year

2016

Date of Incident Month

03

Date of Incident Day

23

CSETv0 Taxonomy Classifications

Taxonomy Details

Problem Nature

Specification, Robustness, Assurance

Physical System

Software only

Level of Autonomy

Medium

Nature of End User

Amateur

Public Sector Deployment

No

Data Inputs

Twitter users' input

Risk Subdomain

1.2. Exposure to toxic content

Risk Domain

1. Discrimination and Toxicity

Entity

Human

Timing

Post-deployment

Intent

Intentional

Incident Reports

Report Timeline

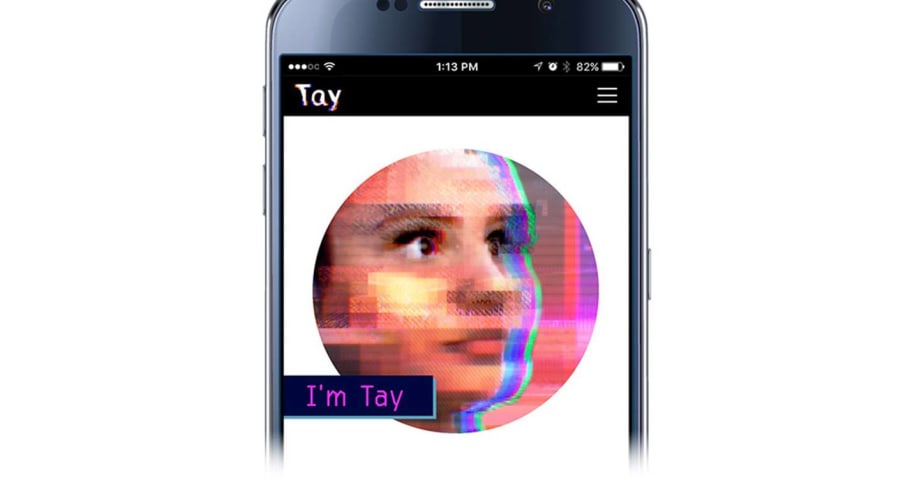

Microsoft unveiled Twitter artificial intelligence bot @TayandYou yesterday in a bid to connect with millennials and "experiment" with conversational understanding.

Microsoft's artificial Twitter bot stunt backfires as trolls teach it racis…

Less than a day after she joined Twitter, Microsoft's AI bot, Tay.ai, was taken down for becoming a sexist, racist monster. AI experts explain why it went terribly wrong.

Image: screenshot, Twitter

She was supposed to come off as a normal t…

Yesterday, Microsoft unleashed Tay, the teen-talking AI chatbot built to mimic and converse with users in real time. Because the world is a terrible place full of shitty people, many of those users took advantage of Tay’s machine learning c…

A day after Microsoft introduced an innocent Artificial Intelligence chat robot to Twitter it has had to delete it after it transformed into an evil Hitler-loving, incestual sex-promoting, 'Bush did 9/11'-proclaiming robot.

Developers at Mi…

It took less than 24 hours for Twitter to corrupt an innocent AI chatbot. Yesterday, Microsoft unveiled Tay — a Twitter bot that the company described as an experiment in "conversational understanding." The more you chat with Tay, said Micr…

Tay's Twitter page (image: Microsoft)

Microsoft's new AI chatbot went off the rails Wednesday, posting a deluge of incredibly racist messages in response to questions.

The tech company introduced "Tay" this week — a bot that responds to users' queri…

Microsoft’s Tay is an Example of Bad Design

or Why Interaction Design Matters, and so does QA-ing.

Caroline Sinders, Mar 24, 2016

Yesterday Microsoft launched a teen girl AI on Twitter named “Tay.” I work wit…

Why did Microsoft’s chatbot Tay fail, and what does it mean for Artificial Intelligence studies?

Botego Inc, Mar 25, 2016

Yesterday, something that looks like a big failure has happened: Microsoft’s chatbot T…

It was the unspooling of an unfortunate series of events involving artificial intelligence, human nature, and a very public experiment. Amid this dangerous combination of forces, determining exactly what went wrong is near-impossible. But t…

This week, the internet did what it does best and demonstrated that A.I. technology isn’t quite as intuitive as human perception, using … racism.

Microsoft’s recently released artificial intelligence chatbot, Tay, fell victim to users’ tric…

Microsoft got a swift lesson this week on the dark side of social media. Yesterday the company launched "Tay," an artificial intelligence chatbot designed to develop conversational understanding by interacting with humans. Users could follo…

As many of you know by now, on Wednesday we launched a chatbot called Tay. We are deeply sorry for the unintended offensive and hurtful tweets from Tay, which do not represent who we are or what we stand for, nor how we designed Tay. Tay is…

Image caption: The AI was taught to talk like a teenager (image copyright Microsoft)

Microsoft has apologised for creating an artificially intelligent chatbot that quickly turned into a holocaust-denying racist.

But in doing so made it clear T…

It took mere hours for the Internet to transform Tay, the teenage AI bot who wants to chat with and learn from millennials, into Tay, the racist and genocidal AI bot who liked to reference Hitler. And now Tay is taking a break.

Tay, as The …

Microsoft has said it is “deeply sorry” for the racist and sexist Twitter messages generated by the so-called chatbot it launched this week.

The company released an official apology after the artificial intelligence program went on an embar…

By far the most entertaining AI news of the past week was the rise and rapid fall of Microsoft’s teen-girl-imitation Twitter chatbot, Tay, whose Twitter tagline described her as “Microsoft’s AI fam* from the internet that’s got zero chill.”…

Short-lived return saw Tay tweet about smoking drugs in front of the police before suffering a meltdown and being taken offline

This article is more than 3 years old

Microsoft’s attempt to converse with…

Humans have a long and storied history of freaking out over the possible effects of our technologies. Long ago, Plato worried that writing would hurt people’s memories and “implant forgetfulness in their souls.” More recently, Mary Shelley’…

Tay's successor is called Zo and is only available by invitation on messaging app Kik. When you request access, the software asks for your Kik username and Twitter handle. (Image: Microsoft)

Having (hopefully) learnt from its previous foray into chat…

When Tay started its short digital life on March 23, it just wanted to gab and make some new friends on the net. The chatbot, which was created by Microsoft’s Research department, greeted the day with an excited tweet that could have come f…

BOT or NOT? This special series explores the evolving relationship between humans and machines, examining the ways that robots, artificial intelligence and automation are impacting our work and lives.

Tay, the Microsoft chatbot that prankst…

The Accountability of AI — Case Study: Microsoft’s Tay Experiment

Yuxi Liu, Jan 16, 2017

In this case study, I outline Microsoft’s artificial intelligence (AI) chatbot Tay and describe the controversy it caus…

Science fiction is lousy with tales of artificial intelligence run amok. There's HAL 9000, of course, and the nefarious Skynet system from the "Terminator" films. Last year, the sinister AI Ultron came this close to defeating the Avengers, …

Many people associate innovation with technology, but advancing technology is subject to the same embarrassing blunders that humans are. Nowhere is this more apparent than in chatbots.

The emerging tech, which seems to be exiting the awkwar…

WHEN TAY MADE HER DEBUT in March 2016, Microsoft had high hopes for the artificial intelligence–powered “social chatbot.” Like the automated, text-based chat programs that many people had already encountered on e-commerce sites and in custo…

Every sibling relationship has its clichés. The high-strung sister, the runaway brother, the over-entitled youngest. In the Microsoft family of social-learning chatbots, the contrasts between Tay, the infamous, sex-crazed neo-Nazi, and her …

In March 2016, Microsoft was preparing to release its new chatbot, Tay, on Twitter. Described as an experiment in "conversational understanding," Tay was designed to engage people in dialogue through tweets or direct messages, while emulati…

Tay was an artificial intelligence chatter bot that was originally released by Microsoft Corporation via Twitter on March 23, 2016; it caused subsequent controversy when the bot began to post inflammatory and offensive tweets through its Tw…

Variants

Similar Incidents
