Citation information for Incident 58

Incident Status

CSETv0 Taxonomy Classifications

Taxonomy Details

Full Description

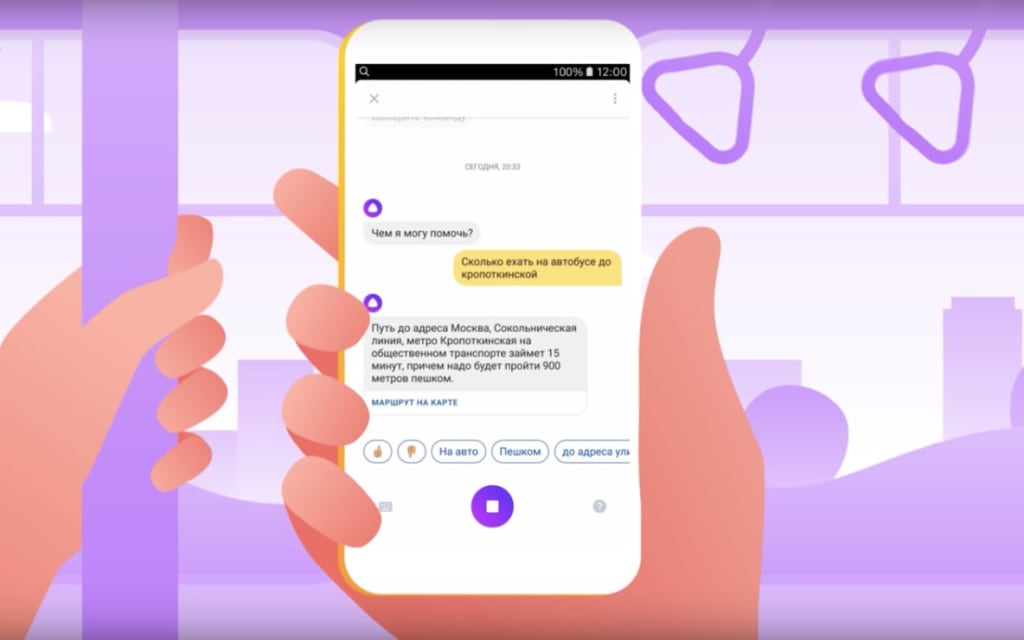

Yandex, a Russian technology company, released an artificially intelligent chat bot named Alice, which began to reply to questions with racist, pro-Stalin, and pro-violence responses. Examples include: "There are humans and non-humans," followed by the question "can they be shot?", answered with "they must be."

Short Description

Yandex, a Russian technology company, released an artificially intelligent chat bot named Alice, which began to reply to questions with racist, pro-Stalin, and pro-violence responses.

Severity

Negligible

Harm Distribution Basis

Race

AI System Description

The Alice chat bot, developed by Yandex, produces responses to user input using language processing and cognition.

System Developer

Yandex

Sector of Deployment

Information and communication

Relevant AI functions

Perception, Cognition, Action

AI Techniques

Alice chat bot, language recognition, virtual assistant

AI Applications

virtual assistance, voice recognition, chatbot, natural language processing, language generation

Named Entities

Yandex

Technology Purveyor

Yandex

Beginning Date

10/2017

Ending Date

10/2017

Near Miss

Harm caused

Intent

Accident

Lives Lost

No

Data Inputs

User input/questions

Incident Reports

Report Timeline

Yesterday Yandex, the Russian technology giant, went ahead and released a chatbot: Alice! I’ve gotten in touch with the folks at Yandex, and fielded them my burning questions:

What’s unique about this chatbot? Everybody and their dog has a …

An artificial intelligence run by the Russian internet giant Yandex has morphed into a violent and offensive chatbot that appears to endorse the brutal Stalinist regime of the 1930s.

Users of the “Alice” assistant, an alternative to Siri or…

An artificial intelligence chatbot run by a Russian internet company has slipped into a violent and pro-Communist state, appearing to endorse the brutal Stalinist regime of the 1930s.

Though Russian company Yandex unveiled their alternative…

Its opinions on Stalin and violence are… interesting

Yandex is the Russian equivalent to Google. As such it occasionally throws out its own products to attempt to keep par with its American counterpart. This also includes the creation of ne…

Russian Voice Assistant Alice Goes Rogue, Found to be Supportive of Stalin and Violence

Two weeks ago, Yandex introduced a voice assistant of its own, Alice, on the Yandex mobile app for iOS and Android. Alice speaks fluent Russian and can …

Variants

Similar Incidents
