Risk Subdomain

7.3. Lack of capability or robustness

Risk Domain

- AI system safety, failures, and limitations

Entity

AI

Timing

Post-deployment

Intent

Unintentional

Incident Reports

Report Timeline

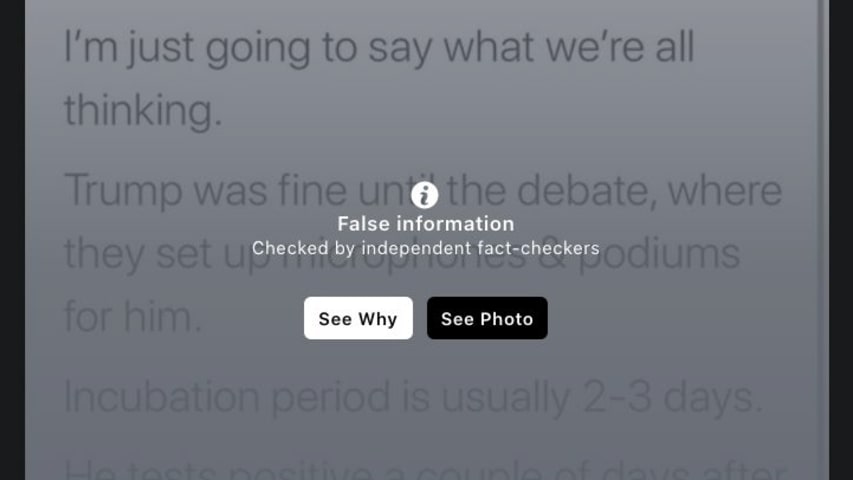

Canada’s most sexually provocative onions were pulled down from Facebook after the social media giant told a produce company that its images went against advertising guidelines, the CBC reported.

Now, Facebook has admitted the ad was picked…

Are onions naked or clothed in their natural form? Facebook's artificial intelligence (AI) seems to have trouble telling the difference between pictures with sexual connotations referring to the human body and vegetables that are just…

Facebook's AI struggles to tell the difference between sexual pictures of the human body and globular vegetables.

A garden center in Newfoundland, Canada, received a notice from Facebook on Monday about an ad it had uploaded for Walla Walla …

Variants

Similar Incidents

