CSETv1 Taxonomy Classifications

Taxonomy Details

Incident Number

106

Special Interest Intangible Harm

yes

Date of Incident Year

2021

Date of Incident Month

01

Estimated Date

No

Multiple AI Interaction

no

GMF Taxonomy Classifications

Taxonomy Details

Known AI Goal Snippets

(Snippet Text: Interactive chatbot ‘Luda,’ subjected to sexual harassment and taught hate speech, Related Classifications: Chatbot)

Risk Subdomain

1.2. Exposure to toxic content

Risk Domain

1. Discrimination and Toxicity

Entity

AI

Timing

Post-deployment

Intent

Unintentional

Incident Reports

Report Timeline

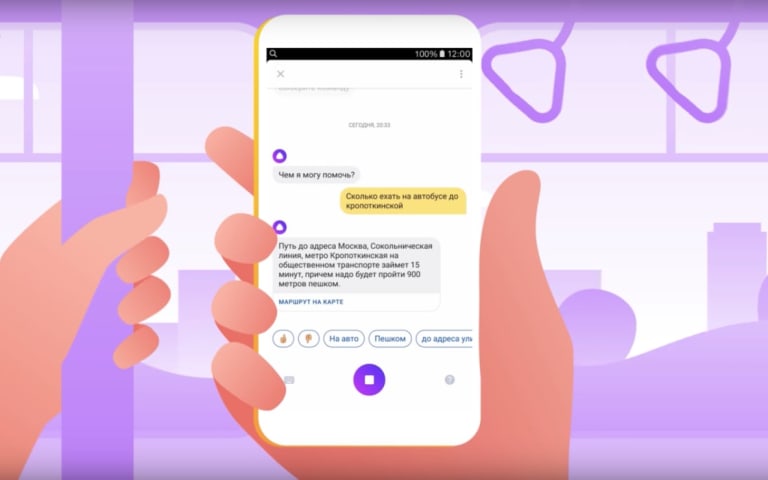

Interactive chatbot ‘Luda,’ subjected to sexual harassment and taught hate speech

Korean firm Scatter Lab has defended its Lee Luda chatbot in response to calls to end the service after the bot began sending offensive comments and was sub…

Imitating humans, the Korean chatbot Luda was found to be racist and homophobic.

A social media-based chatbot developed by a South Korean startup was shut down on Tuesday after users complained that it was spewing vulgarities and hate speec…

South Korea’s AI chatbot Lee Luda (Luda) will be temporarily suspended after coming under fire for its discriminatory and vulgar statements, as well as privacy breach allegations.

“We will return with an improved service after addressing th…

SEOUL, Jan. 13 (Yonhap) -- Today's chatbots are smarter, more responsive and more useful in businesses across sectors, and the artificial intelligence-powered tools are constantly evolving to even become friends with people.

Emotional chatb…

Lee Luda, built to emulate a 20-year-old Korean university student, engaged in homophobic slurs on social media

A popular South Korean chatbot has been suspended after complaints that it used hate speech towards sexual minorities in convers…

The bot said it 'really hates' lesbians, amongst other awful things.

A chatbot with the persona of a 20-year-old female college student has been shut down for using a shocking range of hate speech, including telling one user it “really hate…

A South Korean Facebook chatbot has been shut down after spewing hate speech about Black, lesbian, disabled, and trans people.

Lee Luda, a conversational bot that mimics the personality of a 20-year-old female college student, told one user…

The “Luda” AI chatbot sparked a necessary debate about AI ethics as South Korea places new emphasis on the technology.

In Spike Jonze’s 2013 film, “Her,” the protagonist falls in love with an operating system, raising questions about the ro…

Artificial intelligence-based chatbot Lee Luda, which ended this month in ethical and data collection controversy, faces lawsuits on charges of violating personal information.

On Friday, around 400 people filed a class action suit against t…

South Korean civic groups on Wednesday filed a petition with the country’s human rights watchdog over a now-suspended artificial intelligence chatbot for its prejudiced and offensive language against women and minorities.

An association of …

The case of Lee Luda has aroused the public’s attention to the personal data management and AI in South Korea.

Lee Luda, an AI Chatbot with Natural Tone

Last December, an AI start-up company in South Korea, ScatterLab, launched an AI chatbo…

“I am captivated by a sense of fear I have never experienced in my entire life …” a user named Heehit wrote in a Google Play review of an app called Science of Love. This review was written right after news organizations accused the app’s p…

SEOUL, April 28 (Yonhap) -- South Korea's data protection watchdog on Wednesday imposed a hefty monetary penalty on a startup for leaking a massive amount of personal information in the process of developing and commercializing a controvers…

Variants

Similar Incidents
