Incident 74: Detroit Police Wrongfully Arrested a Black Man Due to a Faulty FRT

Entities

CSETv0 Taxonomy Classifications

Problem Nature

Specification, Assurance

Physical System

Software only

Level of Autonomy

High

Nature of End User

Amateur

Public Sector Deployment

Yes

Data Inputs

biometrics, images, camera footage

GMF Taxonomy Classifications

Known AI Goal Snippets

(Snippet Text: On a Thursday afternoon in January, Robert Julian-Borchak Williams was in his office at an automotive supply company when he got a call from the Detroit Police Department telling him to come to the station to be arrested., Related Classifications: Face Recognition)

CSETv1 Taxonomy Classifications

Incident Number

74

Risk Subdomain

1.3. Unequal performance across groups

Risk Domain

1. Discrimination and Toxicity

Entity

AI

Timing

Post-deployment

Intent

Unintentional

Incident Reports

Report Timeline

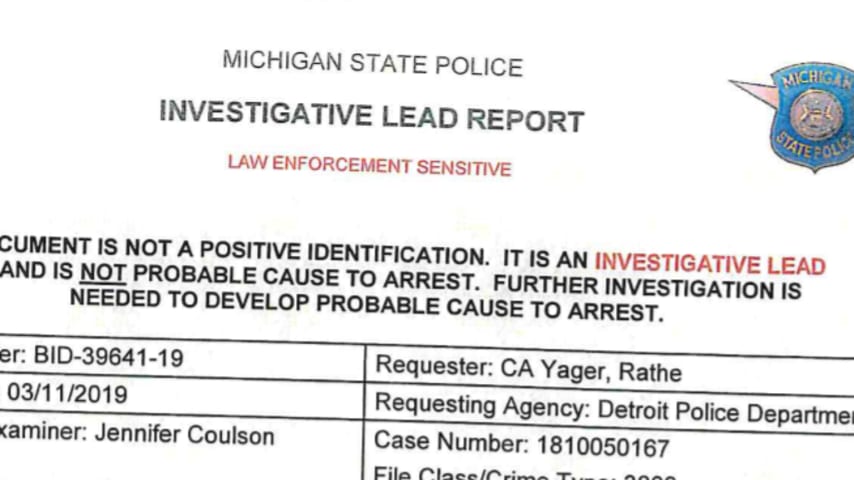

Detroit police wrongfully arrested Robert Julian-Borchak Williams in January 2020 [for a shoplifting incident that had taken place two years earlier](https://www.nytimes.com/2020/06/24/technology/facial-recognition-arrest.html). Even though Willia…

Note: In response to this article, the Wayne County prosecutor's office said that Robert Julian-Borchak Williams could have the case and his fingerprint data expunged. "We apologize," said the prosecutor…

**Updated at 9:05 p.m. ET Wednesday**

Detroit police were trying to determine who had stolen five watches from a Shinola retail store. Authorities say the thief made off with an estimated $3,800 worth of merchandise.

L…

On Wednesday morning, the ACLU announced that it was filing a complaint against the Detroit Police Department on behalf of Robert Williams, a Black Michigan resident who the group said is one of the first people falsely arrested …

Detroit police have used highly unreliable facial recognition technology almost exclusively against Black people so far in 2020, according to the Detroit Police Department's own statistics. The use of the…

Racial bias and facial recognition. A Black man in New Jersey is arrested by police and spends ten days in jail after a false facial recognition match

Concerns about accuracy and racial bias conce…

Teaneck just banned facial recognition technology for police. Here's why

Facial recognition program that works even if you are wearing a mask. A Japanese company claims to…

A Michigan man has sued Detroit police after being wrongfully arrested and falsely identified as a shoplifting suspect by the department's facial recognition software, in one of the first lawsuits of its kind to…

Since a Minneapolis police officer killed George Floyd in May 2020, reigniting massive Black Lives Matter protests, communities across the country have been rethinking law enforcement, from granular scrutiny of the…

ROBERT WILLIAMS was working in the yard with his family one afternoon last August when his daughter Julia said they needed a family meeting immediately. Once everyone was inside the house, the…

In January 2020, Robert Williams spent 30 hours in a Detroit jail because facial recognition technology suggested he was a criminal. The match was wrong, and Mr. Williams sued.

On Friday, as part of a legal settlement over his wrongful arre…

Variants

Similar Incidents
