Incident Status

CSETv0 Taxonomy Classifications

Taxonomy Details

Full Description

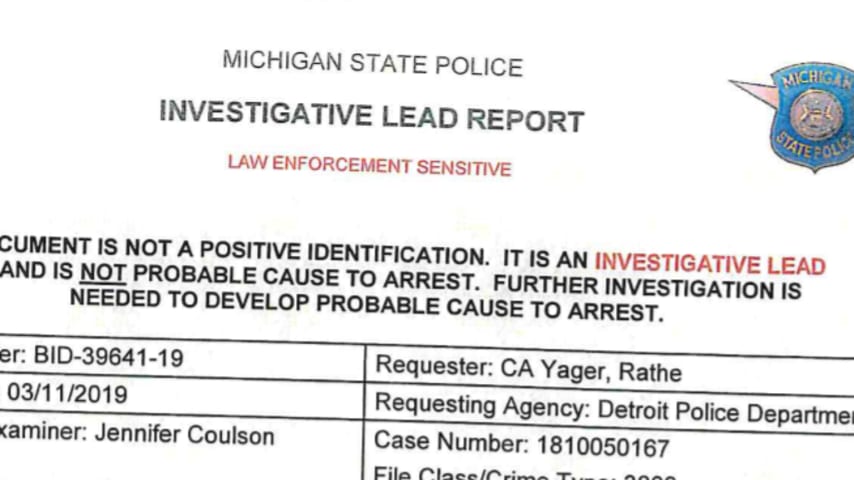

In January 2020, the Detroit Police Department wrongfully arrested Robert Julian-Borchak Williams after facial recognition technology provided by DataWorks Plus mistook him for a Black man recorded on a CCTV camera stealing. This incident is cited as evidence that facial recognition continues to exhibit racial bias, especially against Black and Asian people.

Short Description

The Detroit Police Department wrongfully arrested a Black man due to its faulty facial recognition program provided by DataWorks Plus.

Severity

Moderate

Harm Distribution Basis

Race

Harm Type

Harm to civil liberties

AI System Description

DataWorks Plus facial recognition software was provided to the Detroit Police Department and focuses on biometrics storage and matching, including fingerprints, palm prints, irises, tattoos, and mugshots.

System Developer

DataWorks Plus

Sector of Deployment

Public administration and defence

Relevant AI functions

Perception, Cognition, Action

AI Techniques

facial recognition, machine learning, environmental sensing

AI Applications

Facial recognition, environmental sensing, biometrics, image recognition, speech recognition

Location

United States (Detroit, Michigan)

Named Entities

Detroit Police Department, DataWorks Plus

Technology Purveyor

DataWorks Plus

Beginning Date

06/2020

Ending Date

06/2020

Near Miss

Harm caused

Intent

Accident

Lives Lost

No

Data Inputs

biometrics, images, camera footage

GMF Taxonomy Classifications

Taxonomy Details

Known AI Goal

Face Recognition

Known AI Technology

Face Detection

Potential AI Technology

Convolutional Neural Network, Distributional Learning

Potential AI Technical Failure

Dataset Imbalance, Generalization Failure, Underfitting, Covariate Shift

Incident Reports

Report Timeline

Detroit police wrongfully arrested Robert Julian-Borchak Williams in January 2020 for a shoplifting incident that had taken place two years earlier. Even though Williams had nothing to do with the incident, facial recognition technology use…

Note: In response to this article, the Wayne County prosecutor’s office said that Robert Julian-Borchak Williams could have the case and his fingerprint data expunged. “We apologize,” the prosecutor, Kym L. Worthy, said in a statement, add…

Updated 9:05 p.m. ET Wednesday

Police in Detroit were trying to figure out who stole five watches from a Shinola retail store. Authorities say the thief took off with an estimated $3,800 worth of merchandise.

Investigators pulled a security…

On Wednesday morning, the ACLU announced that it was filing a complaint against the Detroit Police Department on behalf of Robert Williams, a Black Michigan resident whom the group said is one of the first people falsely arrested due to fac…

Detroit police have used highly unreliable facial recognition technology almost exclusively against Black people so far in 2020, according to the Detroit Police Department’s own statistics. The department’s use of the technology gained nati…

Racial bias and facial recognition. Black man in New Jersey arrested by police and spends ten days in jail after false face recognition match

Accuracy and racial bias concerns about facial recognition technology continue with the news of a …

Teaneck just banned facial recognition technology for police. Here's why

Facial recognition program that works even if you’re wearing a mask. A Japanese company says they’ve developed a system that can bypass face c…

A Michigan man has sued Detroit police after he was wrongfully arrested and falsely identified as a shoplifting suspect by the department’s facial recognition software in one of the first lawsuits of its kind to call into question the contr…

Since a Minneapolis police officer killed George Floyd in May 2020 and re-ignited massive Black Lives Matter protests, communities across the country have been re-thinking law enforcement, from granular scrutiny of the ways that police us…

Robert Williams was doing yard work with his family one afternoon last August when his daughter Julia said they needed a family meeting immediately. Once everyone was inside the house, the 7-year-old girl closed all the blinds and curtains …

Variants

Similar Incidents
