CSETv1 Taxonomy Classifications

Taxonomy Details

Incident Number

49

Notes (special interest intangible harm)

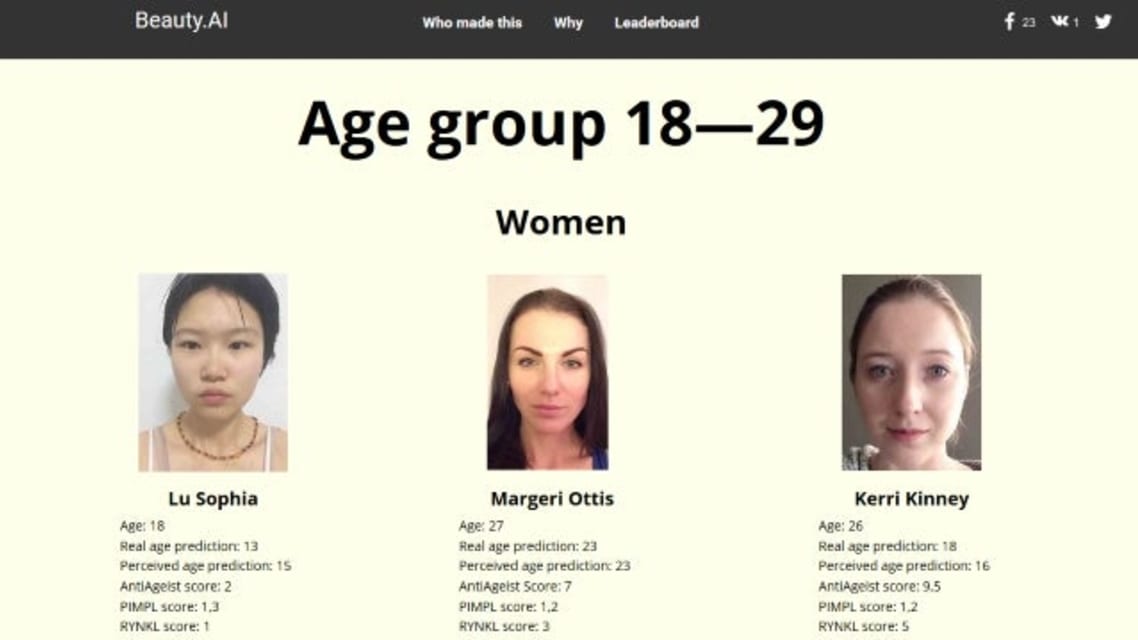

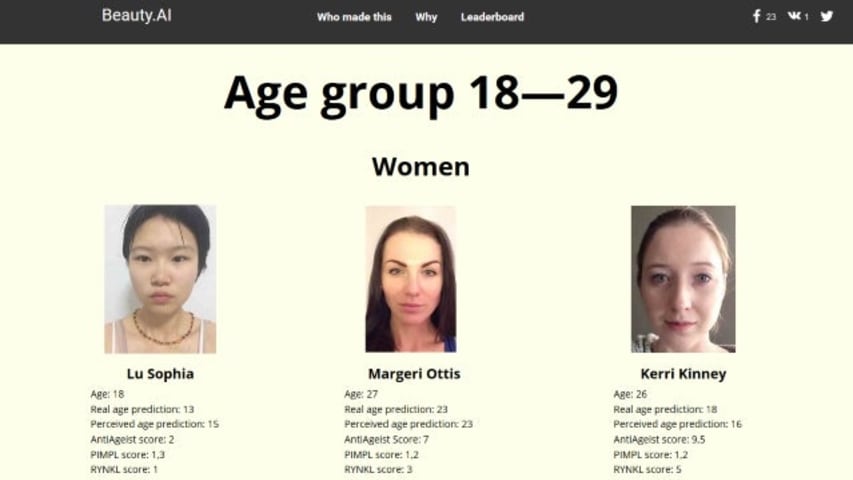

Beauty.AI judged mostly white applicants to be the most attractive among all contestants.

Special Interest Intangible Harm

yes

Date of Incident Year

2016

Date of Incident Month

08

Estimated Date

No

CSETv0 Taxonomy Classifications

Taxonomy Details

Problem Nature

Specification

Physical System

Software only

Level of Autonomy

High

Nature of End User

Amateur

Public Sector Deployment

No

Data Inputs

images of people's faces

Risk Subdomain

1.1. Unfair discrimination and misrepresentation

Risk Domain

- Discrimination and Toxicity

Entity

AI

Timing

Post-deployment

Intent

Unintentional

Incident Reports

Report Timeline

Image: Flickr/Veronica Jauriqui

Beauty pageants have always been political. After all, what speaks more strongly to how we see each other than which physical traits we reward as beautiful, and which we code as ugly? It wasn't until 1983 tha…

The first international beauty contest decided by an algorithm has sparked controversy after the results revealed one glaring factor linking the winners

The first international beauty contest judged by “machines” was supposed to use objecti…

Only a few winners were Asian and one had dark skin; most were white

Just months after Microsoft's Tay artificial intelligence sent racist messages on Twitter, another AI seems to have followed suit.

More than 6,000 selfies of individuals w…

With more than 6,000 applicants from over 100 countries competing, the first international beauty contest judged entirely by artificial intelligence just came to an end. The results are a bit disheartening.

The team of judges, a five robot …

It’s not the first time artificial intelligence has been in the spotlight for apparent racism, but Beauty.AI’s recent competition results have caused controversy by clearly favouring light skin.

The competition, which ran online and was ope…

If you’re one who joins beauty pageants or merely watches them, how would you feel about a computer algorithm judging a person’s facial attributes? Perhaps we should ask those who actually volunteered to be contestants in a beauty contest …

An AI designed to do X will eventually fail to do X. Spam filters block important emails, GPS provides faulty directions, machine translations corrupt the meaning of phrases, autocorrect replaces a desired word with a wrong one, biometric s…

It’s long been thought that robots equipped with artificial intelligence would be the cold, purely objective counterpart to humans’ emotional subjectivity. Unfortunately, it would seem that many of our imperfections have found their way int…

Variants

Similar Incidents