Incident 1: Google's YouTube Kids App Presents Inappropriate Content

Entities

CSETv1 Taxonomy Classifications

Incident Number

1

Special Interest Intangible Harm

yes

Date of Incident Year

2016

Estimated Date

Yes

Multiple AI Interaction

no

Embedded

no

CSETv0 Taxonomy Classifications

Problem Nature

Unknown/unclear

Physical System

Software only

Nature of End User

Amateur

Public Sector Deployment

No

Data Inputs

Videos

Lives Lost

No

GMF Taxonomy Classifications

Known AI Goal Snippets

(Snippet Text: An off-brand Paw Patrol video called "Babies Pretend to Die Suicide" features several disturbing scenarios. The YouTube Kids app filters out most, but not all, of the disturbing videos.

Before any video appears in the YouTube Kids app, it's filtered by algorithms that are supposed to identify appropriate children's content. YouTube also has a team of human moderators that review any videos flagged in the main YouTube app by volunteer contributors (users who flag inappropriate content) or by systems that identify recognizable children's characters in the questionable video. Many of those views came from YouTube's "up next" and "recommended" video section that appears while watching any video. YouTube's algorithms attempt to find videos that you may want to watch based on the video you chose to watch first. If you don't pick another video to watch after the current video ends, the "up next" video will automatically play.; Related Classifications: Content Recommendation, Content Search)

Risk Subdomain

1.2. Exposure to toxic content

Risk Domain

- Discrimination and Toxicity

Entity

AI

Timing

Post-deployment

Intent

Unintentional

Incident Reports

Report Timeline

Videos filled with profanity, sexually explicit material, alcohol, tobacco, and drug references: this is what parents are finding on Google's YouTube Kids app. That's right, it's an app for kids. Now, parents of…

Update, November 7, 2017: TODAY, Parents is re-sharing this 2016 story because a new batch of inappropriate videos has surfaced on YouTube Kids and is making headlines. While the names of the channels and the…

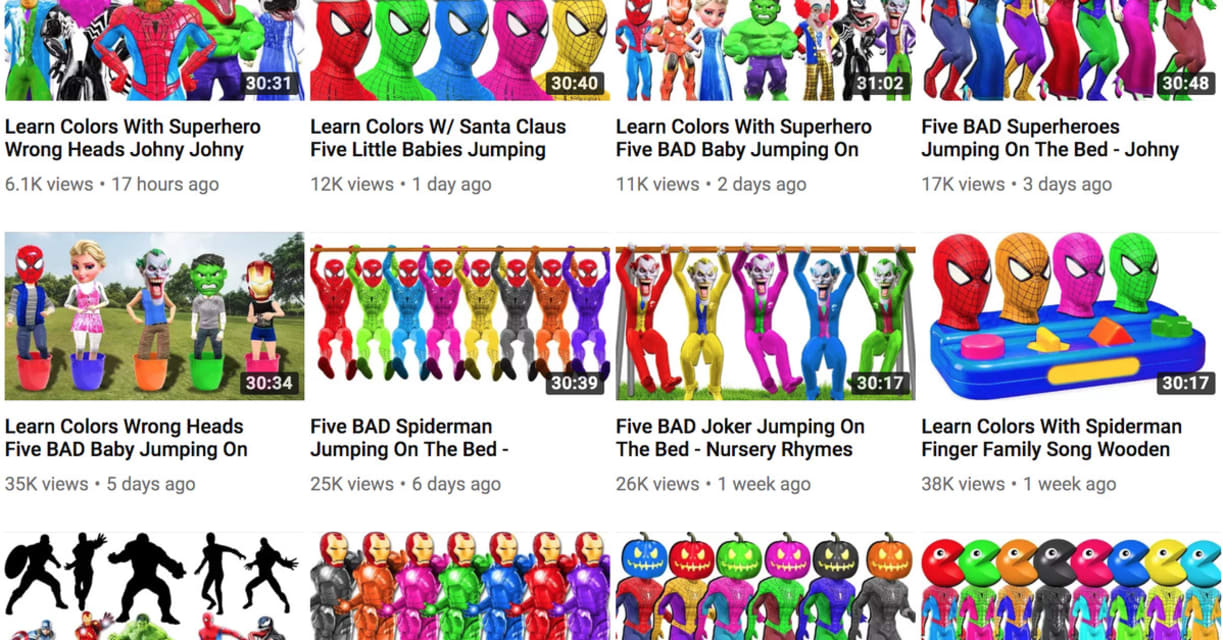

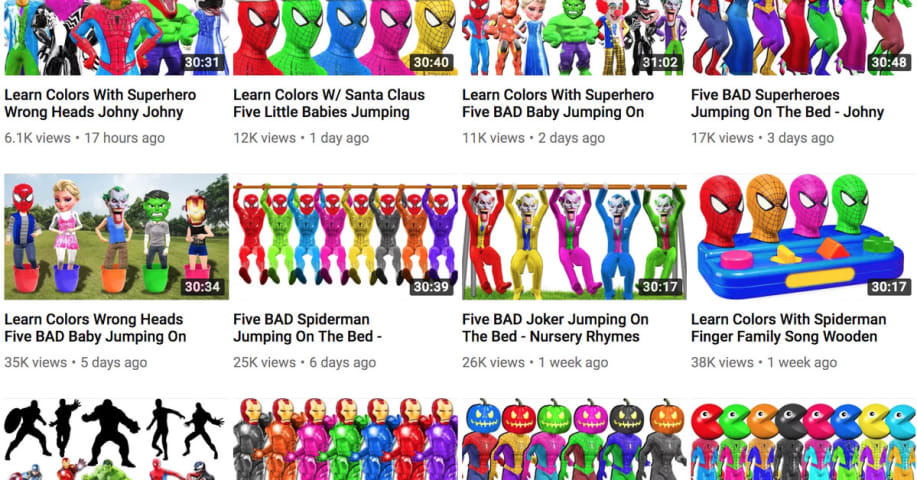

Thousands of videos on YouTube look like versions of popular cartoons but contain disturbing, inappropriate content that is not suitable for children.

If you're not paying…

An off-brand Paw Patrol video called "Babies Pretend to Die Suicide" features several disturbing scenarios. YouTube

Parents who let their kids watch YouTube unsupervised should pay closer attention to…

Earlier this week, a report in The New York Times and a blog post on Medium drew a lot of attention to a world of strange and sometimes disturbing YouTube videos aimed at young children. The genre, which…

Recent news stories and blog posts highlighted the most vulnerable part of YouTube Kids, Google's child-focused version of the vast world of YouTube. While all content on YouTube Kids is meant to be suitable for…

In recent weeks, the world learned through a series of reports that YouTube is plagued by problems with children's content. The company has stepped up moderation in recent weeks to combat the wave of…

Google-owned YouTube apologized again after more disturbing videos appeared on its YouTube Kids app.

Researchers found several inappropriate videos, including one of a burning plane from the…

Children were able to watch David Icke conspiracy videos through YouTube Kids. Flickr/Tyler Merbler

YouTube's app made specifically for children is meant to filter out adult content and provide a "world of …

Video still from a knockoff version of Minnie Mouse that appeared on the now-suspended Simple Fun channel. Simple Fun / WIRED

YouTube videos that use child-oriented search terms are evading…

YouTube Kids, which has been criticized for inadvertently recommending disturbing videos to children, said Wednesday that it would introduce several ways for parents to limit what can be watched on the popular app.

Starting this…

After several reports of inappropriate content on YouTube over the past year, "Good Morning America" wanted to take a closer look at the site. How often do children end up watching inappropriate content on the platform…

YouTube is a massive industry and a browsing staple that people use to meet their educational and entertainment needs. According to Business Insider, the website has around 1.8 billion registered…

Variants

Similar Incidents

Alexa Plays Pornography Instead of Kids Song

Amazon Censors Gay Books
