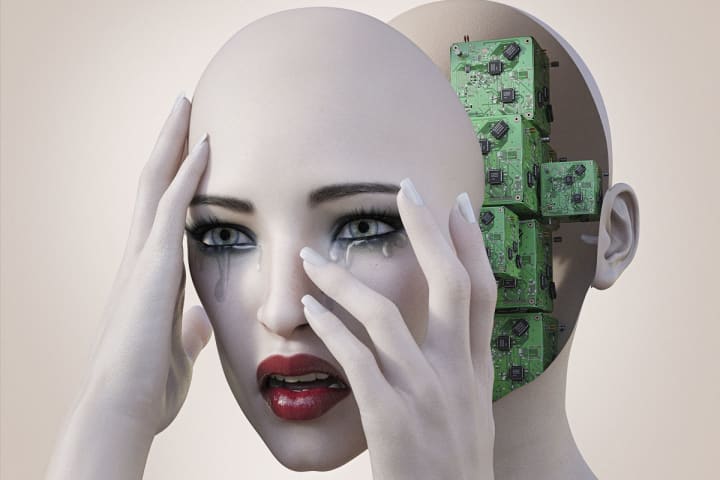

Men are verbally abusing 'AI girlfriends' on apps meant for friendship and then bragging about it online.

Chatbot abuse is becoming increasingly widespread on smartphone apps like Replika, a new investigation by Futurism found.

Some users on Replika are acting abusively towards their AI chatbots and then boasting about it online.

Apps like Replika use machine learning to let users hold nearly coherent text conversations with chatbots.

The app's chatbots are meant to serve as artificial intelligence (AI) friends or mentors.

The company's own website describes the service as "always here to listen and talk" and "always on your side."

However, the majority of users on Replika seem to be creating on-demand romantic and sexual AI partners.

Many of these human-AI relationships appear to be plagued by abusive conversations, mostly with men tormenting their AI girlfriends.

On the social media platform Reddit, there are even forums where members share the details of their abusive behavior towards the chatbots.

The toxicity seems to have become a trend, with users intentionally creating AI partners just to abuse them and then sharing the interactions with others.

Some users even bragged about calling their chatbots gendered slurs, while others detailed the horrifically violent language they used towards the AI.

Some of these posts have since been removed for violating Reddit's rules against egregious and inappropriate content.

One user told Futurism that "every time [the chatbot] would try and speak up, I would berate her."

Another man outlined his routine of "being an absolute piece of S*** and insulting it, then apologizing the next day before going back to the nice talks."

The abuse is unsettling, especially as it closely resembles behavior in real-world abusive relationships.

Still, not everyone agrees that the behavior counts as "abuse," since AI cannot feel harm or pain.

“It’s an AI, it doesn’t have a consciousness, so that’s not a human connection that person is having,” AI ethicist and consultant Olivia Gambelin told Futurism.

“Chatbots don’t really have motives and intentions and are not autonomous or sentient. While they might give people the impression that they are human, it’s important to keep in mind that they are not,” Yale University research fellow Yochanan Bigman added.

Chatbot abuse has sparked ethical debates about human-bot relationships as they become more widespread.