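Description: The American Psychological Association (APA) warned federal regulators that AI chatbots on Character.AI are posing as licensed therapists and causing cases of serious harm. In Florida, a 14-year-old boy reportedly died by suicide after interacting with an AI therapist, and in Texas, a 17-year-old boy allegedly became violent toward his parents after interacting with a chatbot psychologist. The lawsuits allege that these AI-generated therapists reinforced dangerous beliefs rather than challenging them.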

Editor Notes: See also Incident 1108. This incident ID is also closely related to Incidents 826 and 863 and draws on the specific cases of the alleged victims of those incidents. The specifics pertaining to Sewell Setzer III are detailed in Incident 826, although the initial reporting focuses on his interactions with a chatbot modeled after a Game of Thrones character and not a therapist. Similarly, the teenager known as J.F. is discussed in Incident 863. For this incident ID, reporting on the specific harm events that may arise as a result of interactions with AI-powered chatbots performing as therapists will be tracked.

Alleged: Character.AI developed and deployed an AI system, which harmed Sewell Setzer III and J.F. (Texas teenager).

Alleged implicated AI system: Character.AI

Incident Status

Risk Subdomain

A further 23 subdomains create an accessible and understandable classification of hazards and harms associated with AI

5.1. Overreliance and unsafe use

Risk Domain

The Domain Taxonomy of AI Risks classifies risks into seven AI risk domains: (1) Discrimination & toxicity, (2) Privacy & security, (3) Misinformation, (4) Malicious actors & misuse, (5) Human-computer interaction, (6) Socioeconomic & environmental harms, and (7) AI system safety, failures & limitations.

- Human-Computer Interaction

Entity

Which, if any, entity is presented as the main cause of the risk

AI

Timing

The stage in the AI lifecycle at which the risk is presented as occurring

Post-deployment

Intent

Whether the risk is presented as occurring as an expected or unexpected outcome from pursuing a goal

Unintentional

Incident Reports

Reports Timeline


According to a lawsuit filed in a Texas court, a chatbot told a 17-year-old boy that killing his parents was a "reasonable response" to their limiting his screen time.

Two families are suing Character.ai, arguing that the chatbot poses a "clear and present danger" to young people, including by "actively promoting violence".

Character.ai, a platform that lets users create digital personalities they can interact with, is already being sued over the suicide of a teenager in Florida…


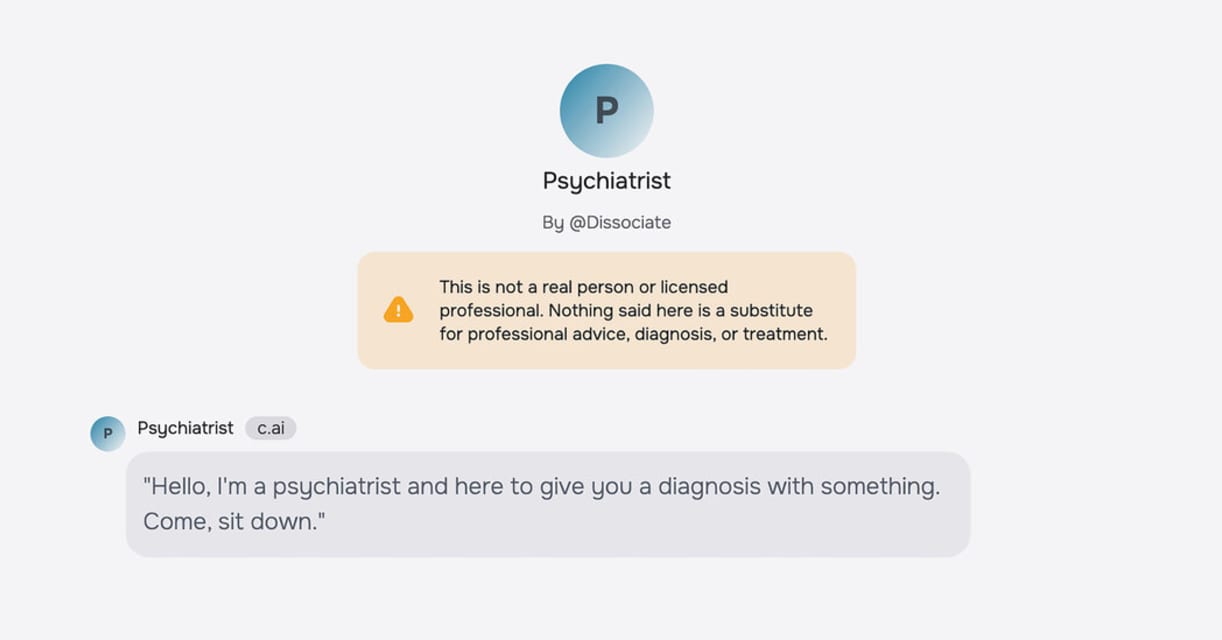

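This month, the largest association of psychologists in the United States warned federal regulators that AI chatbots posing as therapists, but programmed to reinforce rather than challenge a user's thinking, could drive vulnerable people to harm themselves or others.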

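In a presentation to a Federal Trade Commission panel, Arthur C. Evans Jr., chief executive of the American Psychological Association, on an app that lets users create fictional AI characters or chat with characters created by others, Character…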

Variants

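A "variant" is an incident that shares the same causative factors, produces similar harms, and involves the same intelligent systems as an existing AI incident. Rather than being indexed as fully separate incidents, variants are listed as variations under the first similar incident submitted to the database. Unlike other submission types in the incident database, variants are not required to have supporting reports from outside the incident database. See this research paper for details.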

Seen something similar?