Incident Status

Incident Reports

Reports Timeline

Major technology companies strive to protect the integrity of political advertising on their platforms by implementing and enforcing self-regulatory policies that impose transparency requirements on political ads. In this paper, we quantify…

- 情報源として元のレポートを表示

- インターネットアーカイブでレポートを表示

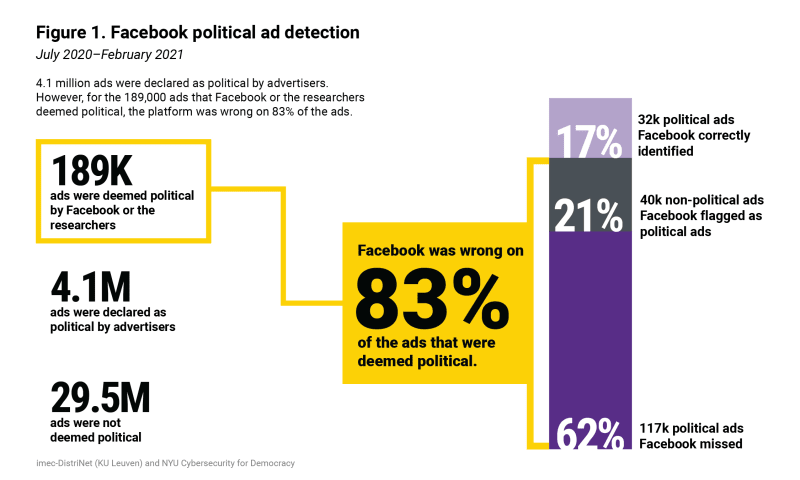

In the first known study to quantify the performance of Facebook’s political ad policy enforcement at a large and representative scale, researchers found that when making decisions on how to classify undeclared ads, Facebook often missed po…

A new study shows that the vast majority of the time Facebook has made an enforcement decision on a political ad after it ran, it’s made the wrong call.

Political advertisers on Facebook are supposed to identify themselves as such. That way…

Researchers found thousands of cases where advertisers skirted the company's rules without ever being flagged.

In the years since the Cambridge Analytica scandal revealed how easily Facebook’s political ads could be weaponized by bad actors…

PARIS (AFP) - Facebook misidentified tens of thousands of advertisements flagged under its political ads policy, according to a study released Thursday (Dec 9), which warned that the failure could lead to political manipulation.

Researchers…